Let’s talk about some of the popular container orchestration tools available in the market.

What is Container Orchestration?

Container platforms like Docker are very popular these days for packaging applications built on a microservices architecture. Containers are highly scalable and can be created on demand. That is manageable for a few containers, but imagine you have hundreds of them.

Managing the container lifecycle becomes extremely difficult when the number of containers changes dynamically with demand.

Container orchestration solves the problem by automating the scheduling, deployment, scaling, load balancing, availability, and networking of containers. In other words, it is the automated management of the lifecycle of containers and services, and of multi-container, microservices-based applications at scale.

Luckily, there are many container orchestration tools available in the market.

Let’s explore them!

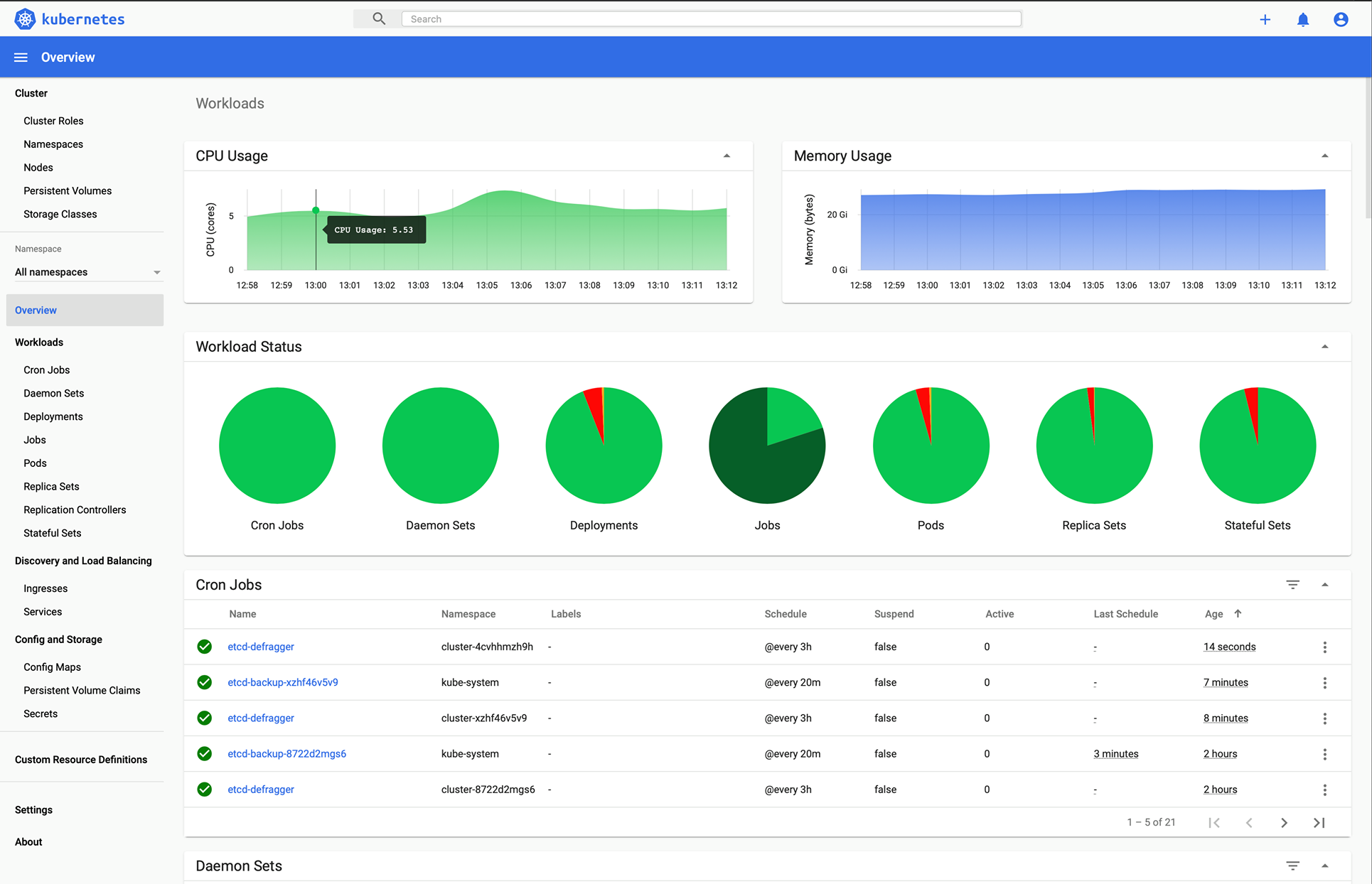

Kubernetes

You guessed it, didn’t you?

Kubernetes is an open-source platform that was originally designed by Google and is now maintained by the Cloud Native Computing Foundation. It supports both declarative configuration and automation, and helps automate the deployment, scaling, and management of containerized workloads and services.

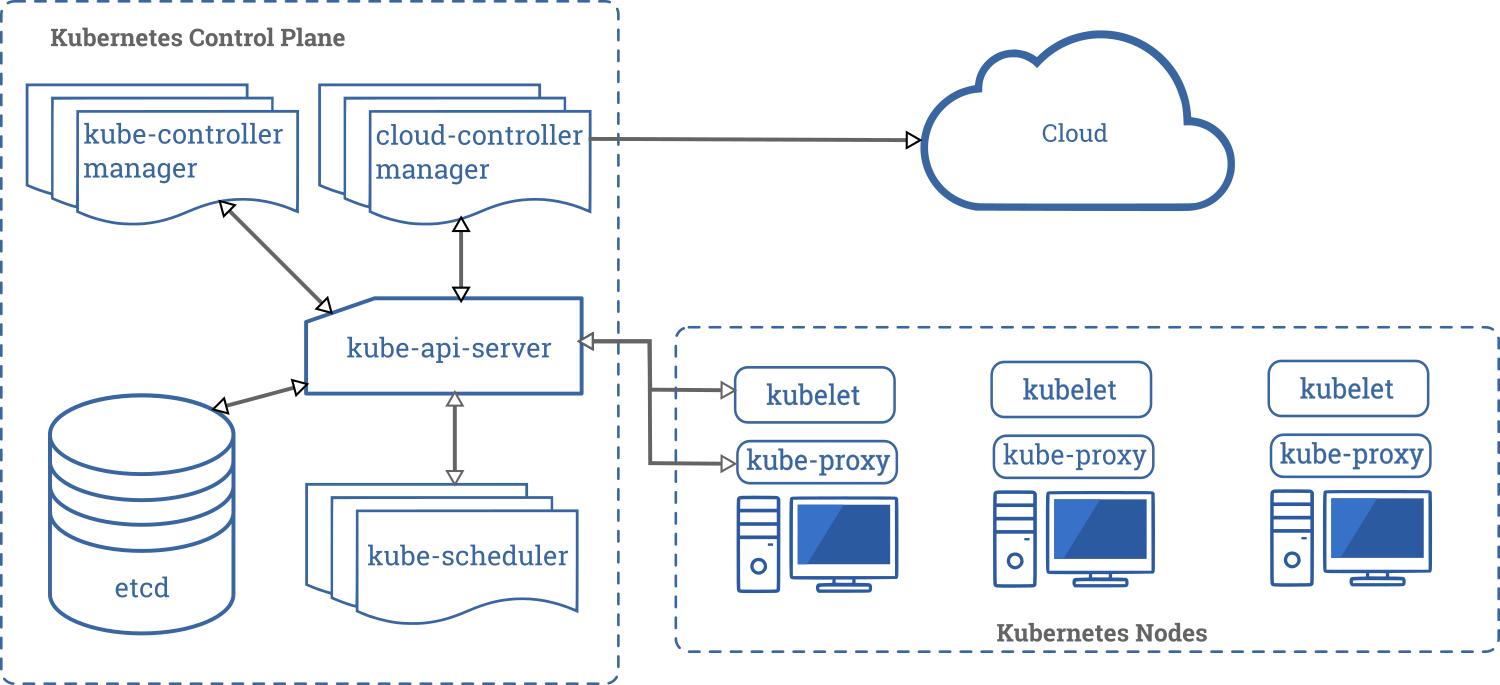

The Kubernetes API enables communication between users, cluster components, and external third-party components. The Kubernetes control plane and the worker nodes run on a group of machines that together form the cluster. An application workload consists of one or more Pods that run on worker nodes, and the control plane manages the Pods and the worker nodes.

Companies like Babylon, Booking.com, and AppDirect use Kubernetes extensively.

Features

- Service discovery and load balancing

- Storage orchestration

- Automated rollouts and rollbacks

- Horizontal scaling

- Secret and configuration management

- Self-healing

- Batch execution

- IPv4/IPv6 dual-stack

- Automatic bin packing
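To make the "Horizontal scaling" feature above a little more concrete, here is a minimal Python sketch of the core rule the Kubernetes Horizontal Pod Autoscaler documents: the desired replica count is ceil(currentReplicas × currentMetric / targetMetric). The function name and the `min_replicas`/`max_replicas` defaults are illustrative assumptions, and the real controller also applies a tolerance band and stabilization behaviour that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply ceil(current_replicas * current_metric / target_metric),
    then clamp the result to [min_replicas, max_replicas].

    The clamp bounds are hypothetical defaults chosen for this example.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))
```

The same ratio drives both scale-out and scale-in, which is why a single target value is enough to describe the desired behaviour.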

Want to learn Kubernetes? Check out these learning resources.

OpenShift

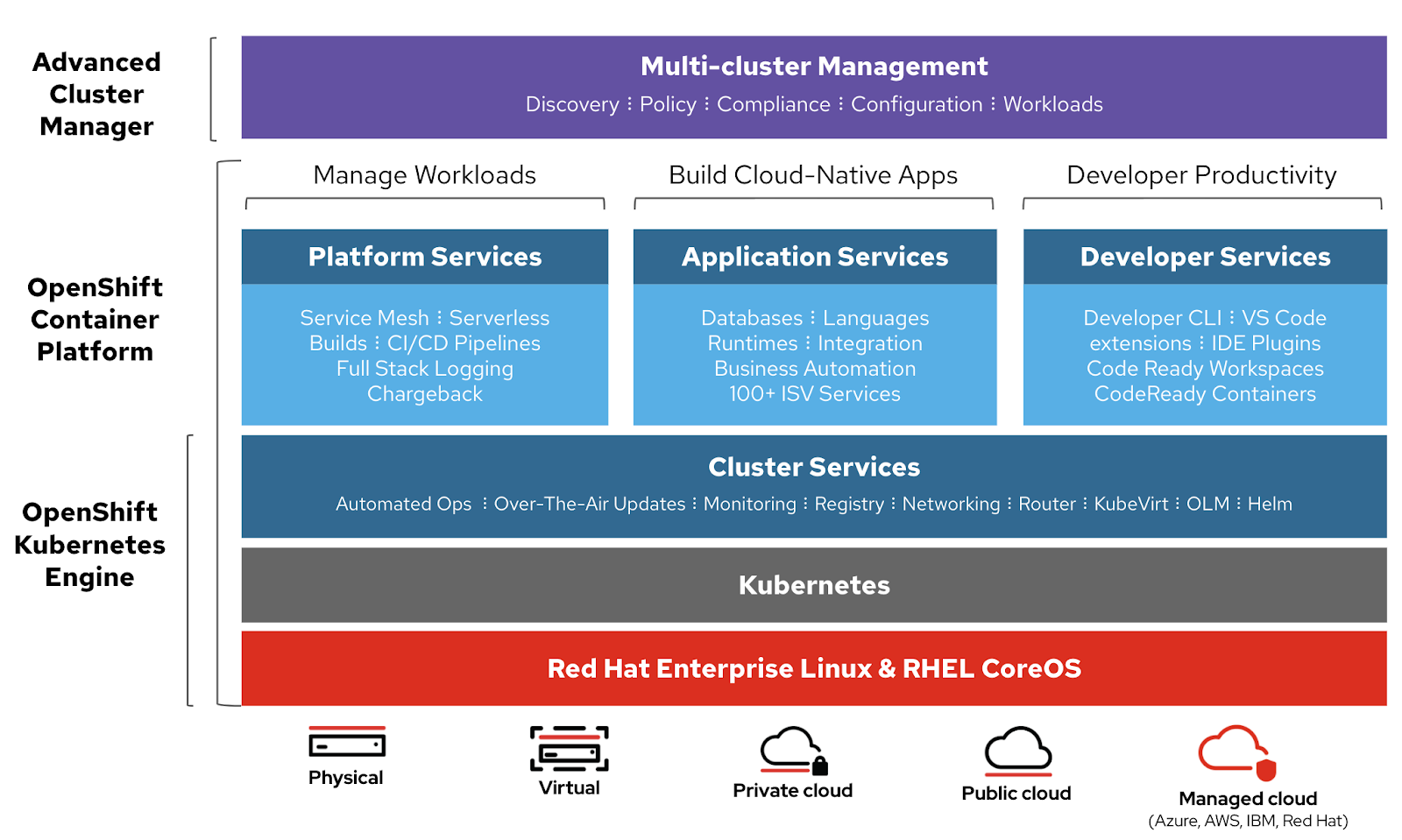

Red Hat offers OpenShift Container Platform as a platform as a service (PaaS). It helps automate applications on secure and scalable resources in hybrid cloud environments, and provides an enterprise-grade platform for building, deploying, and managing containerized applications.

It’s built on Red Hat Enterprise Linux and the Kubernetes engine. OpenShift offers various functionalities to manage clusters via UI and CLI. Red Hat also provides OpenShift in two more variants:

- OpenShift Online – offered as software as a service (SaaS)

- OpenShift Dedicated – offered as a managed service

OpenShift Origin (Origin Community Distribution) is the open-source upstream community project used in OpenShift Container Platform, OpenShift Online, and OpenShift Dedicated.

Nomad

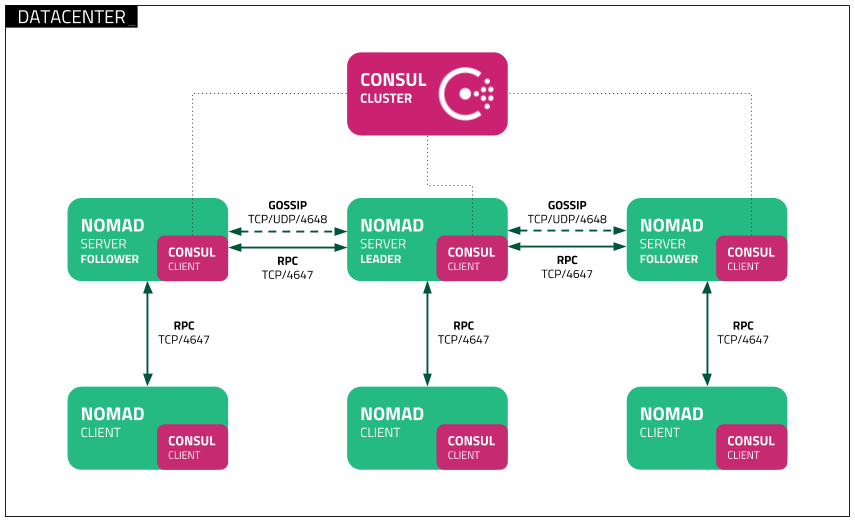

Nomad is a simple, flexible, and easy-to-use workload orchestrator for deploying and managing containers and non-containerized applications across on-premises and cloud environments at scale. Nomad runs as a single binary with a small resource footprint (35MB) and is supported on macOS, Windows, and Linux.

Developers use declarative infrastructure-as-code (IaC) to define how an application should be deployed. Nomad automatically recovers applications from failures.

Nomad orchestrates applications of any type, not just containers. It provides first-class support for Docker, Windows, Java, VMs, and more.

Features

- Simple & Reliable

- Modernize Legacy Applications without Rewrite

- Easy Federation at Scale

- Proven Scalability

- Multi-Cloud with Ease

- Native Integrations with Terraform, Consul, and Vault

Docker Swarm

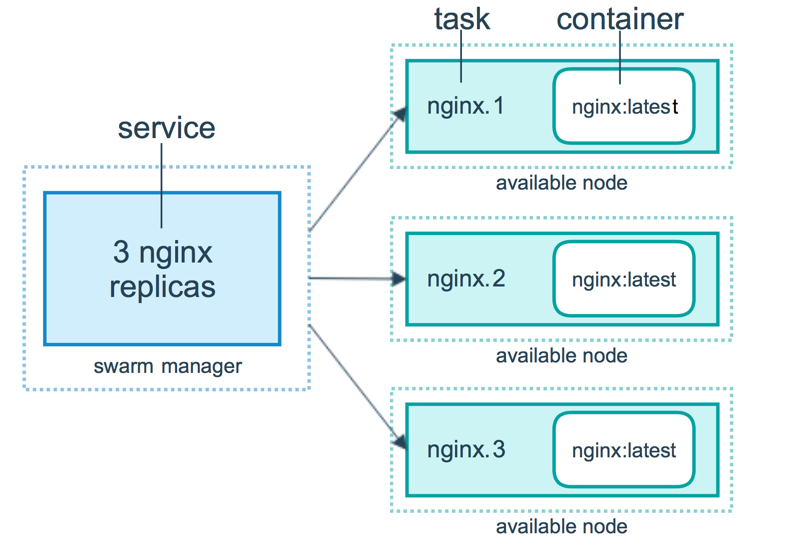

Docker Swarm uses a declarative model: you define the desired state of a service, and Docker maintains that state. The Docker Engine CLI is used to create a swarm of Docker engines where application services can be deployed. Docker Enterprise Edition has also integrated Kubernetes alongside Swarm, giving you flexibility in the choice of orchestration engine.

Docker commands are used to interact with the cluster. Machines that join the cluster are known as nodes, and the Swarm manager handles the activities of the cluster.
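The idea of "declare the desired state and let the orchestrator maintain it" can be sketched in a few lines of Python. This is a toy model only, not Swarm's implementation: the `reconcile` function, the service names, and the action strings are all made up for illustration.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> List[str]:
    """Compare desired replica counts with what is running and return
    the actions needed to close the gap (hypothetical action strings)."""
    actions = []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)
        have = running.get(service, 0)
        if have < want:
            actions.append(f"start {want - have} x {service}")
        elif have > want:
            actions.append(f"stop {have - want} x {service}")
    return actions


desired_state = {"web": 3, "worker": 2}
# Two web replicas have crashed and a removed service is still running.
observed_state = {"web": 1, "worker": 2, "old-job": 1}
print(reconcile(desired_state, observed_state))
```

An orchestrator runs this kind of comparison continuously, so a crashed container or a lost node simply shows up as a gap that the next pass closes.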

Docker Swarm consists of two main components:

- Manager – manager nodes assign tasks to worker nodes in the swarm. A leader is elected among the managers using the Raft consensus algorithm. The leader handles all swarm management and task orchestration decisions for the swarm.

- Worker – worker nodes receive tasks from the manager nodes and execute them.
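Because the managers rely on Raft, the swarm can only make decisions while a majority of managers is reachable. The arithmetic is simple enough to sketch in Python; the function names here are illustrative, not part of any Docker API.

```python
def quorum(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can be lost while a majority remains."""
    return managers - quorum(managers)


for n in (1, 3, 4, 5, 7):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Note that four managers tolerate no more failures than three, which is why an odd number of managers is the usual recommendation.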

Features

- Cluster management integrated with Docker Engine

- Decentralized design

- Declarative service model

- Scaling

- Desired state reconciliation

- Multi-host networking

- Service discovery

- Load balancing

- Secure by default

- Rolling updates

Docker Compose

Docker Compose is a tool for defining and running multi-container applications that work together. A Compose file describes groups of interconnected services that share software dependencies and are orchestrated and scaled together.

You use a YAML file (docker-compose.yml) to configure your application’s services. Then, with a single docker-compose up command, you create and start all the services from your configuration.

A docker-compose.yml file looks like this:

```yaml
version: '3'

# Named volume managed by Docker, used by the app service below
volumes:
  app_data:

services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.8.0
    ports:
      - 9200:9200
      - 9300:9300
    volumes:
      # Bind-mount local config, certificates, and data into the container
      - ./elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elastic-certificates.p12:/usr/share/elasticsearch/config/elastic-certificates.p12
      - ./docker-data-volumes/elasticsearch:/usr/share/elasticsearch/data

  kibana:
    # Start after the elasticsearch service
    depends_on:
      - elasticsearch
    image: docker.elastic.co/kibana/kibana:6.8.0
    ports:
      - 5601:5601
    volumes:
      - ./kibana.yml:/usr/share/kibana/config/kibana.yml

  app:
    depends_on:
      - elasticsearch
    image: asadali08527/app:latest
    ports:
      - 8080:8080
    volumes:
      - app_data:/var/lib/app/
```

You can use Docker Compose to factor the app code into several independently running services that communicate over an internal network. The tool provides a CLI for managing the entire lifecycle of your applications. Docker Compose has traditionally focused on development and testing workflows, but it is now gaining more production-oriented features.
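The `depends_on` entries in the file above determine start order: Compose starts a service only after the services it depends on have been started (by default it does not wait for them to be ready). As a rough illustration of that ordering, and not of Compose's actual code, the dependency map can be fed to a topological sort from Python's standard library. The `start_order` helper is a name invented for this sketch.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# service -> services it depends on, copied from the depends_on keys
# in the docker-compose.yml example
depends_on = {
    "elasticsearch": [],
    "kibana": ["elasticsearch"],
    "app": ["elasticsearch"],
}


def start_order(dependencies: dict) -> list:
    """Return one valid start order in which every service comes
    after all of the services it depends on."""
    return list(TopologicalSorter(dependencies).static_order())


print(start_order(depends_on))
```

Here elasticsearch always comes first, while kibana and app have no dependency on each other and may start in either order.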

The Docker Engine that Compose targets may be a stand-alone instance provisioned with Docker Machine or an entire Docker Swarm cluster.

Features

- Multiple isolated environments on a single host

- Preserve volume data when containers are created

- Only recreate containers that have changed

- Variables and moving a composition between environments

Minikube

Minikube allows users to run Kubernetes locally. With Minikube, you can test applications locally inside a single-node Kubernetes cluster on your personal computer. Minikube has integrated support for the Kubernetes Dashboard.

Minikube runs the latest stable release of Kubernetes and supports the following features.

- Load Balancing

- Multi-cluster

- Persistent Volumes

- NodePorts

- ConfigMaps and Secrets

- Container Runtime: Docker, CRI-O, and containerd

- Enabling CNI (Container Network Interface)

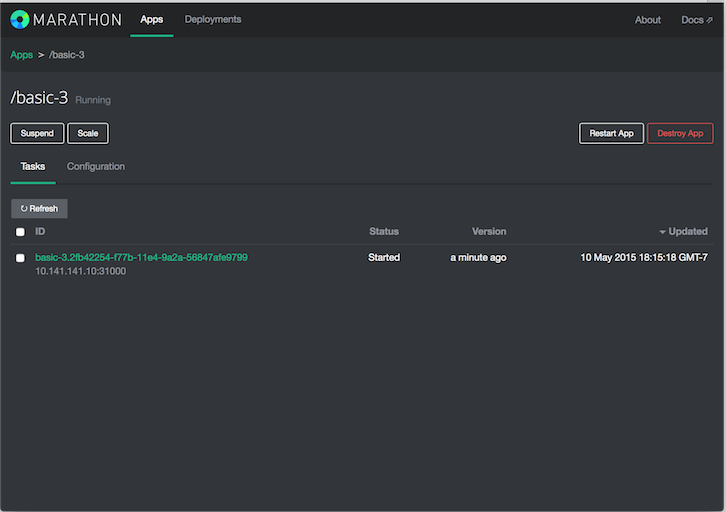

Marathon

Marathon is a container orchestration framework for Apache Mesos that can orchestrate apps as well as other frameworks.

Apache Mesos is an open-source cluster manager from the Apache Software Foundation that can run both containerized and non-containerized workloads. The major components of a Mesos cluster are the Mesos master, Mesos agent nodes, ZooKeeper, and frameworks. Frameworks coordinate with the master to schedule tasks onto agent nodes, and users interact with the Marathon framework to schedule jobs.

The Marathon scheduler uses ZooKeeper to locate the current master and submit tasks to it. Both the Marathon scheduler and the Mesos master run standby instances to ensure high availability. Clients interact with Marathon using its REST API.
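As a small illustration of that REST interaction, the Python sketch below builds a request that would create an app through Marathon's `/v2/apps` endpoint. It only constructs the request and does not send it. The host name `marathon.example.com`, the app id, and the resource values are placeholders chosen for this example, and `build_create_app_request` is a hypothetical helper, not part of any Marathon client library.

```python
import json
import urllib.request


def build_create_app_request(marathon_url: str, app: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request to Marathon's /v2/apps endpoint."""
    return urllib.request.Request(
        url=f"{marathon_url.rstrip('/')}/v2/apps",
        data=json.dumps(app).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder app definition: two instances of a long-running shell command.
app_definition = {
    "id": "/hello-sleep",
    "cmd": "sleep 3600",
    "cpus": 0.1,
    "mem": 32,
    "instances": 2,
}

request = build_create_app_request("http://marathon.example.com:8080", app_definition)
print(request.get_method(), request.full_url)
# To actually submit it against a real cluster: urllib.request.urlopen(request)
```

Changing `instances` in the definition and resubmitting it is how a client asks Marathon to scale the app.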

Features

- High Availability

- Stateful apps

- Beautiful and powerful UI

- Constraints

- Service Discovery & Load Balancing

- Health Checks

- Event Subscription

- Metrics

- REST API

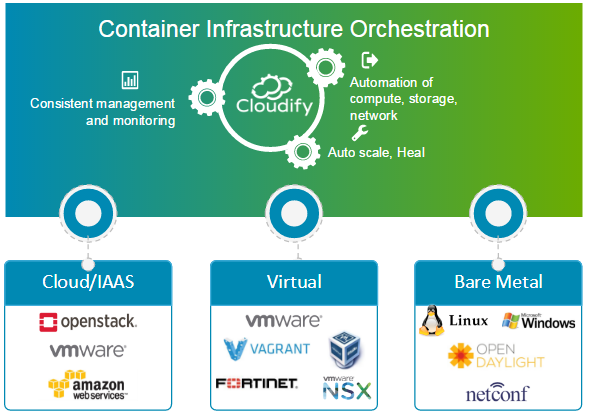

Cloudify

Cloudify is an open-source cloud orchestration tool for deployment automation and lifecycle management of containers and microservices. It provides features such as clusters on-demand, auto-healing, and scaling at the infrastructure level. Cloudify can manage container infrastructure and orchestrate the services that run on container platforms.

It can be easily integrated with Docker and Docker-based container managers, including the following.

- Docker

- Docker Swarm

- Docker Compose

- Kubernetes

- Apache Mesos

Cloudify can help to create, heal, scale, and tear down container clusters. Container orchestration is key in providing a scalable and highly-available infrastructure on which container managers can run. Cloudify provides the ability to orchestrate heterogeneous services across platforms. You can deploy applications using the CLI and Cloudify Manager.

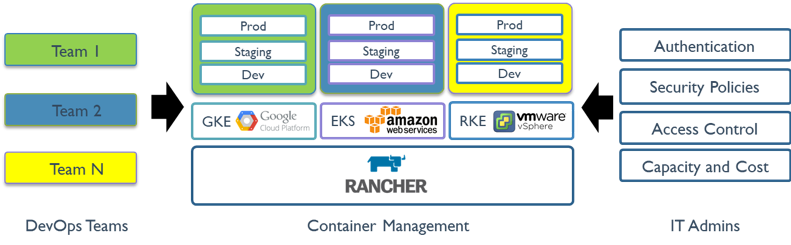

Rancher

Rancher is an open-source container management platform. Rancher 1.x shipped with its own orchestrator, known as Cattle, and also let you use orchestration services like Kubernetes, Swarm, and Mesos. Rancher provides the software required to manage containers so that organizations don’t need to build container services platforms from scratch using a distinct set of open-source technologies.

Rancher 2.x allows the management of Kubernetes clusters running on the customer-specified providers.

Getting started with Rancher is a two-step process.

Prepare a Linux Host

Prepare a Linux host with 64-bit Ubuntu 16.04 or 18.04 (or another supported Linux distribution) and at least 4GB of memory. Install a supported version of Docker on the host.

Start the server

To install and run Rancher, execute the following Docker command on your host:

```shell
# Run the Rancher server in the background, restart it unless explicitly stopped,
# and publish HTTP (80) and HTTPS (443) on the host.
sudo docker run -d --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher
```

The Rancher user interface allows the management of thousands of Kubernetes clusters and nodes.

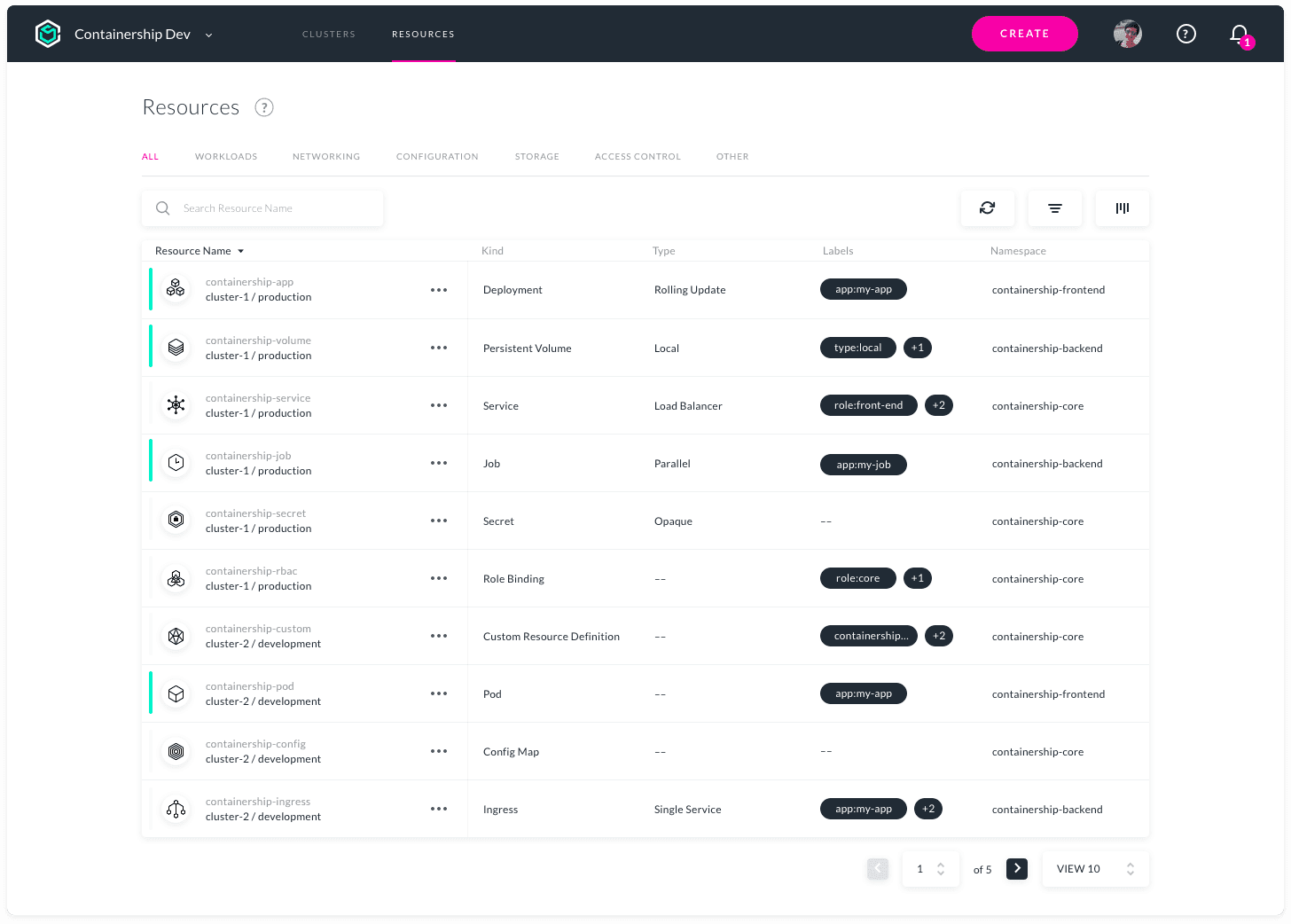

Containership

Containership enables the deployment and management of multi-cloud Kubernetes infrastructure. It can operate in public cloud, private cloud, and on-premises environments from a single tool, and lets you provision, manage, and monitor your Kubernetes clusters across all major cloud providers.

Containership is built using cloud-native tools, like Terraform for provisioning, Prometheus for monitoring, and Calico for networking and policy management. It is built on top of vanilla Kubernetes. The Containership platform offers an intuitive dashboard, as well as a powerful REST API for complex automation.

Features

- Multicloud Dashboard

- Audit Logs

- GPU Instance Support

- Non-disruptive Upgrades

- Schedulable Masters

- Integrated Metrics

- Realtime Logging

- Zero-downtime Deployments

- Persistent Storage Support

- Private Registry Support

- Workload Autoscaling

- SSH Key Management

AZK

AZK is an open-source orchestration tool for development environments. Through a manifest file (the Azkfile.js), it helps developers install, configure, and run commonly used tools for developing web applications with different open-source technologies.

AZK uses containers instead of virtual machines. Containers are like virtual machines, but with better performance and lower consumption of physical resources.

Azkfile.js files can be reused to add new components or to create new ones from scratch. They can also be shared, which ensures parity among development environments on different programmers’ machines and reduces the chances of bugs during deployment.

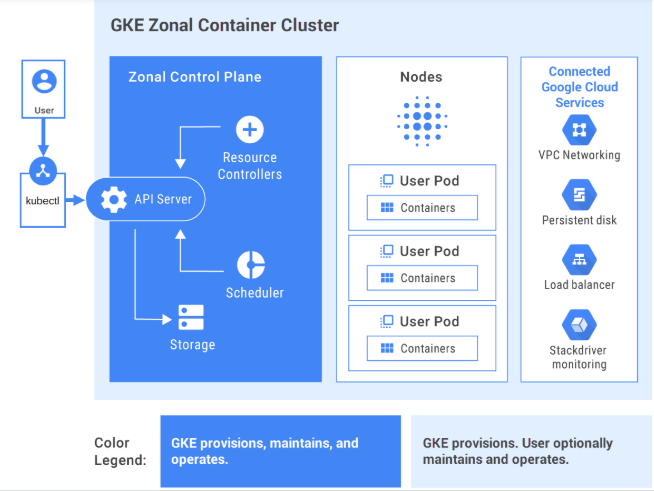

GKE

GKE provides a fully managed solution for orchestrating container applications on Google Cloud Platform. GKE clusters are powered by Kubernetes, and you can interact with them using the Kubernetes CLI. Kubernetes commands can be used to deploy and manage applications, perform administration tasks, set policies, and monitor the health of deployed workloads.

Advanced management features of Google Cloud are also available with GKE clusters, such as Google Cloud’s load balancing, node pools, node autoscaling, automatic upgrades, node auto-repair, and logging and monitoring with Google Cloud’s operations suite.

Google Cloud provides CI/CD tools to help you build and serve application containers. You can use Cloud Build to build container images (such as Docker) from a variety of source code repositories, and Container Registry to store your container images.

GKE is an enterprise-ready solution with prebuilt deployment templates.

Interested in learning GKE? Check out this beginner course.

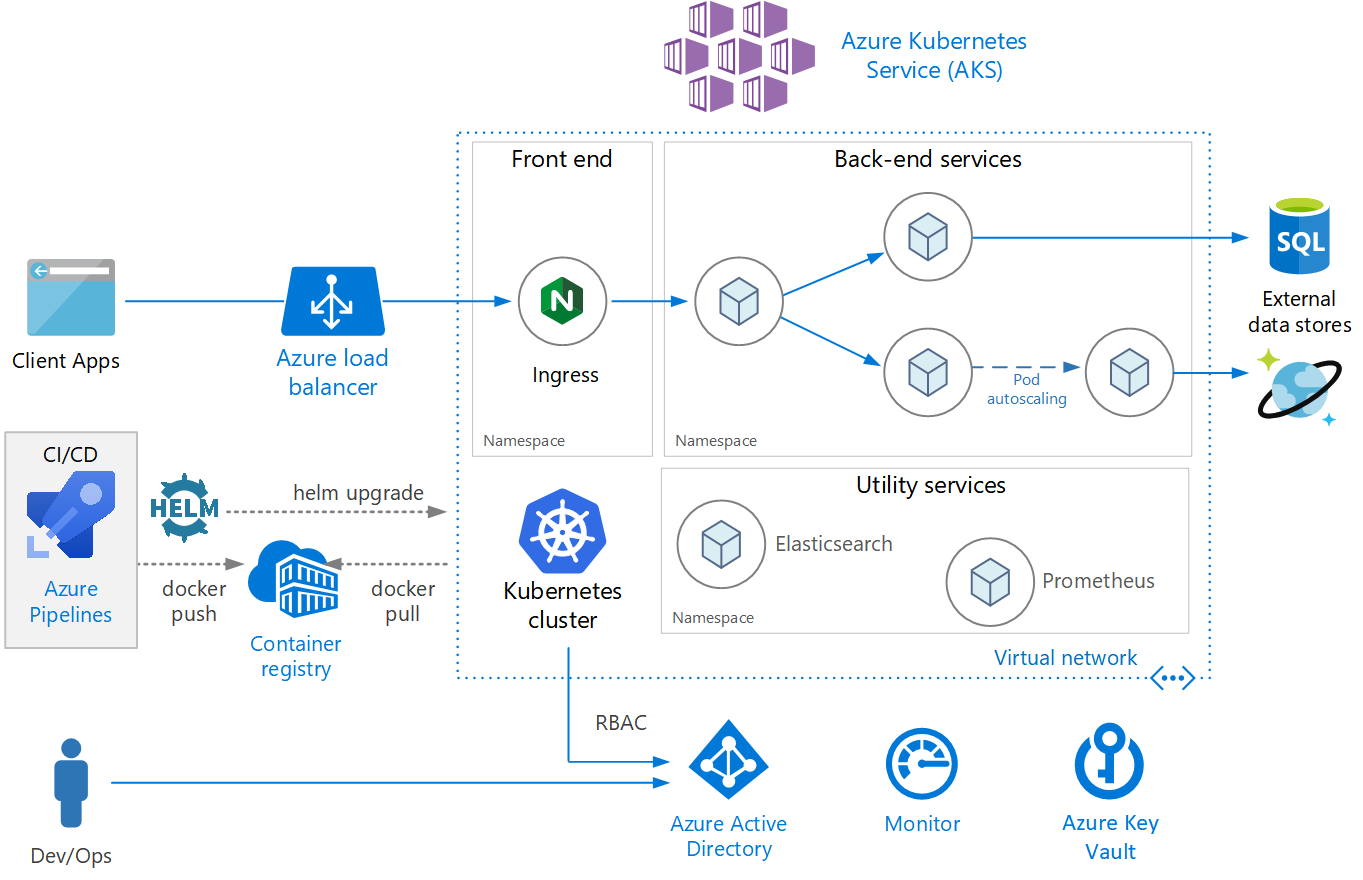

AKS

AKS is a fully managed Kubernetes service offered by Azure, with serverless Kubernetes, security, and governance features. AKS manages your Kubernetes cluster and lets you easily deploy containerized applications. It automatically configures all Kubernetes masters and nodes, so you only need to manage and maintain the agent nodes.

AKS itself is free; you pay only for the agent nodes in your cluster, not for the masters. You can create an AKS cluster in the Azure portal or programmatically. Azure also supports additional features such as advanced networking, Azure Active Directory integration, and monitoring using Azure Monitor.

AKS also supports Windows Server containers. The performance of the cluster and of deployed applications can be monitored with Azure Monitor, and logs are stored in an Azure Log Analytics workspace.

AKS has been certified as Kubernetes conformant.

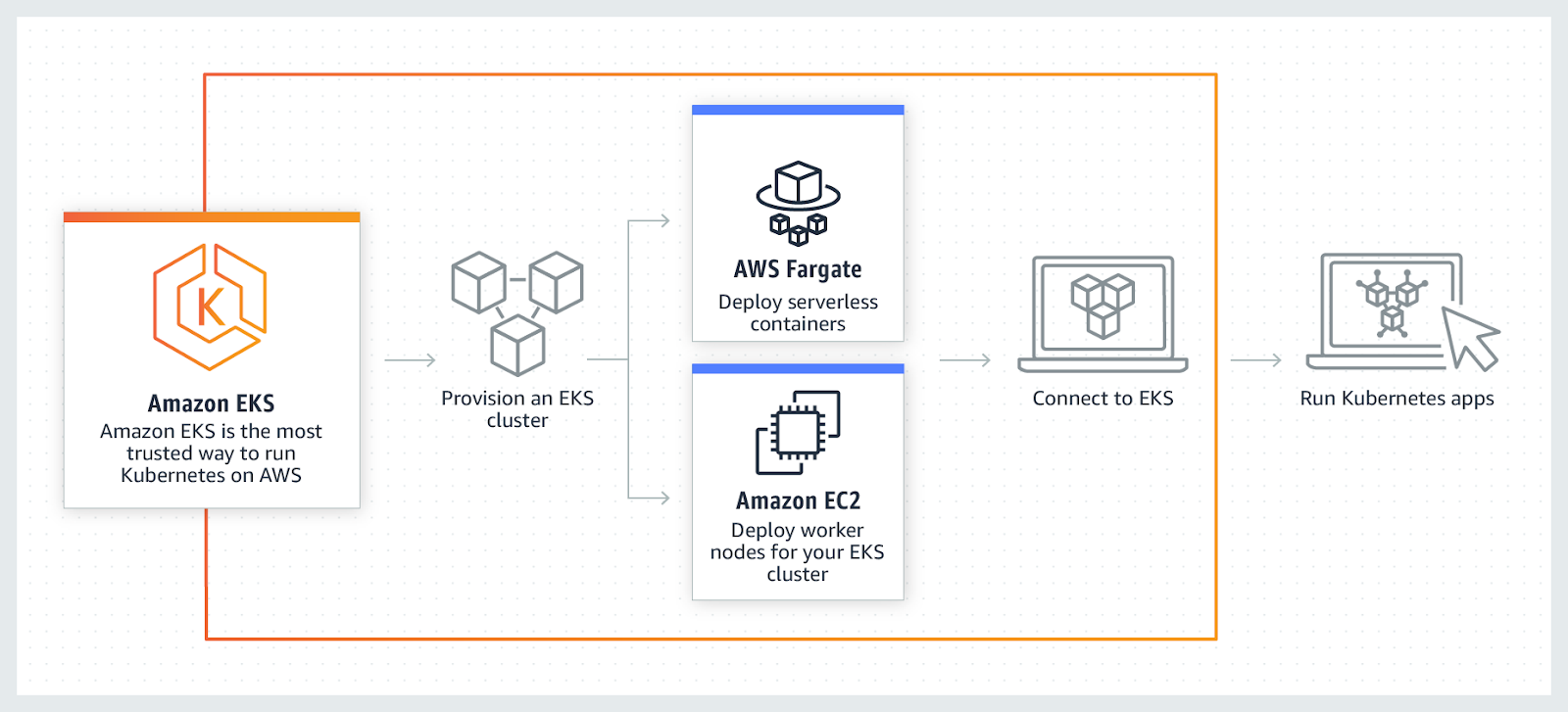

AWS EKS

AWS EKS is a fully managed Kubernetes service. AWS allows you to run your EKS cluster using AWS Fargate, a serverless compute engine for containers. Fargate removes the need to provision and manage servers and lets you pay for resources per application.

AWS lets you use additional services with EKS, such as Amazon CloudWatch, Auto Scaling groups, AWS Identity and Access Management (IAM), and Amazon Virtual Private Cloud (VPC), to monitor, scale, and load-balance applications. EKS integrates with AWS App Mesh and provides a Kubernetes-native experience. EKS runs upstream Kubernetes and is certified Kubernetes conformant.

Conclusion

I hope the list above has given you a fair understanding of the various container orchestration tools, and that it is now easier to pick the best one for your use case.
