Llama 2 is a family of pre-trained and fine-tuned large language models (LLMs) developed by Meta, available for personal and commercial use at no cost, with varying sizes to fit specific use cases and computational requirements.

Meta released the Llama series of LLMs following the popularity of OpenAI’s ChatGPT. Llama 2 is now freely available for anyone who wants to use and experiment with generative AI technology.

What is Llama 2?

Llama 2 is a collection of LLMs built by Meta. An LLM is a type of neural network trained to take natural-language input and generate natural-language output in response.

The foundational model, Llama 2, has been trained on two trillion tokens and comes in sizes ranging from 7 billion to 70 billion parameters. The fine-tuned variant, Llama 2 Chat, has been additionally optimized for dialogue using supervised fine-tuning and over 1 million new human annotations to improve safety and helpfulness.

Llama 2, although available for personal use, is primarily aimed at business professionals. It helps developers quickly build their own generative AI-based apps. In particular, the fine-tuned versions (Llama 2 Chat and Code Llama) let small businesses get started without spending resources on pretraining.

How Does Llama 2 Work?

Llama 2 is an autoregressive language model based on the transformer architecture. This means it takes a sequence of tokens as input and generates output one token at a time, with each new token conditioned on the prompt and on everything generated so far.
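The generation loop described above can be illustrated with a minimal sketch. The lookup table `TOY_MODEL` and the functions `next_token` and `generate` are hypothetical stand-ins invented for this example; a real model such as Llama 2 scores the next token with a transformer over the whole context rather than a table keyed on the last token.

```python
# Toy stand-in for a language model: maps the last token to scores for the next one.
# Hypothetical data for illustration only.
TOY_MODEL = {
    "<s>": {"llama": 0.9, "is": 0.1},
    "llama": {"is": 0.8, "2": 0.2},
    "2": {"is": 1.0},
    "is": {"open": 0.7, "llama": 0.3},
    "open": {"</s>": 1.0},
}


def next_token(context):
    """Greedy choice: pick the highest-scoring token given the context so far."""
    scores = TOY_MODEL[context[-1]]
    return max(scores, key=scores.get)


def generate(prompt, max_new_tokens=10):
    """Autoregressive loop: each generated token is appended to the context
    and fed back in before the next token is chosen."""
    context = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(context)
        if token == "</s>":  # end-of-sequence marker stops generation
            break
        context.append(token)
    return context


print(generate(["<s>"]))  # ['<s>', 'llama', 'is', 'open']
```

The key point is the feedback step: the output of one iteration becomes part of the input to the next, which is what "autoregressive" refers to.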

LLMs like Llama 2 and Llama 2 Chat handle everyday language well, which makes them suitable for natural language processing (NLP) tasks such as writing, coding, translation, and summarization, all within a simple chat interface.

The base model, Llama 2, needs fine-tuning to serve specific use cases. On the other hand, Llama 2 Chat has been optimized for dialogues, like its well-known competitor, ChatGPT.

System Requirements to Run Llama 2

To run Llama 2 effectively, Meta recommends using multiple ultra-high-end GPUs such as NVIDIA A100s or H100s and utilizing techniques like tensor parallelism. The specific hardware requirements depend on the desired speed and type of task.

Tensor parallelism is a way of running large-scale neural networks by splitting the computation inside individual layers, such as large matrix multiplications, across multiple devices.
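As a rough illustration of the idea, the sketch below splits one weight matrix column-wise across simulated devices, lets each compute its share of the output, and concatenates the results. The function names are hypothetical, the "devices" are plain Python lists, and real frameworks add communication and scheduling that this omits.

```python
def matmul(x, w):
    """Multiply a vector x (length n) by a matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_devices):
    """Give each simulated device a contiguous slice of the weight columns.
    Assumes the column count divides evenly by num_devices."""
    step = len(w[0]) // num_devices
    return [[row[d * step:(d + 1) * step] for row in w] for d in range(num_devices)]


def tensor_parallel_matmul(x, w, num_devices):
    shards = split_columns(w, num_devices)
    # On real hardware these partial products would run concurrently, one per device.
    partial_outputs = [matmul(x, shard) for shard in shards]
    # Gather step: concatenate the per-device output slices in order.
    return [value for part in partial_outputs for value in part]


x = [1.0, 2.0]
w = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]

print(tensor_parallel_matmul(x, w, num_devices=2))  # [11.0, 14.0, 17.0, 20.0]
```

Each device only needs to hold its own slice of the weights, which is why the technique helps when a model does not fit in the memory of a single GPU.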

Meta further states that Llama 2 can be run with Tensor Processing Units (TPUs) and also with inexpensive, standard hardware via optimization projects like llama.cpp and MLC LLM.
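A back-of-the-envelope calculation shows why hardware needs vary so much between the model sizes, and why reduced-precision formats make commodity hardware viable. The helper `weight_memory_gb` is a hypothetical function written for this example; it counts only the model weights, ignores activations and caches, and treats "4-bit" as exactly 4 bits per parameter, which real quantization formats only approximate.

```python
def weight_memory_gb(num_params_billion, bits_per_param):
    """Approximate memory for the model weights alone, in gigabytes (10^9 bytes)."""
    bytes_total = num_params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


for size in (7, 13, 70):
    fp16 = weight_memory_gb(size, 16)  # 16-bit floating point
    q4 = weight_memory_gb(size, 4)     # idealized 4-bit quantization
    print(f"{size}B: ~{fp16:.0f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

Under these assumptions the 70B model needs roughly 140 GB for 16-bit weights, which exceeds a single 80 GB GPU, while the 7B model at about 4 bits fits in a few gigabytes.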

On the software front, Llama 2 is officially supported only on Linux. Unofficial support exists for other operating systems, though. For instance, llama.cpp lets you run Llama 2 on macOS, Windows, Linux, and FreeBSD, and is also available as a Docker image. Likewise, MLC LLM supports Android and iOS in addition to the major desktop platforms.

Features of Llama 2

Llama 2 offers features such as language support, distribution, coding version, model variants, and more.

- Multi-language Support: Llama 2 is trained primarily on English text. Its training data covers 27 languages in total, but capability in languages other than English is limited. Community-driven projects are working to improve support for other languages.

- Accessibility and Distribution: Meta has released Llama 2 under a permissive license for free personal and commercial use. Businesses with fewer than 700 million monthly active users can use it for free, while larger ones need approval from Meta.

- Advanced Coding Capabilities: Code Llama, a coding-specific version of Llama 2, is purpose-built by Meta for programming. It has been released in three variants: a base model, a Python-specialized model, and an instruction-tuned model that better understands natural language instructions.

- Model Variants: Llama 2 is released in 7B, 13B, and 70B sizes (a 34B model was also trained but not released), giving ample choice for varying tasks and available compute.

- Deployment Flexibility: You can deploy Llama 2 on enterprise-grade hardware or commodity equipment. You can also use pre-configured Llama 2 setups from cloud service providers, including Microsoft Azure, AWS, and Google Cloud.

- Auto-regressive Capabilities: Llama 2 responds auto-regressively, allowing it to generate contextually relevant text. This makes it an AI model well-suited for story generation, conversation, and short-range content creation.

How to Use Llama 2?

There are multiple ways to use Llama 2, including locally, on the cloud, and with third-party platforms.

Local Install: This method involves several steps, such as requesting model access from Meta, downloading Python and satisfying other dependencies, loading the model, tokenizing the input, generating text, and fine-tuning (optional).
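The loading, tokenizing, and generating steps can be sketched as follows. This assumes the Hugging Face `transformers` library (with `torch` and `accelerate` installed) and the `meta-llama/Llama-2-7b-chat-hf` checkpoint, none of which the steps above prescribe; other toolchains work too. The function names `build_chat_prompt` and `generate_reply` are hypothetical, and the download only succeeds after Meta has approved your access request.

```python
def build_chat_prompt(user_message, system_prompt="You are a helpful assistant."):
    """Wrap a message in the [INST] / <<SYS>> template used by Llama 2 Chat.
    The tokenizer adds the beginning-of-sequence token itself."""
    return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message} [/INST]"


def generate_reply(user_message, model_id="meta-llama/Llama-2-7b-chat-hf", max_new_tokens=128):
    # Heavy imports live inside the function so the helper above works without them.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Load the tokenizer and the model weights (assumes approved, authenticated access).
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # half precision to reduce memory use
        device_map="auto",          # requires the `accelerate` package
    )

    # Tokenize the prompt and move the tensors to the model's device.
    inputs = tokenizer(build_chat_prompt(user_message), return_tensors="pt").to(model.device)

    # Generate token IDs, then decode only the newly generated part.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Summarize what an LLM is in one sentence.")` would then download the weights on first use and return the model's answer as a string.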

On the cloud: Using Llama 2 on Meta’s official partner, Azure, starts with locating it on the model catalog and selecting the preferred one from the collection after checking their model cards. Next, configure inference parameters, fine-tune the model, evaluate with test data, and deploy.

Third-party platforms: This is the simplest method to test how these models might perform. There are websites like llama2.ai that allow selecting the model, adjusting inference parameters and testing.
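Two inference parameters that such playgrounds commonly expose are temperature and top-p. The sketch below shows one way they can shape the choice of the next token; it is a simplified illustration with a hypothetical function name and made-up scores, not the code any particular platform runs.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Pick a token from a {token: logit} dict using temperature and
    nucleus (top-p) sampling. Temperature must be greater than zero."""
    # Temperature rescales the logits: lower values sharpen the distribution.
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    # Softmax, subtracting the maximum for numerical stability.
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    probs = sorted(((tok, e / total) for tok, e in exps.items()),
                   key=lambda pair: pair[1], reverse=True)
    # Top-p keeps the smallest set of most likely tokens whose mass reaches top_p.
    kept, mass = [], 0.0
    for tok, p in probs:
        kept.append((tok, p))
        mass += p
        if mass >= top_p:
            break
    tokens, weights = zip(*kept)
    return rng.choices(tokens, weights=weights, k=1)[0]


logits = {"cat": 2.0, "dog": 1.0, "emu": -1.0}  # made-up scores
print(sample_next_token(logits, temperature=0.7, top_p=0.9))
```

Lower temperature and lower top-p both make output more predictable; raising them makes it more varied at the cost of occasional off-topic tokens.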

Llama vs Llama 2: Differences You Should Know

Llama 2 is a significant upgrade over its predecessor, Llama. Here’s a table summarizing a few important differences between them.

| Attributes | Llama | Llama 2 |
|---|---|---|
| Release date | February 24, 2023 | July 18, 2023 |
| Training | 1-1.4 trillion tokens | 2 trillion tokens |
| Context length | 2048 Tokens | 4096 Tokens |
| Model variants | 7B, 13B, 33B, 65B | 7B, 13B, & 70B |
| Official fine-tuned models | No | Llama 2 Chat & Code Llama |
| Language support | 20 Languages | 27 Languages |
| Model training carbon footprint | 1,015 tCO2eq | 539 tCO2eq (compensated) |
| Native cloud availability | Not supported | Azure, AWS, GCP, etc. |

In addition, Llama 2 outperforms Llama on most NLP benchmarks, such as MMLU, TriviaQA, HumanEval, Natural Questions, and AGIEval, indicating better overall performance.

Comparing Llama 2, GPT, and Gemini: A Detailed Analysis of Features and Performance

Llama 2, GPT, and Gemini all belong to the same league of LLMs. The major difference between them is that GPT and Gemini are closed-source projects, while Llama 2 is an open-source project. This gives developers the freedom to build their applications on top of Meta’s LLM.

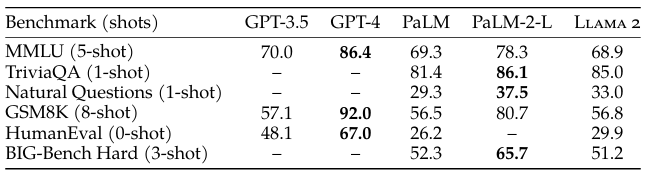

On the performance front, GPT-4 races ahead of Llama 2 and Gemini (PaLM 2) on benchmarks covering general knowledge (MMLU), basic mathematics (GSM8K), and coding (HumanEval).

Gemini performs impressively in research and analysis (TriviaQA), real-time information retrieval (Natural Questions), and high-level reasoning (BIG-Bench Hard).

Llama 2 performed on par with GPT-3.5, except on coding-related tasks. Overall, Llama 2 came out as a jack of all LLM trades without a single point of sheer brilliance. The major incentive for deploying Llama 2 remains that it is open source and free.

Go With Llama 2!

Meta has made LLM technology more accessible with Llama 2, allowing businesses to integrate pre-trained models into their applications. However, some individuals may switch to competitors due to the simplified interface and limited geographic availability of Meta AI.

Llama 2 is primarily aimed at businesses but requires further improvement before becoming popular. It can also be integrated with Meta’s metaverse apps to enhance conversations and interactions in virtual environments.

Frequently Asked Questions

What can Llama 2 be used for?

Llama 2 can be used for building generative AI-based applications, similar to ChatGPT.

Is Llama 2 better than GPT-4?

No. GPT-4 is better than Llama 2 at answering domain-specific queries across multiple fields, at mathematics, and at coding tasks.

Is Llama 2 free?

Yes. Llama 2 is free for personal and commercial use, but organizations with more than 700 million active users per month need to contact Meta for additional terms.

Can Llama 2 be used commercially?

Yes, Llama 2 is available for commercial use. A business can deploy Llama 2 on-premises or using cloud services like Microsoft Azure, AWS, and Google Cloud.

Which languages does Llama 2 support?

Llama 2 has been trained in 27 languages, but its support for languages other than English is limited. Community projects are working on additional language support.

Which operating systems does Llama 2 support?

Official support for Llama 2 is limited to Linux, but there are projects like llama.cpp and MLC LLM that allow it to run on other operating systems, such as Windows and macOS.

How does the Llama 2 architecture differ from Llama 1?

Both generations are transformer-based, but Llama 2 differs in model sizes, the amount of training data, and context length. Additionally, larger Llama 2 models use Grouped-Query Attention for better inference scalability.
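To make the Grouped-Query Attention point concrete, the sketch below shows how several query heads can share one key/value head and how that shrinks the per-token cache kept during inference. The function names are hypothetical, and the configuration values (80 layers, 64 query heads, 8 key/value heads, head dimension 128, 2 bytes per value) are assumptions chosen to resemble the 70B model rather than figures taken from this article.

```python
def kv_head_for_query_head(query_head, num_query_heads, num_kv_heads):
    """Map a query head to the key/value head shared by its group."""
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Keys and values (the factor of 2) cached for one token across all layers."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


# Assumed configuration for illustration only.
LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM = 80, 64, 8, 128

# Query heads 0-7 share key/value head 0, heads 8-15 share head 1, and so on.
print([kv_head_for_query_head(h, Q_HEADS, KV_HEADS) for h in (0, 7, 8, 63)])  # [0, 0, 1, 7]

full_attention = kv_cache_bytes_per_token(LAYERS, Q_HEADS, HEAD_DIM)  # one KV head per query head
grouped = kv_cache_bytes_per_token(LAYERS, KV_HEADS, HEAD_DIM)        # shared KV heads
print(full_attention // grouped)  # 8
```

Under these assumptions the cache is eight times smaller than with one key/value head per query head, which is where the inference scalability benefit comes from.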


Editor: Narendra Mohan Mittal is a senior editor & writer at Geekflare. He is an experienced content manager with extensive experience in digital branding strategies.
