If you’re interested in AI, this article will certainly help you dive deep into its intricacies. I’m here to guide you on your journey into the realm of LLMOps platforms and provide clarity on the crucial tools offered for testing, improving, and deploying LLMs.

The platforms featured in this prominent listicle play a pivotal role in unlocking the full potential of Language Models, presenting innovative solutions for development and management.

Esteemed organizations like Google, OpenAI, and Microsoft harness LLMOps platforms to ensure thorough testing, continuous refinement, and effective deployment of their language models, resulting in reliable and precise outcomes.

Recognizing that LLMOps might be new to many, let’s begin by gaining a basic understanding of LLMOps: its significance, functions, and benefits.

With this foundation, we can then proceed to our primary objective – identifying the optimal tools for our readers based on their specific requirements. The prime listicle embedded within this article serves as a guide towards achieving this goal.

What is LLMOps?

LLMOps stands for Language Model Operations. It’s about managing, deploying, and improving large language models like those used in AI. LLMOps involves tools and processes to train, test, and maintain these models, ensuring they work well and stay accurate over time.

Although LLMs are easy to prototype, using them in commercial products poses challenges. The LLM development cycle includes intricate steps like data prep, model tuning, and deployment, requiring seamless teamwork. LLMOps covers this cycle, ensuring smooth experimentation, deployment, and enhancement.

And finally, I would like you to understand what LLMOps Platform is since it will provide you with accurate clarity, and moving on this path will definitely give you a good outcome after reading it.

The LLMOps platform fosters collaboration among data scientists and engineers, aiding iterative data exploration. It enables real-time co-working, experiment tracking, model management, and controlled LLM deployment. LLMOps automates operations, synchronization, and monitoring across the ML lifecycle.

How does LLMOps work?

LLMOps platforms simplify the entire lifecycle of language models. They centralize data preparation, enable experimentation, and allow fine-tuning for specific tasks. These platforms also facilitate smooth deployment, continuous monitoring, and seamless version transitioning.

Collaboration is promoted, errors are minimized through automation and ongoing refinement is supported. In essence, LLMOps optimizes language model management for diverse applications.

Benefits of LLMOps

The primary advantages I find significant include efficiency, accuracy, and scalability. Here’s an elaborated version of the benefits that LLMOps offers:

- Efficiency: LLMOps platforms optimize the complete cycle of language model development, testing, and deployment, leading to time and effort savings.

- Collaboration: These platforms foster seamless cooperation between data scientists, engineers, and stakeholders, promoting effective teamwork.

- Accuracy: LLMOps maintain and enhance model accuracy over time by continuously monitoring and refining the models.

- Automation: LLMOps automates several tasks, including data preprocessing and monitoring, reducing the need for manual intervention.

- Scalability: By effectively scaling up models, LLMOps platforms can easily accommodate increased workloads or demands.

- Deployment Ease: LLMOps ensure models are smoothly integrated into applications or systems, minimizing challenges related to deployment.

In essence, LLMOps improve efficiency, accuracy, and scalability while promoting collaboration, automation, and seamless deployment.

Now, let’s move forward to our list of platforms. This list is a guide from Geekflare, but the decision to choose the best one for you, based on your requirements and needs, is in your hands.

Dify

Are you intrigued by the rapid advancements in LLM technologies like GPT-4 and excited about their practical potential? Dify is designed to cater to you. It empowers developers and even those without a strong technical background to quickly create valuable applications using extensive language models. These applications aren’t just user-friendly; they’re set for ongoing enhancement.

Key Features:

- User-Friendly LLMOps Platform: Effortlessly develop AI applications using GPT-4 and manage them visually.

- Contextual AI with Your Data: Utilize documents, web content, or Notion notes as AI context. Dify handles preprocessing and more, saving you development time.

- Unleash LLM’s Potential: Dify ensures seamless model access, context embedding, cost control, and data annotation for smooth AI creation.

- Ready-Made Templates: Choose from dialogue and text generation templates, ready to customize for your specific applications.

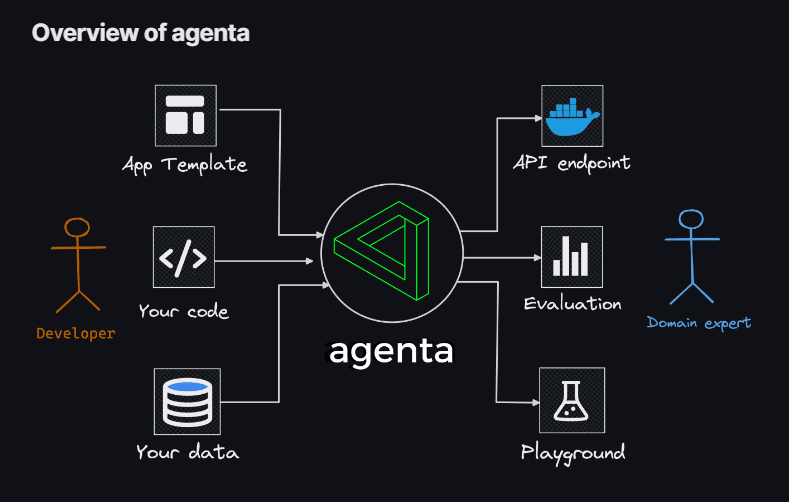

Agenta

If you’re looking for the flexibility to use coding for crafting LLM apps, free from the constraints of models, libraries, or frameworks, then Agenta is your solution. Agenta emerges as an open-source, end-to-end platform designed to streamline the process of bringing complex Large Language Model applications (LLM apps) into production.

With Agenta, you can swiftly experiment and version prompts, parameters, and intricate strategies. This encompasses in-context learning with embeddings, agents, and custom business logic.

Key Features:

- Parameter Exploration: Specify your application’s parameters directly within your code and effortlessly experiment with them through an intuitive web platform.

- Performance Assessment: Evaluate your application’s efficacy on test sets using a variety of methodologies like exact match, AI Critic, human evaluation, and more.

- Testing Framework: Create test sets effortlessly using the user interface, whether it’s by uploading CSVs or seamlessly connecting to your data through our API.

- Collaborative Environment: Foster teamwork by sharing your application with collaborators and inviting their feedback and insights.

- Effortless Deployment: Launch your application as an API in a single click, streamlining the deployment process.

Furthermore, Agenta fosters collaboration with domain experts for prompt engineering and evaluation. Another highlight is Agenta’s capability to systematically evaluate your LLM apps and facilitate one-click deployment of your application.

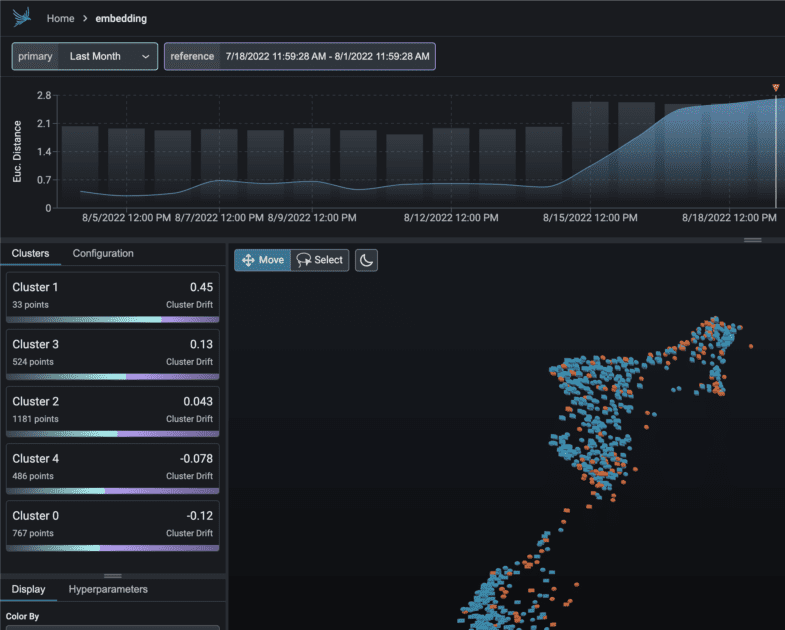

Phoenix

Embark on an instant journey into MLOps insights powered by Phoenix. This ingenious tool seamlessly unlocks observability into model performance, drift, and data quality, all without the burden of intricate configurations.

As an avant-garde notebook-centric Python library, Phoenix harnesses the potency of embeddings to unearth concealed intricacies within LLM, CV, NLP, and tabular models. Elevate your models with the unmatched capabilities that Phoenix brings to the table.

Key Features:

- Embedded Drift Investigation: Dive into UMAP point clouds during instances of substantial Euclidean distance and pinpoint drift clusters.

- Drift and Performance Analysis via Clustering: Deconstruct your data into clusters of significant drift or subpar performance through HDBSCAN.

- UMAP-Powered Exploratory Data Analysis: Shade your UMAP point clouds based on your model’s attributes, drift, and performance, unveiling problematic segments.

LangKit

LangKit stands as an open-source toolkit for text metrics designed to monitor large language models effectively.

The driving force behind the creation of LangKit stems from the realization that transforming language models, including LLMs, into production entails various risks. The countless potential input combinations, leading to equally numerous outputs, pose a considerable challenge.

Key Features:

- Prompt Injection Analysis: Gauge similarity scores with recognized fast injection attacks.

- Sentiment Analysis: Assess the sentiment tone within the text.

- Text Quality Assessment: Evaluate readability, complexity, and grade scores.

- Jailbreak Detection: Identify similarity scores with known jailbreak attempts.

- Toxicity Analysis: Detects levels of toxicity in the provided content.

The unstructured nature of text further complicates matters in the realm of ML observability – a challenge that merits resolution. After all, the absence of insight into a model’s behavior can yield significant repercussions.

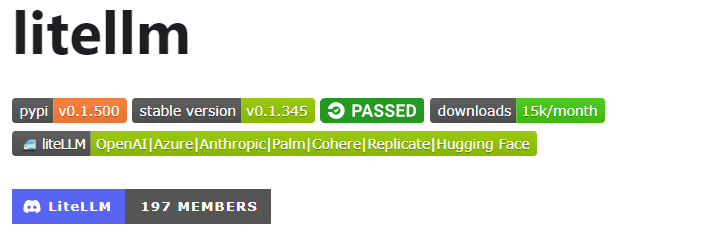

LiteLLM

With LiteLLM, simplify your interactions with various LLM APIs – Anthropic, Huggingface, Cohere, Azure OpenAI, and more – using a lightweight package in the OpenAI format.

This package streamlines the process of calling API endpoints from providers like OpenAI, Azure, Cohere, and Anthropic. It translates inputs to the relevant provider’s completion and embedding endpoints, ensuring uniform output. You can always access text responses at [‘choices’][0][‘message’][‘content’].

Key Features:

- Streamlined LLM API Calling: Simplifies interaction with LLM APIs like Anthropic, Cohere, Azure OpenAI, etc.

- Lightweight Package: A compact solution for calling OpenAI, Azure, Cohere, Anthropic, and API endpoints.

- Input Translation: Manages translation of inputs to respective provider’s completion and embedding endpoints.

- Exception Mapping: Maps common exceptions across providers to OpenAI exception types for standardized error handling.

Additionally, the package includes an exception mapping feature. It aligns standard exceptions across different providers with OpenAI exception types, ensuring consistency in handling errors.

LLM-App

Embark on the journey of crafting your unique Discord AI chatbot, enriched with the prowess of answering questions, or dive into exploring similar AI bot ideas. All these captivating functionalities converge through the LLM-App.

I am presenting Pathways LLM-App – a Python library meticulously designed to accelerate the development of groundbreaking AI applications.

Key Features:

- Crafted for Local ML Models: LLM App is configured to run with on-premise ML models, staying within the organization’s boundaries.

- Real-time Data Handling: This library adeptly manages live data sources, including news feeds, APIs, and Kafka data streams, with user permissions and robust security.

- Smooth User Sessions: The library’s query-building process efficiently handles user sessions, ensuring seamless interactions.

This exceptional asset empowers you to deliver instantaneous responses that mirror human interactions when addressing user queries. It accomplishes this remarkable feat by effectively drawing on the latest insights concealed within your data sources.

LLMFlows

LLMFlows emerges as a framework tailored to simplify, clarify, and bring transparency to developing Large Language Model (LLM) applications like chatbots, question-answering systems, and agents.

The complexity can be amplified in real-world scenarios due to intricate relationships between prompts and LLM calls.

The creators of LLMFlows envisioned an explicit API that empowers users to craft clean and understandable code. This API streamlines the creation of intricate LLM interactions, ensuring seamless flow among various models.

Key Features:

- Seamlessly configure LLM classes, meticulously selecting specific models, parameters, and settings.

- Ensure robust LLM interactions with automatic retries upon model call failures, assuring reliability.

- Optimize performance and efficiency by utilizing Async Flows for parallel execution of LLMs when inputs are available.

- Infuse personalized string manipulation functions directly into flows, facilitating tailored text transformations beyond LLM calls.

- Maintain complete control and oversight over LLM-powered applications with callbacks, offering comprehensive monitoring and visibility into execution processes.

LLMFlows’ classes provide users with unbridled authority without hidden prompts or LLM calls.

Promptfoo

Accelerate evaluations through caching and concurrent testing using promptfoo. It provides command-line interface (CLI) and a library, empowers the assessment of LLM output quality.

Key Features:

- Battle-Tested Reliability: Promptfoo was meticulously crafted to evaluate and enhance LLM apps that cater to over 10 million users in a production environment. The tooling provided is flexible and adaptable to various setups.

- User-Friendly Test Cases: Define evaluations without coding or grappling with cumbersome notebooks. A simple, declarative approach streamlines the process.

- Language Flexibility: Whether you’re using Python, Javascript, or any other language, promptfoo accommodates your preference.

Moreover, promptfoo enables systematic testing of prompts against predefined test cases. This aids in evaluating quality and identifying regressions by facilitating direct side-by-side comparison of LLM outputs.

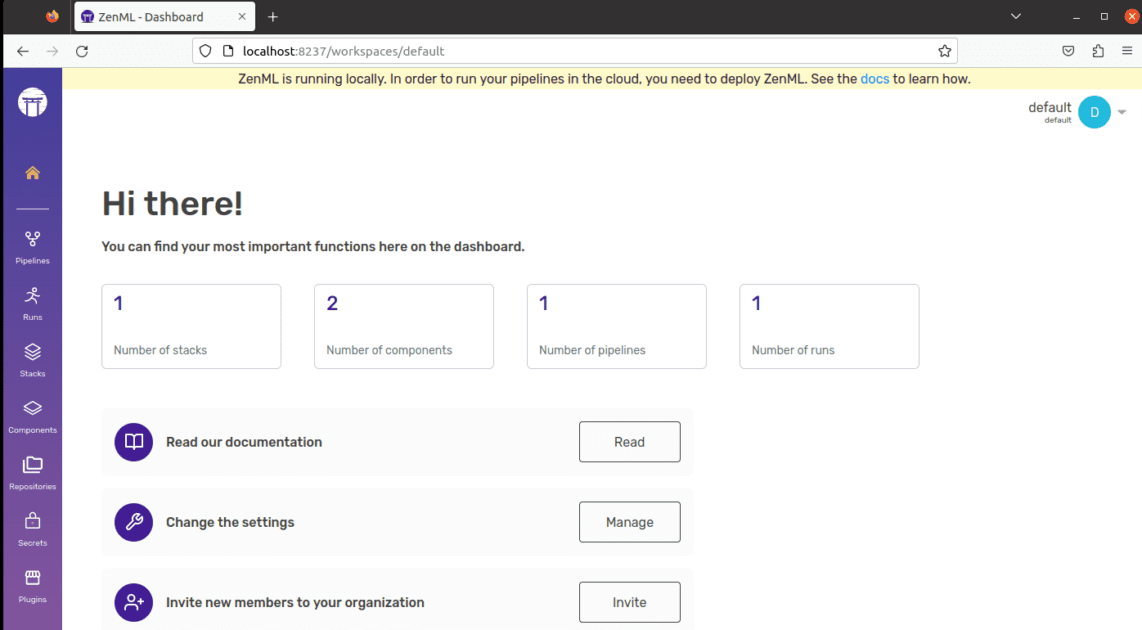

ZenML

Say hello to ZenML – an adaptable, open-source tool designed to make the world of machine learning pipelines easier for professionals and organizations. Imagine having a tool that lets you create machine learning pipelines ready for real-world use, no matter how complex your project is.

ZenML separates the technical stuff from the code, making it more straightforward for developers, data scientists, MLOps experts, and ML engineers to work together. This means your projects can move from the idea stage to being ready for action more smoothly.

Key Features:

- For Data Scientists: Focus on creating and testing models while ZenML prepares your code for real-world use.

- For MLOps Infrastructure Experts: Set up, manage, and deploy complex systems quickly so your colleagues can use them without hassle.

- For ML Engineers: Handle every step of your machine learning project, from start to finish, with the help of ZenML. This means less handing off work and more clarity in your organization’s journey.

ZenML is made for everyone – whether you’re a professional or part of an organization. It comes with a way of writing code designed for machine learning tasks, and it works well with any cloud service or tool you use. Plus, it helps you manage your project in one place, so you don’t have to worry about juggling different things. Just write your code once and use it easily on other systems.

Final Thought

In this exhilarating odyssey, always keep in mind that each platform presents a distinct key capable of unlocking your AI aspirations. Your selection holds the power to shape your path, so choose wisely!

You may also explore some AI Tools for developers to build apps faster.