Want to download files from a URL using Python? Let’s learn the different ways to do so.

When you’re working on a Python project, you may need to download files from the web—from a specific URL.

You can download them manually into your working environment. However, it is more convenient to download files from their URLs programmatically within a Python script.

In this tutorial, we’ll cover the different ways to download files from the web with Python—using both built-in and third-party Python packages.

How to Use Python for Downloading Files from a URL

If you’re familiar with Python, you’ve probably come across this popular XKCD Python comic:

As an example, we’ll download this XKCD comic, a PNG image, into our working directory using various methods.

Throughout the tutorial, we’ll be working with several third-party Python packages. Install them all in a dedicated virtual environment for your project.

Using urllib.request

You can use Python’s built-in urllib.request module to download files from a URL. This built-in module comes with functionality for making HTTP requests and handling URLs. It provides a simple way to interact with web resources, supporting tasks like fetching data from websites.

Let’s download the XKCD Python comic from its URL using urllib.request:

    import urllib.request

    url = 'https://imgs.xkcd.com/comics/python.png'
    urllib.request.urlretrieve(url, 'xkcd_comic.png')

Here we do the following:

- Import the `urllib.request` module.
- Set the URL of the XKCD Python comic image.
- Use `urllib.request.urlretrieve` to download the image and save it as ‘xkcd_comic.png’ in the current directory.
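The Python documentation describes `urlretrieve` as a legacy interface, so here is a minimal sketch of the same download using `urllib.request.urlopen` and `shutil.copyfileobj` instead. The `download` helper name, the 30-second timeout, and the error handling are illustrative assumptions and not part of the example above.

```python
import shutil
import urllib.error
import urllib.request


def download(url, dest, timeout=30):
    """Stream the resource at `url` into the file `dest` (hypothetical helper)."""
    try:
        # urlopen returns a file-like response; copyfileobj copies it in chunks
        with urllib.request.urlopen(url, timeout=timeout) as response, \
                open(dest, 'wb') as out_file:
            shutil.copyfileobj(response, out_file)
    except urllib.error.URLError as err:
        # HTTPError (for example a 404) is a subclass of URLError
        print(f'Download failed: {err}')
        return False
    return True


# Example call (requires network access):
# download('https://imgs.xkcd.com/comics/python.png', 'xkcd_comic.png')
```

Returning `True` or `False` keeps the sketch short; in a larger script you may prefer to let the exception propagate.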

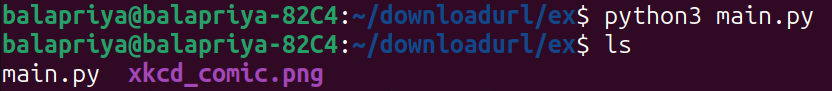

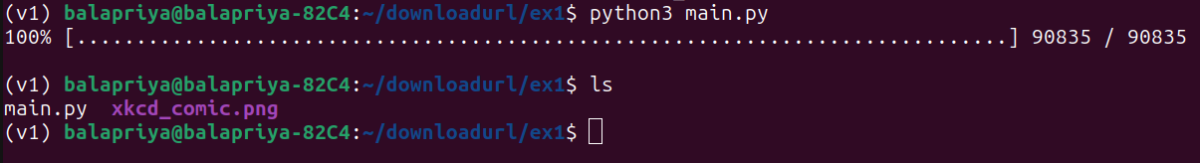

If you now run the ls command at the terminal to view the contents of the current directory, you’ll see the ‘xkcd_comic.png’ file:

Using the Requests Library

Requests is one of the most popular and most-downloaded Python packages. You can use it to send HTTP requests and retrieve content from the web.

First, install the requests library:

    pip install requests

If you’ve created a new Python script in the same directory, delete ‘xkcd_comic.png’ before running the current script.

    import requests

    url = 'https://imgs.xkcd.com/comics/python.png'
    response = requests.get(url)

    with open('xkcd_comic.png', 'wb') as file:
        file.write(response.content)

Let’s break down what we’ve done in this approach:

- Import the `requests` library.
- Set the URL of the XKCD Python comic image.
- Send a GET request to the URL using `requests.get`.
- Save the content of the response (the image data) as ‘xkcd_comic.png’ in binary write mode.
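The snippet above writes whatever the server returns, even an error page. As a hedged sketch, the variant below adds a timeout and calls `raise_for_status()` so that a 4xx or 5xx response raises an exception instead of being saved. The function name `download_with_requests` and the 10-second timeout are assumptions made for illustration.

```python
import requests


def download_with_requests(url, dest, timeout=10):
    """Save the body of `url` to `dest`, failing loudly on HTTP errors."""
    # timeout stops the call from waiting forever on an unresponsive server
    response = requests.get(url, timeout=timeout)
    # raise_for_status() raises requests.HTTPError for 4xx and 5xx responses
    response.raise_for_status()
    with open(dest, 'wb') as file:
        file.write(response.content)
    return len(response.content)


# Example call (requires network access):
# download_with_requests('https://imgs.xkcd.com/comics/python.png', 'xkcd_comic.png')
```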

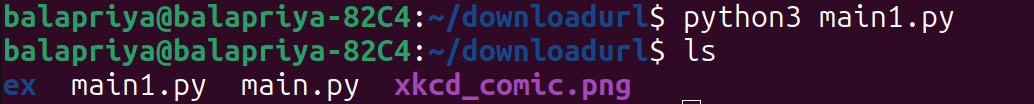

And you should see the downloaded image when printing out the contents of the directory:

Using urllib3

We’ve seen how to use the built-in urllib.request. But you can also use the third-party Python package urllib3.

Urllib3 is a Python library for making HTTP requests and managing connections in a more reliable and efficient manner than the built-in urllib module. It provides features like connection pooling, request retries, and thread safety, making it a robust choice for handling HTTP communication in Python applications.

Install urllib3 using pip:

    pip install urllib3

Now let’s download the XKCD Python comic using the urllib3 library:

    import urllib3

    # URL of the XKCD comic image
    url = 'https://imgs.xkcd.com/comics/python.png'

    # Create a PoolManager instance
    http = urllib3.PoolManager()

    # Send an HTTP GET request to the URL
    response = http.request('GET', url)

    # Retrieve the content (image data)
    image_data = response.data

    # Specify the file name to save the comic as
    file_name = 'xkcd_comic.png'

    # Save the image data
    with open(file_name, 'wb') as file:
        file.write(image_data)

This approach is more involved than the previous approaches using urllib.request and the requests library. So let’s break down the different steps:

- We begin by importing the `urllib3` module, which provides functionality for making HTTP requests.
- Then we specify the URL of the XKCD comic image.
- Next, we create an instance of `urllib3.PoolManager()`. This object manages the connection pool and allows us to make HTTP requests.
- We then use the `http.request('GET', url)` method to send an HTTP GET request to the specified URL. This request fetches the content of the XKCD comic.
- Once the request completes, we retrieve the content (image data) from the HTTP response using `response.data`.
- Finally, we write the image data (retrieved from the response) to the file.
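The steps above save the response body without looking at the status code. Here is a minimal sketch that checks `response.status` first, since by default urllib3 does not raise an exception for a 404 or 500 response. The helper name `download_with_urllib3` and its return values are assumptions for illustration.

```python
import urllib3


def download_with_urllib3(url, dest):
    """Write the response body to `dest` only if the server answered 200 OK."""
    http = urllib3.PoolManager()
    response = http.request('GET', url)
    # By default urllib3 returns 4xx/5xx responses instead of raising,
    # so inspect the status code before saving anything
    if response.status != 200:
        print(f'Unexpected HTTP status: {response.status}')
        return False
    with open(dest, 'wb') as file:
        file.write(response.data)
    return True


# Example call (requires network access):
# download_with_urllib3('https://imgs.xkcd.com/comics/python.png', 'xkcd_comic.png')
```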

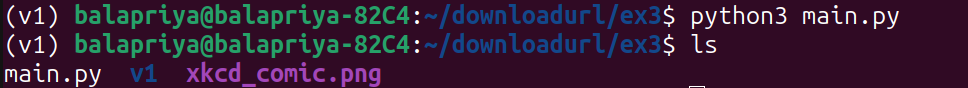

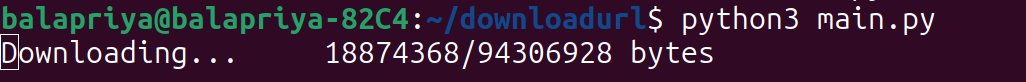

When you run your Python script, you should get the following output:

Using wget

The wget Python library simplifies file downloads from URLs. You can use it to retrieve web resources, and it is especially handy for automating download tasks.

You can install the wget library using pip and then use its functions to download files from URLs:

    pip install wget

This snippet uses the wget module to download the XKCD Python comic and save it as ‘xkcd_comic.png’ in the working directory:

    import wget

    url = 'https://imgs.xkcd.com/comics/python.png'
    wget.download(url, 'xkcd_comic.png')

Here:

- We import the `wget` module.
- Set the URL of the XKCD Python comic image.
- Use `wget.download` to download the image and save it as ‘xkcd_comic.png’ in the current directory.
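Every snippet so far hard-codes the output name ‘xkcd_comic.png’. If you would rather derive the name from the URL, the sketch below shows one way to do it with the standard library. The helper `filename_from_url` and its `default` fallback are hypothetical names, and the sketch does not decode percent-encoded characters.

```python
import posixpath
from urllib.parse import urlparse


def filename_from_url(url, default='download'):
    """Return the last path segment of `url`, or `default` if there is none."""
    # urlparse separates the path from the query string and fragment
    path = urlparse(url).path
    name = posixpath.basename(path)
    return name or default


print(filename_from_url('https://imgs.xkcd.com/comics/python.png'))  # python.png
```

You could pass the returned name as the second argument to any of the download calls shown in this tutorial.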

When you download the XKCD comic using wget, you should see a similar output:

Using PyCURL

If you’ve used a Linux machine or a Mac, you may be familiar with the cURL command-line tool to download files from the web.

PyCURL, a Python interface to libcurl, is a powerful tool for making HTTP requests. It provides fine-grained control over requests and you can use it for advanced use cases when handling web resources.

Installing pycurl in your working environment may be complex. Try installing using pip:

    pip install pycurl

⚠️ If you get errors during the process, you can check the PyCURL installation guide for troubleshooting tips.

Alternatively, if you have cURL installed, you can install the Python bindings to libcurl like so (the apt commands here assume a Debian-based distribution such as Ubuntu):

    sudo apt install python3-pycurl

Note: Before you install the Python bindings, you need to have cURL installed. If you don’t have cURL installed on your machine, you can install it like so:

    sudo apt install curl

Downloading Files with PyCURL

Here’s the code to download the XKCD Comic using PyCURL:

    import pycurl
    from io import BytesIO

    # URL of the XKCD Python comic
    url = 'https://imgs.xkcd.com/comics/python.png'

    # Create a Curl object
    c = pycurl.Curl()

    # Set the URL
    c.setopt(pycurl.URL, url)

    # Create a BytesIO object to store the downloaded data
    buffer = BytesIO()
    c.setopt(pycurl.WRITEDATA, buffer)

    # Perform the request
    c.perform()

    # Check if the request was successful (HTTP status code 200)
    http_code = c.getinfo(pycurl.HTTP_CODE)
    if http_code == 200:
        # Save the downloaded data to a file
        with open('xkcd_comic.png', 'wb') as f:
            f.write(buffer.getvalue())

    # Close the Curl object
    c.close()

Let’s break down the larger snippet into smaller code snippets for each step:

Step 1: Import the Required Modules

First, we import pycurl so we can use it for making HTTP requests. Then we import BytesIO from the io module to create a buffer for storing the downloaded data:

    import pycurl
    from io import BytesIO

Step 2: Create a Curl Object and Set the URL

We specify the URL of the XKCD Python comic that we want to download and create a Curl object, which represents the HTTP request. Then, we set the URL for the Curl object using c.setopt(pycurl.URL, url):

    # URL of the XKCD Python comic
    url = 'https://imgs.xkcd.com/comics/python.png'

    # Create a Curl object
    c = pycurl.Curl()

    # Set the URL
    c.setopt(pycurl.URL, url)

Step 3: Create a BytesIO Object and Set the WRITEDATA Option

We create a BytesIO object to store the downloaded data and configure the Curl object to write the response data to our buffer using c.setopt(pycurl.WRITEDATA, buffer):

    # Create a BytesIO object to store the downloaded data
    buffer = BytesIO()
    c.setopt(pycurl.WRITEDATA, buffer)

Step 4: Perform the Request

Execute the HTTP request using c.perform() and retrieve the comic image data:

    # Perform the request
    c.perform()

Step 5: Check the HTTP Status Code and Save the Downloaded Data

We get the HTTP status code using c.getinfo(pycurl.HTTP_CODE) to ensure the request was successful (HTTP code 200). If the HTTP status code is 200, we write the data from the buffer to the image file:

    # Check if the request was successful (HTTP status code 200)
    http_code = c.getinfo(pycurl.HTTP_CODE)
    if http_code == 200:
        # Save the downloaded data to a file
        with open('xkcd_comic.png', 'wb') as f:
            f.write(buffer.getvalue())

Step 6: Close the Curl Object

Finally, we close the curl object using c.close() to clean up resources:

    # Close the Curl object
    c.close()

How to Download Large Files in Smaller Chunks

So far, we’ve seen different ways to download the XKCD Python comic—a small image file—into the current directory.

However, you may also want to download much larger files such as installers for IDEs and more. When downloading such large files, it’s helpful to download them in smaller chunks and also track the progress as the download proceeds. We can use the requests library’s functionality for this.

Let’s use requests to download the VS Code installer in chunks of size 1 MB:

    import requests

    # URL of the Visual Studio Code installer EXE file
    url = 'https://code.visualstudio.com/sha/download?build=stable&os=win32-x64-user'

    # Chunk size for downloading
    chunk_size = 1024 * 1024  # 1 MB chunks

    response = requests.get(url, stream=True)

    # Determine the total file size from the Content-Length header
    total_size = int(response.headers.get('content-length', 0))

    with open('vs_code_installer.exe', 'wb') as file:
        for chunk in response.iter_content(chunk_size):
            if chunk:
                file.write(chunk)
                file_size = file.tell()  # Get the current file size
                print(f'Downloading... {file_size}/{total_size} bytes', end='\r')

    print('Download complete.')

Here:

- We set the `chunk_size` to determine the size of each chunk (1 MB in this example).
- Then we use `requests.get` with `stream=True` to stream the response content without loading the entire file into memory at once.
- We save each chunk to the file sequentially as it is downloaded.
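The same idea can be wrapped in a reusable function. The sketch below is one possible arrangement, not the only one: `format_progress` and `download_in_chunks` are hypothetical helper names, the 30-second timeout is an assumption, and the progress text falls back to a plain byte count when the server sends no Content-Length header.

```python
import requests


def format_progress(done, total):
    """Return a progress string; fall back to a byte count if total is unknown."""
    if total > 0:
        return f'{done}/{total} bytes ({done / total:.0%})'
    return f'{done} bytes'


def download_in_chunks(url, dest, chunk_size=1024 * 1024):
    """Stream `url` to `dest` in chunks and return the number of bytes written."""
    # Using the response as a context manager releases the connection afterwards
    with requests.get(url, stream=True, timeout=30) as response:
        response.raise_for_status()
        # Content-Length may be absent, hence the default of 0
        total = int(response.headers.get('content-length', 0))
        done = 0
        with open(dest, 'wb') as file:
            for chunk in response.iter_content(chunk_size):
                file.write(chunk)
                done += len(chunk)
                print(f'Downloading... {format_progress(done, total)}', end='\r')
    print()  # move past the progress line
    return done


# Example call (requires network access):
# download_in_chunks(
#     'https://code.visualstudio.com/sha/download?build=stable&os=win32-x64-user',
#     'vs_code_installer.exe',
# )
```

Counting `len(chunk)` instead of calling `file.tell()` keeps the progress logic independent of the file object.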

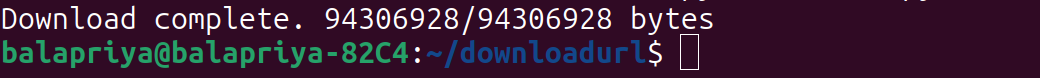

As the download proceeds, you’ll see the number of bytes currently downloaded/total number of bytes:

After the download is complete you should see the ‘Download complete’ message:

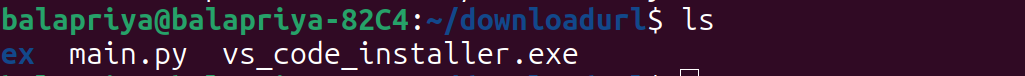

And you should see the VS Code installer in your directory:

Wrapping Up

I hope you have learned a few different ways to download files from URLs using Python. In addition to the built-in urllib.request, we’ve covered popular third-party Python packages such as requests, urllib3, wget, and PyCURL.

As a developer, I’ve used the requests library more than others in my projects for downloading files and working with web APIs in general. But the other methods might also come in handy depending on the complexity of the download task and the level of granularity you need on the HTTP requests. Happy downloading!
