Meet the robot that knows your house better than you do

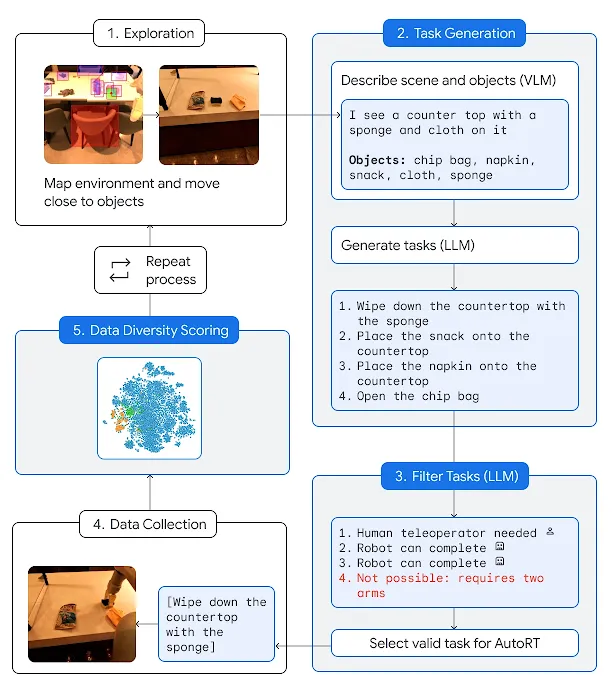

Have you ever wondered how Google DeepMind trains its robots? The lab just put out a new research paper showing how it uses Gemini 1.5 Pro’s large context window to teach robots to move around and get things done. This is a big deal for AI-assisted robots.

Compared with its predecessors, Gemini 1.5 Pro can process far more data at once thanks to its extended context window. That lets the robot “remember” and understand its surroundings in detail, which helps it be more flexible and adaptable.

The researchers make clever use of this by letting robots “watch” video tours of a location, much as a human would.

Because the long context window lets the model take in an entire tour at once, it changes the way robots perceive and engage with their surroundings.

Here is how it works:

- A tour of a location, such as a home or office, is filmed by researchers.

- The robot, powered by Gemini 1.5 Pro, watches the video.

- The robot picks up on the room’s essential elements, layout, and locations of objects.

- The robot navigates by using its “memory” of the video when it receives a command later.
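The steps above can be pictured with a toy sketch. This is not DeepMind’s implementation: the `TourFrame` structure, the `NEEDS` table, and `find_goal_frame` are all hypothetical names invented for illustration, and a simple keyword lookup stands in for the reasoning that Gemini 1.5 Pro performs over the real video.

```python
from dataclasses import dataclass


@dataclass
class TourFrame:
    """One annotated moment from the tour video (hypothetical structure)."""
    index: int
    location: str
    objects: tuple


# Hypothetical table mapping a keyword in the request to a useful object.
# In the research system the model infers this; here it is hard-coded.
NEEDS = {"charge": "power outlet", "drink": "refrigerator", "sit": "sofa"}


def find_goal_frame(tour, request):
    """Return the first tour frame showing an object that fits the request."""
    text = request.lower()
    for keyword, wanted in NEEDS.items():
        if keyword in text:
            for frame in tour:
                if wanted in frame.objects:
                    return frame
    return None  # nothing in the remembered tour matches


# The "memory" of the tour: what was seen, and where.
tour = [
    TourFrame(0, "entrance", ("door", "coat rack")),
    TourFrame(1, "living room", ("sofa", "power outlet")),
    TourFrame(2, "kitchen", ("refrigerator", "sink")),
]

goal = find_goal_frame(tour, "Where can I charge this?")
print(goal.location)  # living room
```

The point of the sketch is only the shape of the idea: the tour becomes a searchable memory, and a later request is resolved to a place seen in that memory.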

For instance, if you show the robot your phone and ask, “Where can I charge this?”, it can direct you to a power outlet it recognizes from the video.

The research group tested these Gemini-powered robots in a space of 9,000 square feet. Ninety percent of the time, the robots successfully followed more than fifty different instructions.

This latest advancement marks a significant step toward robots navigating intricate areas more efficiently. The technology has numerous practical applications, such as enhancing work procedures and assisting the aging population. It’s possible that these robots could have capabilities beyond just movement.

Early evidence suggests that these robots can plan out multi-step tasks, according to the DeepMind team. For instance, a user with empty soda cans on their desk asks whether their preferred beverage is available. The robot determines that it must:

- Head to the refrigerator.

- Verify the particular beverage.

- Return and report on its findings.
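As a rough illustration of that three-part plan, here is a minimal sketch that treats the plan as an ordered list of steps. The function names, the action labels, and the idea of passing in the fridge contents as a set are all assumptions made for this example; the article does not describe how the real system represents its plans.

```python
def plan_drink_check(drink):
    """Build a hypothetical step list for 'is my drink available?'."""
    return [
        ("navigate", "refrigerator"),
        ("inspect", drink),
        ("navigate", "user"),
        ("report", drink),
    ]


def run_plan(plan, fridge_contents):
    """Walk through the steps in order and return a log of what happened."""
    log = []
    found = False
    for action, target in plan:
        if action == "navigate":
            log.append(f"going to {target}")
        elif action == "inspect":
            # Stand-in for the robot visually checking the fridge.
            found = target in fridge_contents
            log.append(f"checked for {target}")
        elif action == "report":
            status = "available" if found else "not available"
            log.append(f"{target} is {status}")
    return log


for line in run_plan(plan_drink_check("cola"), {"cola", "water"}):
    print(line)
```

Run as written, the log ends with `cola is available`; with an empty fridge set it would end with `cola is not available`.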

This shows a level of execution and understanding beyond simple navigation. However, there is still plenty of room for improvement. For example, the system takes ten to thirty seconds to execute each command, which is not quick enough for real-world use.

Furthermore, the system has only been tested in controlled environments, not the chaotic, unpredictable real world. However, the DeepMind group is not giving up. The team is working to make the system faster and more capable of handling intricate tasks. With further advances in the technology, it may one day be possible to create robots that can navigate our environment and comprehend human language.