Salesforce Has a New AI Goal — and It’s Not AGI

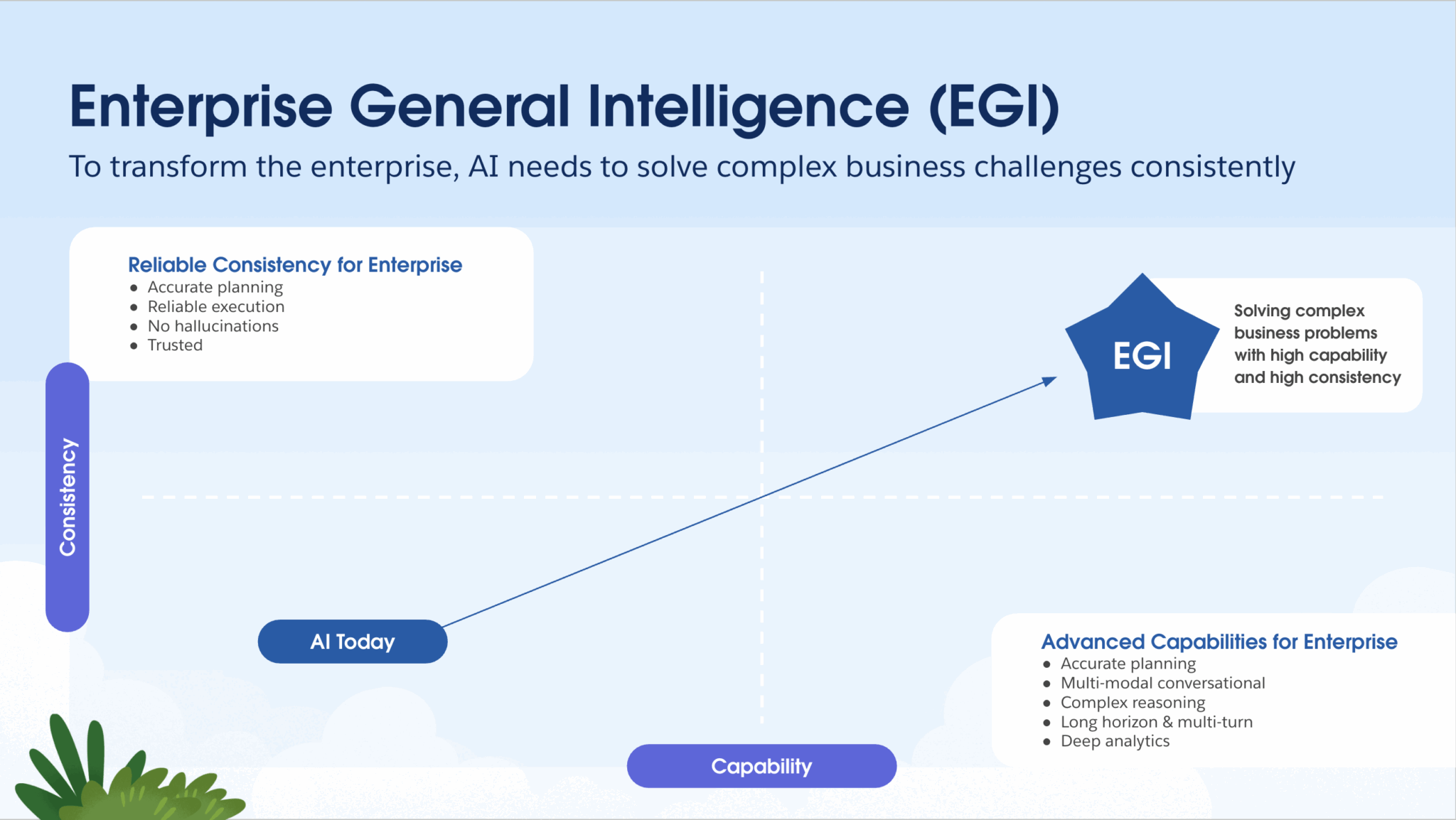

Today, the majority of AI companies are chasing Artificial General Intelligence (AGI). However, Salesforce has taken a different approach. It is working on a more immediate and practical problem: making AI reliable for businesses to use. The company is doubling down on what it calls Enterprise General Intelligence (EGI), a new framework focused less on AI’s potential and more on its consistency, safety, and trustworthiness in real-world enterprise settings.

The shift comes at a time when large language models (LLMs) can write emails, summarize reports, and reason over vast datasets, yet increasingly show signs of “jagged intelligence.” In simple terms, they may perform impressively on some tasks while failing at basic ones. Worse, they sometimes make things up.

These hallucinations remain a key roadblock to enterprise adoption. In consumer settings, such errors might go viral or be brushed off; in business environments, they can lead to compliance failures, financial losses, and customer mistrust.

As a result, Salesforce is now aiming to address this issue not by chasing futuristic goals but by building infrastructure to ensure AI can be trusted in the present.

From Jagged Intelligence to a Consistent AI Layer

Salesforce’s diagnosis of the current state of AI is that LLMs are uneven. “It’s like hiring an intern who can write perfect code but forgets to save the file,” the company noted in a blog post.

To fix this issue, Salesforce is introducing multiple layers of reinforcement, which are designed to increase the consistency of its AI agents. As expected, Agentforce, the company’s own agentic system, is leading this charge.

Also key is the Atlas Reasoning Engine, a system that enables more accurate retrieval and reasoning by combining internal and external data sources. Together, these systems form the backbone of Salesforce’s EGI framework, which aims to make digital labor trustworthy and dependable.

Testing AI Like a Pilot in a Flight Simulator

The company is taking a different approach to testing its AI agents. Instead of solely relying on benchmarks, it has launched CRMArena, a simulated environment that evaluates AI agents across real-world CRM tasks like service support and business analytics.

The early results from this benchmarking approach are not inspiring: success rates for key tasks, even with guided prompting, are below the 65% mark. Looked at closely, however, that is the point Salesforce is making. The company wants to stress-test AI agents under real conditions, revealing where they fall short before they’re deployed in customer-facing roles.

A Platform Approach, Not Just a Model

Salesforce’s message is clear: enterprises need more than capable models; they need a system that ensures predictability, accountability, and safe interaction at scale.

With this in mind, Salesforce is positioning EGI not as a future promise but as a current objective that sidesteps the hype around AGI and focuses on making AI usable, trustworthy, and consistent in real work environments. How effective EGI will be, only time will tell. For now, at least, the AI team at Salesforce seems to be thinking in the right direction.