Load balancers are available as enterprise (paid) and open-source options. Enterprise load balancers offer premium features, high scalability, and dedicated support, making them ideal for large enterprises with complex requirements. In contrast, open-source load balancers excel in customization, transparency, and cost-effectiveness, benefiting from community-driven development and support.

When you are working on a non-production application or less critical infrastructure, free load balancer software is a good option.

Below, I’ve listed application and network load balancer software based on scalability, performance, ease of configuration, protocol support, security and, more importantly, community support.

- 1. Free LoadMaster

- 2. Skudonet Community Edition

- 3. HAProxy Community Edition

- 4. Traefik

- 5. Gobetween

- 6. Caddy

- 7. Seesaw

- 8. Cilium

- 9. Balance

- 10. MetalLB

- 11. Keepalived

- 12. Envoy

- 13. NGINX

1. Free LoadMaster

Free LoadMaster provides a powerful application load-balancing solution for small businesses and developers in development, testing, and pre-production environments. It integrates well with Azure and AWS cloud services and can serve as a reverse proxy for resources such as Microsoft Exchange, Lync, and web servers. It offers a Web Application Firewall (WAF) engine, which allows administrators to set and deploy custom rules to protect web servers.

Free LoadMaster includes the Kemp Edge Security Pack (ESP), providing features like endpoint pre-authentication, group membership validation, and Active Directory authentication integration. Integrating with ADFS (Active Directory Federation Services) ensures cross-site resilience, maintaining authentication services even during site outages. The load-balanced ADFS solution integrates well with both cloud services and on-premises deployments, delivering continuous availability.

Free LoadMaster includes Kemp’s Global Server Load Balancer (GSLB), enabling efficient load balancing across multiple locations, such as managed service providers, private and public clouds, and in-house data centers. GSLB intelligently directs traffic to the nearest and best-performing server using DNS responses.

2. Skudonet Community Edition

Skudonet Community Edition is an open-source load-balancing tool optimized for performance and scalability. Built on Linux, it offers robust security, transparency, and customization flexibility.

With a user-friendly interface, Skudonet simplifies service management while providing advanced load balancing with granular control, allowing traffic distribution based on real-time server performance metrics.

Skudonet Community Edition provides real-time performance and health monitoring, enabling proactive network management for your website, application, or business. With its application-layer focus, it efficiently distributes application and network loads across multiple servers, enhancing performance and ensuring efficient handling of increased web traffic.

Read more in our Skudonet review.

Skudonet offers a Knowledge Base with training, essential information, and user guidance, along with a community support forum for interaction and tip sharing. Users can also submit support tickets for quick help from the support team.

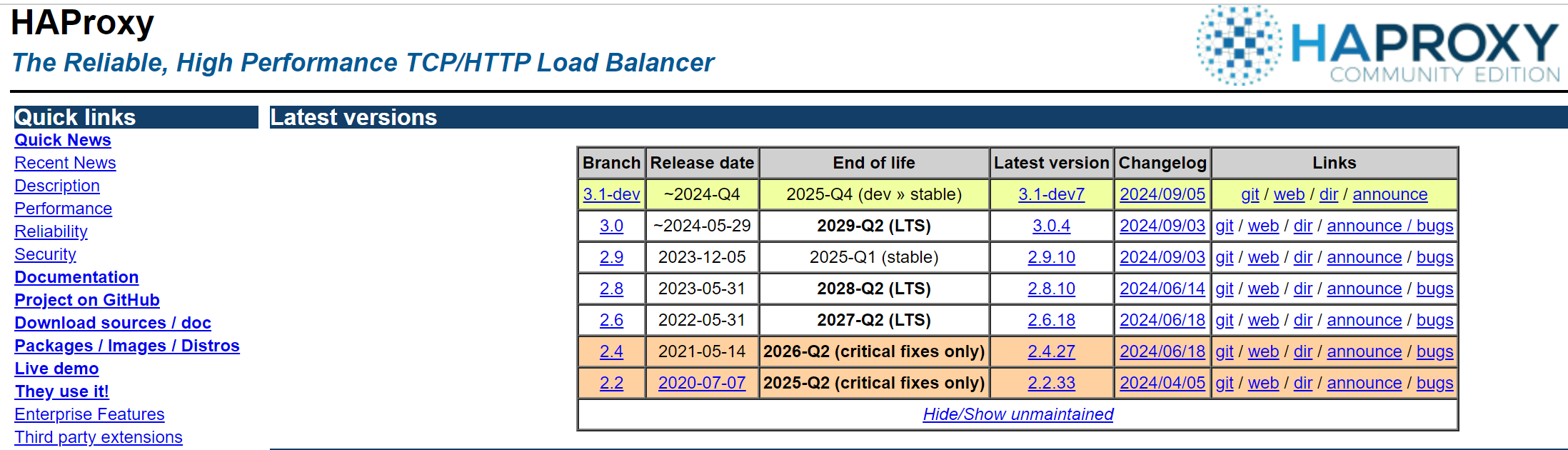

3. HAProxy

HAProxy is a free, popular network load balancer designed for high-traffic websites. It scales efficiently, handling up to 2 million SSL requests per second and 100 Gbps of forwarded traffic. Its event-driven architecture ensures quick responsiveness to I/O events.

HAProxy is designed to be secure by default: non-portable and unreliable functions are replaced for stability, and all input data is sanitized early in the lower layers. To safeguard applications, it offers defensive features such as strict protocol validation, privilege drops, and fork prevention.

HAProxy offers around 10 different load-balancing algorithms, including the “first” algorithm, which is ideal for short-lived virtual machines. This algorithm consolidates all connections onto the smallest subset of servers, allowing the remaining servers to power down and conserve resources. HAProxy also supports session stickiness, which routes repeat visitors to the same server (for example, by means of a cookie) and so speeds up access to their session data.
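The consolidation behavior of “first” can be illustrated with a small Python sketch. This is not HAProxy code: the `Server` class, the `max_conn` limit, and the `pick_first` helper are hypothetical names I've assumed for illustration. The idea is simply to walk the servers in a fixed order and use the first one that still has a free connection slot.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Server:
    name: str
    max_conn: int      # assumed per-server connection limit
    active: int = 0    # connections currently open


def pick_first(servers: list[Server]) -> Optional[Server]:
    """Return the first server (in list order) with a free slot, or None."""
    for server in servers:
        if server.active < server.max_conn:
            server.active += 1
            return server
    return None


pool = [Server("s1", max_conn=2), Server("s2", max_conn=2), Server("s3", max_conn=2)]
chosen = [pick_first(pool).name for _ in range(3)]
print(chosen)  # ['s1', 's1', 's2'], so s3 stays idle
```

Because later servers are only touched once earlier ones are full, the tail of the pool stays idle under light load, which is what makes powering those servers down possible.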

HAProxy integrates with third-party apps like 51Degrees, ScientiaMobile, MaxMind, NSI, and DeviceAtlas to help extend its functionality. For instance, the ScientiaMobile integration brings device detection, making it easy for application and website owners to collect device analytics about tablets and smartphones. You can analyze traffic patterns, pinpoint problems, and identify opportunities to improve your app or website’s performance.

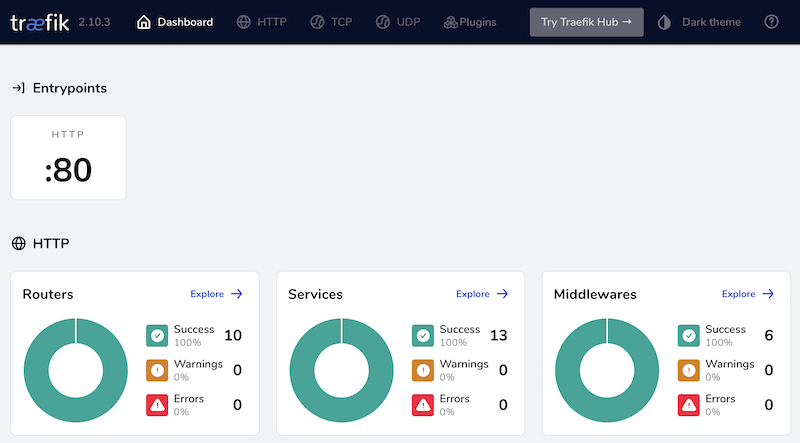

4. Traefik Proxy

Traefik is a cloud-native application proxy and virtual load balancer designed for microservices and APIs. It supports both Layer 4 (TCP) and Layer 7 (HTTP) traffic. Layer 4 load balancing works at the transport level, forwarding connections based on IP addresses and ports, while Layer 7 operates at the application level, allowing more granular control over HTTP traffic and routing incoming requests based on content.

Users also get precise control over how traffic is directed, as Traefik supports advanced routing techniques based on headers, paths, and hostnames. The Let’s Encrypt integration is handy for those who want production-ready deployments, as it automatically handles certificate management.

Traefik integrates well with container platforms like Docker, Kubernetes, and Mesos, automatically routing traffic to them without manual configuration. It inspects your infrastructure, looks for relevant information, and automatically discovers which service serves which request.

Traefik enables you to configure health checks and automatically remove unhealthy servers from the pool. And, with the help of RateLimit Middleware, you can control request rates, protecting applications from resource exhaustion and Denial-of-Service (DoS) attacks. This ensures service availability even during high traffic.
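To make the rate-limiting idea concrete, here is a minimal token-bucket sketch in Python. A token bucket is one common way to implement this kind of limit; the `TokenBucket` class and its `rate`, `burst`, and `clock` parameters are illustrative assumptions, not Traefik's actual implementation or configuration keys.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock            # injectable so the demo can use a fake clock
        self.tokens = float(burst)    # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


fake_time = [0.0]
bucket = TokenBucket(rate=2, burst=3, clock=lambda: fake_time[0])
burst_results = [bucket.allow() for _ in range(4)]
fake_time[0] += 1.0                   # one second later, two tokens have been refilled
later_results = [bucket.allow() for _ in range(3)]
print(burst_results)  # [True, True, True, False]
print(later_results)  # [True, True, False]
```

Requests that find the bucket empty are rejected immediately, so a sudden spike is absorbed only up to the burst size and the backend never sees more than the configured average rate.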

User review on Traefik Proxy

A.N.M. Saiful Islam

I use Traefik Proxy for Geekflare API and it is a really helpful tool. It works easily with Docker and Kubernetes, making my setup much more straightforward. It also automatically finds services and updates configurations, saving me a lot of time and effort.

What do you like the most about Traefik Proxy?

Ease of use, auto discovery, extensive plugins support, lightweight, and a beautiful dashboard are some things I like the most about Traefik.

What didn’t you like about Traefik?

The documentation isn’t very clear, and I often find myself confused when using advanced features. Debugging was also challenging because the logs were long and hard to understand. Fixing these issues would make it so much better.

5. Gobetween

Gobetween is a flexible load balancer designed for microservice architectures. By default, it uses TCP for load balancing, but you can configure TLS for secure traffic decryption and termination before forwarding it to the backends.

Gobetween conducts routine health checks to monitor the status of backend nodes. Several methods can be used, including a basic ping to verify the connection between each backend node and Gobetween. Additionally, users can create custom scripts for health checks. It includes a built-in server-side discovery feature. Depending on your configuration, it can operate as a router by querying various service registries or using static settings.
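The basic "ping" style of health check described above boils down to attempting a TCP connection to each backend. The following Python sketch shows that idea under my own assumptions: `tcp_ping` and `healthy_backends` are hypothetical helper names, and the demo uses a throwaway local listener rather than real backends.

```python
import socket


def tcp_ping(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def healthy_backends(backends: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Keep only the backends that currently accept connections."""
    return [backend for backend in backends if tcp_ping(*backend)]


# Demo against a throwaway local listener so the example needs no real backends.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))       # port 0 lets the OS pick a free port
listener.listen()
up = ("127.0.0.1", listener.getsockname()[1])

before = healthy_backends([up])       # listener is open, so it should be kept
listener.close()
after = healthy_backends([up])        # listener is gone, so it should be dropped
print(before, after)
```

A real balancer would run such checks on a timer and usually require several consecutive failures before removing a node, so that one dropped packet does not take a backend out of rotation.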

You can use Gobetween for simple load balancing, SRV balancing, an Elasticsearch cluster with Exec discovery, Docker/Swarm balancing, and service balancing. It enables access control through the REST API or configuration files, with policies that either “allow” or “deny” traffic.

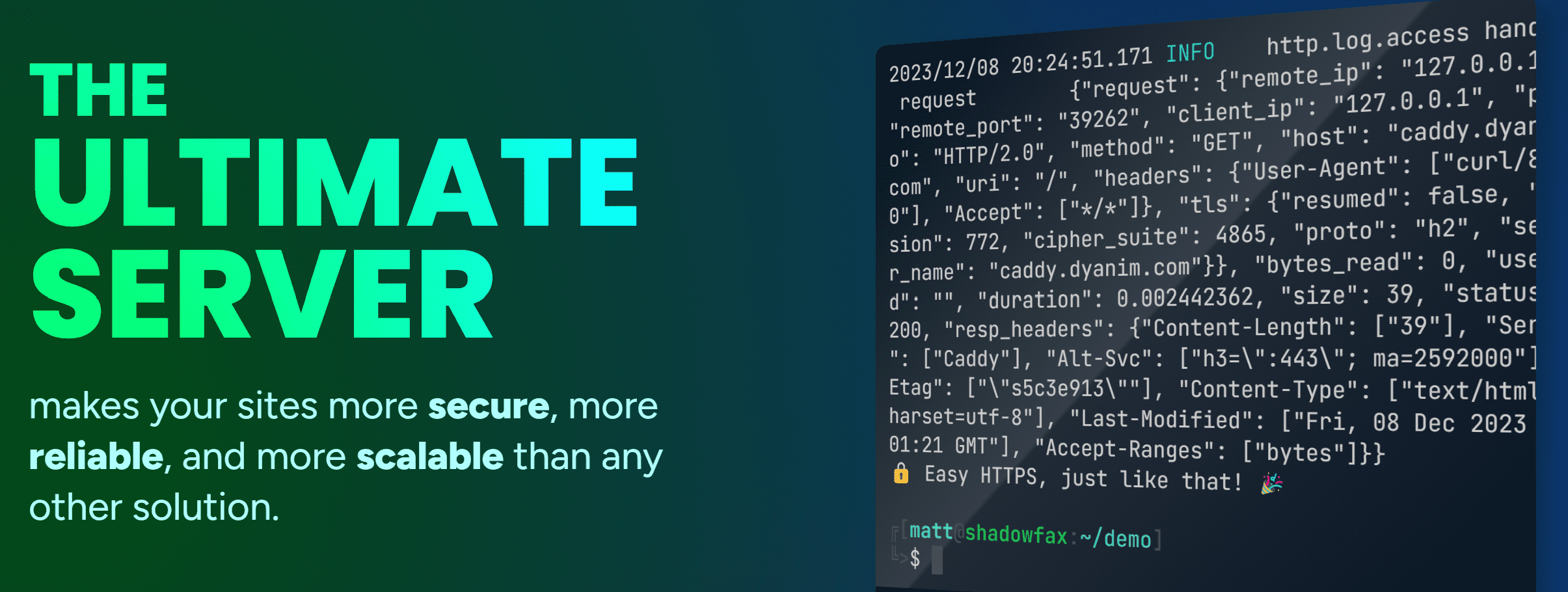

6. Caddy

Caddy is an open-source web server written in Go, functioning as both a web server and load balancer without external dependencies. It uses HTTPS automatically and, by default, applies a “random” load-balancing policy, sending each incoming request to a randomly selected upstream server.

Caddy handles TLS termination by managing SSL certificates and encrypting/decrypting HTTPS traffic, offloading this workload from backend servers so they can focus on business logic. It also automates SSL certificate issuance and renewal through Let’s Encrypt, ensuring secure communication.

Caddy offers high availability features like load limiting, graceful (hitless) config changes, advanced health checks, online retries, and circuit breaking. It supports dynamic scaling, so you can add or remove backend servers as your traffic needs change, and it enhances performance by serving precompressed files or compressing them on the fly.

You can also configure round-robin load balancing, which hands requests to the upstream servers in turn. For example, if you have two web servers, the first request will be routed to the first server, the second request to the second server, and the third one to the first server again. Other load-balancing policies in Caddy include least connections, first available, and IP hash.
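The two-server example above can be reproduced in a few lines of Python. This is only a sketch of the round-robin idea, not Caddy's code, and the upstream addresses are made up.

```python
from itertools import cycle

upstreams = ["web1:8080", "web2:8080"]   # hypothetical backend addresses
next_upstream = cycle(upstreams)         # endlessly repeats the list in order

assignments = [next(next_upstream) for _ in range(3)]
print(assignments)  # ['web1:8080', 'web2:8080', 'web1:8080']
```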

7. Seesaw

Seesaw is a flexible load-balancing tool providing basic and advanced network-balancing capabilities. It supports Direct Server Return (DSR) and Anycast, enabling efficient traffic management. It offers centralized configuration and supports multiple VLANs, making it ideal for complex network environments.

To create a load-balancing cluster, you need at least two Seesaw nodes, which can be virtual instances or physical machines. Each node should have at least two network interfaces: one for the host itself and one for the cluster VIP. All four interfaces should be connected to the same Layer 2 network.

Seesaw fully supports Anycast VIPs by dynamically managing their availability. It advertises active Anycast VIPs and withdraws those that are unavailable. To enable this functionality, you’ll need to install and configure the Quagga BGP daemon with BGP peers that accept host-specific routes for Anycast VIPs.

After configuration, users can view the state of the Seesaw load balancer via the Seesaw command line interface. In case of any issues, users can use components such as seesaw_watchdog and seesaw_healthcheck to troubleshoot and devise solutions.

8. Cilium

Cilium is a powerful Layer 4 load balancer designed for cloud-native environments. It leverages BGP (Border Gateway Protocol) to attract traffic and accelerates its performance using eBPF (extended Berkeley Packet Filter) and XDP (eXpress Data Path) to ensure a secure, high-performance load-balancing solution.

Cilium offers robust support for both north-south and east-west load-balancing strategies. For north-south traffic, its eBPF-based implementation is highly optimized for maximum performance and can be integrated with XDP for even faster packet processing. It also supports advanced features like Maglev consistent hashing and Direct Server Return (DSR) when the load balancing occurs off the source host.
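Maglev consistent hashing is easier to follow with a toy example. The sketch below follows the table-population scheme from the published Maglev design, but it is not Cilium's eBPF implementation: the function names, the SHA-256 based hashes, and the tiny table size of 13 are my own assumptions (real tables use a much larger prime).

```python
import hashlib


def _hash(value: str, seed: str) -> int:
    """Derive a stable integer hash from a value and a seed label."""
    digest = hashlib.sha256(f"{seed}:{value}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def build_maglev_table(backends: list[str], size: int = 13) -> list[str]:
    """Fill a lookup table of prime `size`, letting backends claim slots in turn."""
    if not backends:
        raise ValueError("at least one backend is required")
    # Each backend gets its own permutation of slots: offset, offset+skip, ...
    offsets = [_hash(b, "offset") % size for b in backends]
    skips = [_hash(b, "skip") % (size - 1) + 1 for b in backends]
    next_index = [0] * len(backends)
    table: list = [None] * size
    filled = 0
    while True:
        for i, backend in enumerate(backends):
            slot = (offsets[i] + next_index[i] * skips[i]) % size
            # Skip preferred slots that another backend has already taken.
            while table[slot] is not None:
                next_index[i] += 1
                slot = (offsets[i] + next_index[i] * skips[i]) % size
            table[slot] = backend
            next_index[i] += 1
            filled += 1
            if filled == size:
                return table


def pick_backend(table: list[str], flow: str) -> str:
    """Map a flow identifier (for example its 5-tuple) to a backend."""
    return table[_hash(flow, "flow") % len(table)]


backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
table = build_maglev_table(backends)
flow = "198.51.100.7:51234->203.0.113.10:443"
print(pick_backend(table, flow))
```

Because the backends take turns claiming slots, each one ends up with an almost equal share of the table, and every load balancer node that builds the table from the same backend list sends a given flow to the same backend.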

For east-west traffic, Cilium enables efficient service-to-backend translation directly within the Linux kernel’s socket layer, ensuring high-performance internal communication between services.

Cilium’s Cluster Mesh allows multi-cluster routing across on-premises and cloud environments, exposing backends that run in different clusters through a shared global service. Cilium also comes with Cilium Service Mesh, a sidecar-free data plane powered by Envoy that offloads a large portion of service mesh functionality into the kernel, reducing overhead and complexity.

9. Balance

Balance by Inlab Networks is a lightweight yet powerful TCP proxy with round-robin load balancing. It distributes incoming traffic evenly by sequentially routing each connection to the next available server. It is cross-platform, working on Mac OS X, Linux, BSD/OS, HP-UX, and many more operating systems.

Balance provides failover features to ensure service continuity by automatically switching to another server if the primary one fails. It also performs regular health checks to monitor server status and detect potential issues.

Users can dynamically adjust Balance’s behavior via its simple command-line interface. It supports IPv6, simplifying routing and enabling automatic IP address configuration through Stateless Address Autoconfiguration (SLAAC).

10. MetalLB

MetalLB is a robust load balancer designed specifically for bare-metal Kubernetes clusters, utilizing standard routing protocols. It automatically assigns a fault-tolerant external IP whenever a LoadBalancer service is added to your cluster.

With support for local traffic, MetalLB ensures that the machine receiving the request handles the data, enhancing efficiency and performance.

MetalLB requires a Kubernetes cluster running version 1.13.0 or later that does not already have network load-balancing functionality. It offers two modes: Border Gateway Protocol (BGP) and Layer 2 (L2). BGP is ideal for large-scale networks and data centers due to its scalability. It peers with external BGP-capable routers or network switches to announce the IP addresses assigned to Kubernetes services.

On the backend, MetalLB offers an FRR mode that uses an FRR container to handle BGP sessions. This makes it possible to advertise IPv6 addresses and pair BGP sessions with BFD sessions, features that are unavailable with the native BGP implementation. FRR mode is available to all users who need BFD or IPv6.

Layer 2 (L2) on MetalLB is ideal for small local networks, like home labs, where minimal configuration is needed. It doesn’t require external routers or advanced networking knowledge. However, L2 mode is less scalable than BGP, as it relies on ARP/NDP broadcasts, which can become inefficient in larger networks.

11. Keepalived

Keepalived is routing software that offers high availability and load balancing for Linux systems. It provides Layer 4 load balancing via the Linux Virtual Server (IPVS) kernel module, distributing traffic across multiple servers to ensure system resilience and optimal performance, even during server failures.

Keepalived uses adaptive checkers to monitor the health of load-balanced server pools. When a server becomes unreachable or unhealthy, traffic is automatically redirected to healthy servers.

Keepalived delivers high availability by leveraging VRRP (Virtual Router Redundancy Protocol). It integrates a series of hooks into VRRP, enabling fast, low-level protocol interactions. Additionally, VRRP utilizes BFD (Bidirectional Forwarding Detection) for rapid network failure detection. When paired with tools like IPVS or HAProxy, Keepalived can handle anything from basic load balancing to more advanced configurations.
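The failover behavior that VRRP provides can be pictured with a deliberately simplified Python sketch. Real VRRP relies on periodic advertisements and timers, which are omitted here, and the `VrrpNode` class and `elect_master` function are hypothetical names of my own: the live node with the highest priority owns the virtual IP.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VrrpNode:
    name: str
    priority: int        # higher priority wins the election
    alive: bool = True   # stands in for "advertisements are still being received"


def elect_master(nodes: list[VrrpNode]) -> Optional[VrrpNode]:
    """Return the live node with the highest priority, or None if all are down."""
    candidates = [node for node in nodes if node.alive]
    return max(candidates, key=lambda node: node.priority) if candidates else None


lb1 = VrrpNode("lb1", priority=150)
lb2 = VrrpNode("lb2", priority=100)

master_before = elect_master([lb1, lb2]).name
lb1.alive = False                      # simulate lb1 failing
master_after = elect_master([lb1, lb2]).name
print(master_before, master_after)     # lb1 lb2
```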

Keepalived’s implementation is based on an I/O multiplexer that drives a robust multi-threading framework; all events are processed through this multiplexer. Advanced users can build Keepalived from the source tree if they have autoconf, automake, and its associated libraries installed. You can use the Keepalived frameworks independently or together to build resilient infrastructures.

12. Envoy

Envoy is an open-source edge and service proxy tailored for cloud-native applications and microservices. It offers advanced load balancing features like zone-local balancing, global rate limiting, circuit breaking, request shadowing, and automatic retries.

As an L7 proxy and communication bus, Envoy is ideal for large, modern, service-oriented architectures. It performs dynamic health checks and integrates service discovery to route traffic only to healthy servers.

Envoy’s circuit-breaking policies prevent traffic from being sent to offline or overloaded servers, while retry policies limit the number of retries a server can handle. Users can configure global rate limiting to control traffic to specific paths or services over a set duration, protecting backend systems from traffic spikes.
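A threshold-style circuit breaker of the kind described above can be sketched in Python as follows. This is an illustration only, not Envoy's implementation or configuration schema: `ThresholdBreaker`, `max_requests`, and `max_retries` are assumed names for a guard that rejects new work once too many requests or retries are in flight.

```python
class ThresholdBreaker:
    """Reject new work once too many requests or retries are in flight."""

    def __init__(self, max_requests: int, max_retries: int):
        self.max_requests = max_requests
        self.max_retries = max_retries
        self.active_requests = 0
        self.active_retries = 0

    def try_acquire(self, is_retry: bool = False) -> bool:
        if self.active_requests >= self.max_requests:
            return False               # upstream is saturated
        if is_retry and self.active_retries >= self.max_retries:
            return False               # retry budget is exhausted
        self.active_requests += 1
        if is_retry:
            self.active_retries += 1
        return True

    def release(self, is_retry: bool = False) -> None:
        """Call when a request finishes so its slot becomes available again."""
        self.active_requests -= 1
        if is_retry:
            self.active_retries -= 1


breaker = ThresholdBreaker(max_requests=2, max_retries=1)
results = [breaker.try_acquire(), breaker.try_acquire(), breaker.try_acquire()]
breaker.release()                      # one request completes
after_release = breaker.try_acquire()
print(results, after_release)          # [True, True, False] True
```

Failing fast when a threshold is hit keeps requests from queuing up behind a struggling backend, which is the main point of circuit breaking.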

Envoy handles load balancing for services in a single place, making it available to any application. It supports various load-balancing strategies, such as round robin, where traffic is distributed evenly across all upstreams, or least request, which favors the upstream with the fewest active requests.

Envoy offers first-class support for HTTP/2 and gRPC for both incoming and outgoing connections. It provides advanced observability, including distributed tracing, deep L7 traffic insights, and wire-level monitoring for MongoDB and DynamoDB. Additionally, its robust API allows for dynamic configuration management.

13. NGINX

NGINX is a powerful load balancer, web server, and proxy for all Linux distributions and major operating systems. It is highly flexible and configurable, so you can adjust it to suit your needs.

Instead of a single monolithic process, NGINX operates through a collection of processes to scale beyond OS limitations. The “master” process manages worker processes, while the “workers” handle tasks such as processing HTTP requests.

NGINX distributes incoming traffic using methods like round robin, least connections, and IP hash. The IP hash method ensures requests from the same client are sent to the same server. Advanced users can also implement session persistence and weighted load balancing for more control.
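The IP hash method can be illustrated with a short Python sketch. It is not NGINX's actual hash function: the server names and the `ip_hash_pick` helper are hypothetical, and SHA-256 is used only for convenience. It assumes, as NGINX's documentation describes, that the key is the first three octets of an IPv4 address or the full IPv6 address.

```python
import hashlib
import ipaddress

servers = ["app1", "app2", "app3"]    # hypothetical upstream names


def ip_hash_pick(client_ip: str, servers: list[str]) -> str:
    """Pick a server deterministically from the client's address."""
    addr = ipaddress.ip_address(client_ip)
    # IPv4: key on the first three octets; IPv6: key on the whole address.
    key = addr.packed[:3] if addr.version == 4 else addr.packed
    digest = hashlib.sha256(key).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


first = ip_hash_pick("203.0.113.5", servers)
second = ip_hash_pick("203.0.113.200", servers)   # same /24, so same server
print(first == second)  # True
```

The mapping only stays stable while the server list is unchanged; adding or removing a server reshuffles many clients, which is why consistent hashing is often preferred when the pool changes frequently.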

NGINX monitors the health of backend servers and halts traffic to any unresponsive server until it recovers. In open-source NGINX this is done passively, by watching for failed requests, while active HTTP or TCP probes are a feature of the commercial NGINX Plus. It acts as a scalable load balancer, distributing requests across multiple servers and enabling systems and applications to scale horizontally. As traffic increases, more servers can be easily added to handle the load.

What’s next?

I would suggest trying a few on the list to see what works for your application and infrastructure. If you don’t find a free load balancer suitable for your project, consider a cloud-based load balancer instead.

Editor: Narendra Mohan Mittal is a senior editor & writer at Geekflare. He is an experienced content manager with extensive experience in digital branding strategies.