Synthetic data generation is a process of artificially generated data that statistically resembles the old real dataset while maintaining compliance with data privacy regulations.

Synthetic data is essential for training machine learning models, testing apps, and gaining business insights. However, acquiring real data often involves several challenges, like privacy concerns, limited access, and complex datasets. This is where synthetic data generation tools step in. These tools can create realistic, privacy-safe, scalable synthetic datasets tailored to your model’s needs.

In this article, I have researched and listed the best synthetic data generation tools and how to use them effectively to train your machine learning models.

- 1. MOSTLY AI

- 2. K2View

- 3. Synthesized.io

- 4. YData

- 5. Gretel Workflows

- 6. DoppelGANger

- 7. Synth

- 8. SDV.dev

- 9. Tofu

- 10. Twinify

- 11. Datanamic

- 12. Benerator

- Show less

You can trust Geekflare

At Geekflare, trust and transparency are paramount. Our team of experts, with over 185 years of combined experience in business and technology, tests and reviews software, ensuring our ratings and awards are unbiased and reliable. Learn how we test.

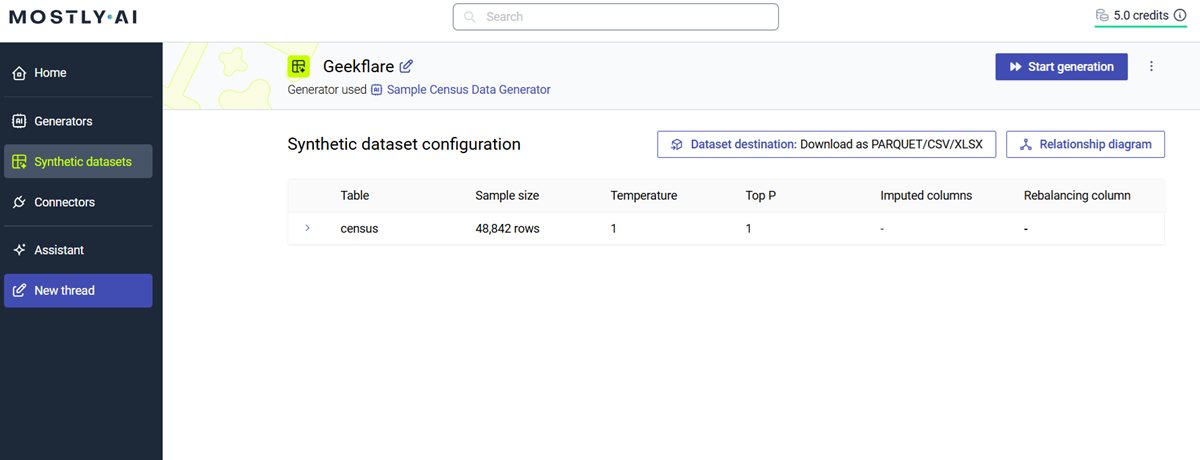

1. MOSTLY AI

MOSTLY AI offers an AI-powered synthetic data generator that uses advanced algorithms to create high-quality, privacy-secure synthetic data with industry-leading accuracy.

It integrates smoothly with data sources like relational databases (MySQL, PostgreSQL, Oracle), cloud platforms (Snowflake, Databricks, BigQuery), and cloud storage (Azure, GCP, AWS).

You can connect MOSTLY AI to your apps or systems through its API. It uses generative AI to understand relationships in the data, filling in gaps with realistic values to keep datasets accurate and reliable. It works with structured data, including numbers, categories, dates, and geolocation data.

MOSTLY AI is ideal for industries like finance (fraud detection, risk modeling), healthcare (research, predictive modeling), and telecom (customer behavior analysis). It offers a free plan with limited features; paid plans start at $3 monthly.

MOSTLY AI Key Features

- Built-in Privacy: It uses original data only for training, keeping it anonymous and preventing re-identification or overfitting.

- Data Insights Reports: Provides a detailed report to evaluate how well synthetic data matches original patterns.

- API & Python Client: Offers API and Python Client for easy application integration.

- AI-Grade Star Schema: Generates synthetic data with an AI-Grade Star schema, preserving relationships and improving table accuracy.

2. K2View

K2View offers an AI-powered synthetic data generation tool to generate realistic and compliant synthetic data for various enterprise needs across development and testing environments without compromising sensitive information and compliance.

It uses synthetic data generation techniques like generative AI, rules engines, data cloning, and data masking to generate the most accurate, compliant, and realistic synthetic data for software testing and AI/ML model training.

Beyond synthetic data generation, K2View offers end-to-end management of the entire synthetic data lifecycle, including source data extraction, subsetting, pipelining, and operational workflows.

K2View Key Features

- Fast Data Generation: It quickly generates large amounts of synthetic data using advanced AI techniques that speed up project timelines and reduce costs for testing.

- Integration-Friendly: Integrate easily with other databases, data lakes, and analytics tools and easily fit into CI/CD workflows and machine learning pipelines using APIs.

- Data Privacy Compliance: Ensures compliance with privacy regulations such as GDPR, HIPAA, and CCPA by replacing sensitive data with synthetic equivalents.

- Customizable Code-Free Data Generation: Users can define their own code-free customizable rules for generating realistic synthetic data.

- End-to-End Automation: Automates the entire synthetic data generation process, reducing manual work and errors.

3. Synthesized.io

Synthesized.io is a generative AI-powered synthetic data generator that creates realistic, production-like test data while protecting sensitive information. It uses intelligent masking techniques to help developers generate accurate data for testing and development with low compliance risks.

It works with Kubernetes to make database provisioning fast and cloud-friendly. Its database generation, masking, and subsetting tools are designed for efficient integration with testing frameworks and platforms like AWS, GCS, Azure, Oracle, and Jenkins.

Synthesized.io uses a “Data as Code” approach to ensure test data stays up-to-date, compliant, and production-like. It quickly detects and fixes data quality issues, helping teams address problems before they cause disruptions.

Synthesized.io offers custom pricing tailored to business needs, focusing on enterprise-scale synthetic data solutions and compliance. It also provides a free developer version with limited features.

Synthesized.io Key Features

- AI-Powered Automated test data: It uses GenAI to generate and automate production-like data, masks sensitive info through intelligent masking techniques, and dynamically updates the application.

- Detect bugs early: Detect and fix data issues and bugs early to avoid operational impacts and ensure stable, reliable releases.

- Speed up cloud and app migration: It eliminates data silos and provides stable, reliable test data for consistent automation results to speed up cloud and app migration.

4. YData

YData supports various data types like time series, transactions, conversational, and relational data to enable effective synthetic data generation and handling using generative AI.

It offers the flexibility to address diverse use cases across healthcare, finance, and e-commerce industries, delivering high-quality synthetic data that mirrors real-world datasets for effective AI and ML model training.

YData Key Features

- Enhance ML performance: It generates additional data samples that preserve the original patterns and relationships, improving machine learning model training.

- Easy Integration: It is easily integrated with your existing data pipelines and machine learning workflows.

- Protects Privacy: It keeps your data safe and compliant with GDPR, CCPA, PIPEDA, and HIPAA by reducing risks tied to sensitive information.

5. Gretel Workflows

Gretel Workflows enables developers to build scheduled synthetic data pipelines that connect directly to data sources and AI/ML workflows. It easily integrates with cloud providers, data warehouses, and popular ML tools and frameworks.

Gretel Workflows offers two deployment options for its Data Plane, depending on your needs:

- Gretel Cloud: A fully managed service where Gretel handles all computing, automation, and scaling tasks in their cloud.

- Gretel Hybrid: Runs in your cloud environment using Kubernetes, supported on GCP, Azure, and AWS. It keeps your data within your cloud for better control, especially sensitive data.

Gretel Workflows offers a Synthetic Data for Everyone plan, providing 100K+ high-quality synthetic records or 2M+ PII detection records, along with 15 monthly workflow credits. For larger needs, a customizable Enterprise Plan is also available.

6. DoppelGANger

DoppelGANger is an open-source implementation of Generative Adversarial Networks (GAN) to generate high-quality, synthetic time-series data.

It is designed for privacy-preserving applications and enables users to simulate realistic data while maintaining the statistical properties of the original time series datasets with metadata. It is helpful for scenarios involving sensitive data, such as healthcare or finance, where using real data might risk privacy.

DoppelGANger is a valuable tool for researchers and developers looking to generate synthetic data for machine learning and simulation purposes. It offers several configuration options (Structural characterization, predictive modeling, algorithm comparison) for training and fine-tuning AI and ML models.

7. Synth

Synth is an open-source data generator that helps you create realistic data to your specifications, hide sensitive information, and develop test data for your applications. It can help you protect sensitive information and build datasets for machine learning.

It uses a declarative configuration language that allows you to specify your entire data model as code. It works with SQL and NoSQL databases and supports thousands of data types like credit card numbers and email addresses. It can import existing data and automatically create detailed, flexible models for your needs.

8. SDV.dev

SDV (Synthetic Data Vault) is a comprehensive platform focused on high-quality synthetic data generation for machine learning and data science. It includes a suite of libraries like CTGAN, DeepEcho, and TimeGAN, catering to diverse data types such as tabular, time-series, and sequential data.

SDV.dev is ideal for accelerating data-driven projects by providing safe, shareable datasets without compromising sensitive information.

9. Tofu

Tofu is an open-source Python library for generating synthetic data based on UK biobank data. Unlike the tools mentioned before that will help you generate any data based on your existing dataset, Tofu only generates data that resembles the UK biobank.

The UK Biobank is a research project involving 500,000 middle-aged participants from England, Scotland, and Wales. It collects detailed health-related data, including surveys, physical measurements, lab tests, activity tracking, imaging, genetic data, and long-term health outcomes.

Tofu generates random data that matches the structure of UK Biobank’s baseline dataset:

- For time fields: Random dates are created.

- For categorical variables: Random values are chosen from the UK Biobank data dictionary.

- For continuous variables: Random values are generated based on the reported distribution for that field.

10. Twinify

Twinify is an open-source Python library designed to generate and preserve privacy-preserving synthetic datasets. It uses advanced differential privacy techniques to generate synthetic data with identical statistical distributions.

It offers flexible data modeling with Bayesian inference and various synthetic data generation methods, making it adaptable for diverse use cases.

To use Twinify, you provide the real data as a CSV file, and it learns from the data to produce a model that can be used to generate synthetic data. It is ideal for researchers, analysts, and developers to analyze data while maintaining compliance with privacy regulations.

11. Datanamic

Datanamic Data Generator helps you quickly create realistic test data for your databases. It’s perfect for developers, DBAs, and testers who need sample data to check if their database-driven applications work properly.

Datanamic data generators are customizable and support most databases such as Oracle, MySQL, MySQL Server, MS Access, and PostgreSQL. It supports and ensures referential integrity in the generated data.

It includes over 40 ready-made data generators, such as lists, CSV files (e.g., addresses), dates, strings, and custom expressions to get started immediately. It generates data based on column characteristics such as email, name, and phone number.

12. Benerator

Benerator is a free and open-source tool for data obfuscation, generation, and migration for testing and training purposes. It helps create realistic test data that meets strict validity rules, making it ideal for unit, integration, and performance testing. Its ability to anonymize sensitive production data ensures privacy during testing or showcasing.

Its intuitive design works well for non-developers and supports data extraction, synthesis, and anonymization from various file formats.

What Is Synthetic Data?

Synthetic data is artificially generated data that statistically resembles the old dataset. It can be used with real data to support and improve AI models or as a substitute. Because it does not belong to any data subject and contains no personally identifying information or sensitive data such as social security numbers, it can be used as a privacy-protecting alternative to real production data.

Differences Between Real and Synthetic Data

Here’s a concise comparison of real data and synthetic data:

- How it’s made: Real data is collected from actual people, like through surveys or app usage, while synthetic data is created artificially but mimics real data.

- Privacy rules: Real data is subject to privacy laws—people must know what’s collected and why, with restrictions on use. Synthetic data doesn’t have these rules because it doesn’t link to real people or include personal details.

- Data availability: Real data depends on what users provide, while synthetic data can be created unlimitedly.

Why You Should Consider Using Synthetic Data

Here’s why you should consider incorporating synthetic data into your workflows:

- Cost-Effective Scaling: Synthetic data is cheaper to produce as it allows you to generate large datasets modeled on smaller existing ones. This provides your machine-learning models with ample data for training.

- Pre-Labeled and Cleaned: Synthetic data comes automatically labeled and cleaned, eliminating the need for manual preparation for machine learning or analytics tasks.

- Privacy-Safe: Since synthetic data doesn’t include personally identifiable information or belong to a data subject, it ensures compliance and can be shared without privacy concerns.

- Mitigate AI Bias: By ensuring adequate representation of minority classes, synthetic data helps in building fair and responsible AI systems.