ChatGPT is an AI-based service wrapped inside a chat interface. It helps you search the web, summarize text, generate images, provide coding assistance, brainstorm, translate, and more. It can keep conversational context, take feedback, and challenge inappropriate requests.

As of November 2024, ChatGPT is attracting over 200 million users per week. [1] Not only has it brought AI to the masses, but it has also set a benchmark for the application of natural language processing (NLP) for individuals and businesses. ChatGPT responds in a manner similar to human conversations, providing a natural tone and maintaining context.

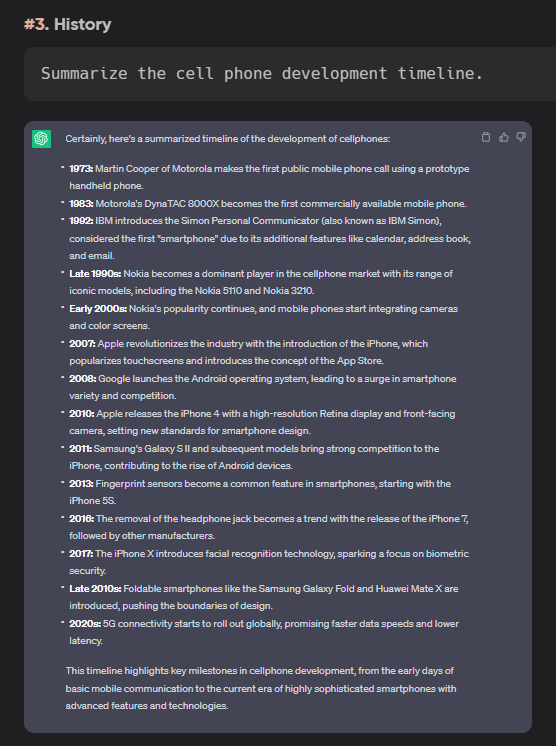

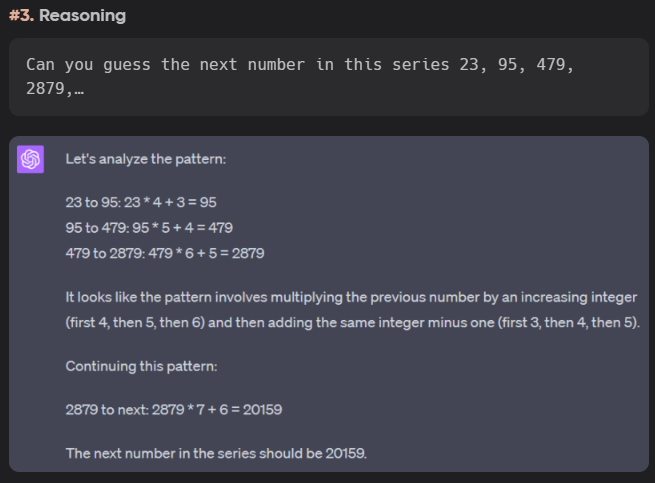

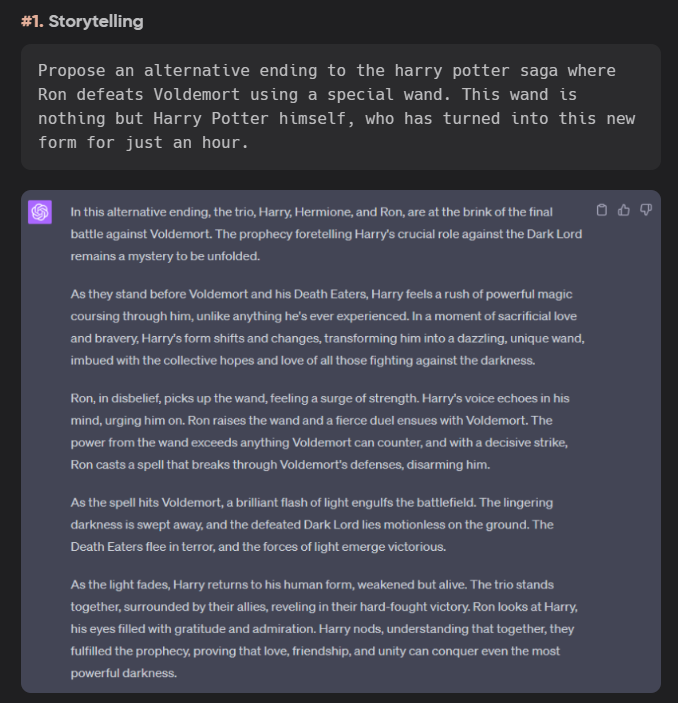

It has tons of use cases in just about every domain. It can talk history, analyze data, debug, write poetry, tell stories, solve puzzles, and much more, which are the typical things people do at their desk jobs and in their daily lives.

ChatGPT is a sure-shot productivity booster with a generous free version for lightweight users. In this post, I will discuss its evolution, development, key features, ethical complications, technical limitations, and more.

ChatGPT Versions

OpenAI has regularly updated its GPT models since the inception of GPT-1 in 2018. Here’s a brief mention of its popular GPT updates, along with their strengths and limitations.

GPT-1 (2018)

OpenAI introduced GPT-1 in June 2018 in their paper titled “Improving Language Understanding by Generative Pre-Training”. [2] The researchers used Transformer as their model architecture, which was released by Google in 2017. [3] The prime reason for choosing Transformer architecture was its excellent ability to perform routine tasks, such as translation, text analysis, and generation.

OpenAI “pre-trained” the model on a huge amount of unlabeled text from BookCorpus, a collection of about 7,000 unpublished or self-published books (~985 million words) spanning genres such as adventure and fantasy. The researchers then fine-tuned it with supervised tasks such as natural language inference, question answering, common sense reasoning, semantic similarity (paraphrase detection), and text classification.

The overall logic was to help make the model understand how people converse naturally and the interdependencies between different words in long sentences.

GPT-1 is not directly available to the general public. However, you can use it on third-party platforms, such as Hugging Face.

GPT-2 (2019)

On February 14, 2019, OpenAI announced GPT-2, a 1.5 billion-parameter model based on the Transformer architecture. [4] Developers trained GPT-2 on WebText, a dataset built from millions of webpages reached through outbound links that users of Reddit (a social media platform) shared in posts receiving at least 3 karma.

Karma is a Reddit parameter awarded to its users indicating their content popularity based on upvotes and downvotes.

These were a total of 45 million links, resulting in close to 8 million documents (~ 40 GB of text) after cleaning up data for duplicates and other discrepancies.

However, OpenAI didn’t release its trained model, citing potential malicious applications. Instead, it allowed researchers to try a much smaller model. [5] Users could also try the community-powered GPT-2 on Hugging Face.

This led to a public backlash, with critics arguing that OpenAI was not being “open” and that its fears were largely exaggerated and misplaced. Finally, OpenAI released its biggest, 1.5 billion-parameter GPT-2 in November 2019, with the source code and model weights. [6] Besides, people could also access the smaller 124 million, 355 million, and 774 million parameter GPT-2 models.

GPT-2 showed promising “zero-shot” performance (responding based entirely on pre-training, without task-specific fine-tuning or worked examples) across a range of NLP tasks, such as question answering, summarization, and translation.

However, as reported by media outlets (such as The Verge, The Register, etc.), its limitations would surface in longer conversations.[7] GPT-2 would lose focus and drift off the subject of interest. But that’s understandable since the GPT-2 context window was a mere 1024 tokens (~ 770 words).

Overall, GPT-2 was a tenfold (in terms of training data and parameters) upgrade over GPT-1. It paved the way for a generalist model development without explicit, task-oriented training.

GPT-3 (2020)

In 2020, OpenAI released GPT-3, a 175 billion-parameter model, along with seven smaller models, all using a modified version of the Transformer architecture used in GPT-2.

OpenAI trained GPT-3 on multiple datasets, including CommonCrawl (410 billion tokens ~ 570 GB of filtered plaintext), WebText2 (19 billion tokens), Books1 (12 billion tokens), Books2 (55 billion tokens), and Wikipedia (3 billion tokens), with varying weights.

GPT-3 demonstrated excellent performance on NLP tasks in zero-shot, one-shot, and few-shot settings, where the prompt contains no, one, or a handful of worked examples. In particular, it was so good at generating text that 80 US-based participants picked by OpenAI could tell whether a given text was written by a human or by GPT-3 with only 52% accuracy.
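The zero-, one-, and few-shot settings differ only in how many worked examples the prompt carries. Here is a minimal Python sketch of that idea. The `build_prompt` helper, the "Input/Output" layout, and the translation pairs are illustrative assumptions, not OpenAI's actual prompt format.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt with zero, one, or several worked examples."""
    lines = [task]
    for source, target in examples:
        # Each worked example shows the model the expected input/output pattern.
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
    # The final, unanswered item is what the model is asked to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


task = "Translate English to French."
demos = [("cheese", "fromage"), ("sea otter", "loutre de mer")]  # illustrative pairs

zero_shot = build_prompt(task, [], "peppermint")        # no examples
one_shot = build_prompt(task, demos[:1], "peppermint")  # one example
few_shot = build_prompt(task, demos, "peppermint")      # several examples

print(few_shot)
```

In all three cases, the model's weights stay untouched; only the text of the prompt changes.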

GPT-3 was as effective as fine-tuned and supervised models of that time, especially in answering questions and translation. It could also successfully do elementary math (addition, subtraction, and multiplication) with small numbers.

However, GPT-3 still fell short in tasks like sentence comparison and common sense physics. It would repeat itself semantically, become less coherent over longer passages (its context window was 2,048 tokens, ~1,536 words), and occasionally contradict itself.

Still, it was a significant upgrade over its predecessor, making even the biggest tech giants curious.

In September 2020, Microsoft acquired an exclusive license of GPT-3. [8] This move came on the heels of Microsoft’s $1 billion investment to become OpenAI’s exclusive cloud partner. [9] However, others could still access GPT-3 via OpenAI’s public-facing API. There are community-powered instances too (on Hugging Face) of every OpenAI model, including GPT-3.

ChatGPT (GPT-3.5) (2022)

November 2022 marked the first major rollout of AI technology into the hands of the general public. It was none other than OpenAI’s super bot, ChatGPT. It amassed 100 million active users just 2 months after launch, a record at that time (later broken by Meta’s social network, Threads).

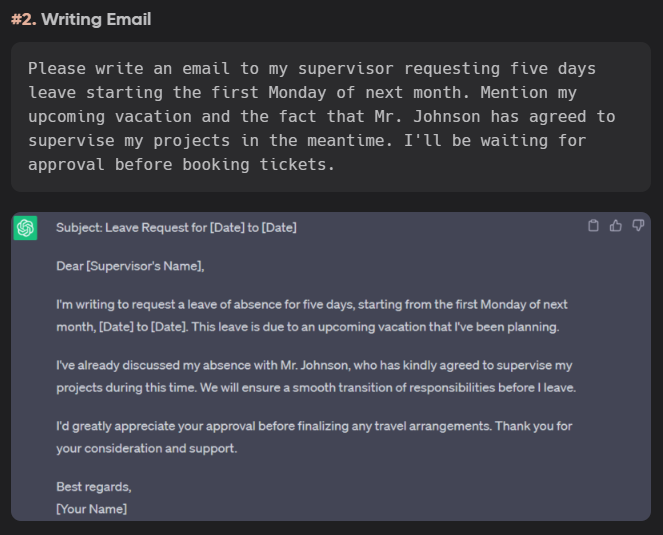

I remember using ChatGPT’s free and paid versions on various fronts, including emails, maths, poetry, reasoning, and more, for Geekflare.

Behind the scenes, OpenAI researchers fine-tuned GPT-3 with a well-known training technique: Reinforcement Learning from Human Feedback (RLHF). They called the resulting models InstructGPT.

The researchers compared outputs from the 1.3 billion-parameter InstructGPT to 175 billion-parameter GPT-3, finding the former less toxic, more truthful, much less hallucinating, and more aligned to user intent. [10]

ChatGPT (GPT-3.5) was a “sibling model to InstructGPT”. The major differentiator of the chat interface was the conversational way of obtaining results. ChatGPT could also take feedback, oppose indecent queries, and own up to its mistakes.

Despite the advances, ChatGPT would confidently respond with inaccuracies at times. Slight changes in input would lead to entirely different outputs. Moreover, ChatGPT answers were often longer than needed, and it overused specific phrases (e.g., “I’m a language model developed by OpenAI”).

GPT-4 (2023)

OpenAI released GPT-4 in March 2023. Based on the tried and tested Transformer architecture, GPT-4 was trained on publicly available internet information and third-party licensed data. OpenAI also fine-tuned it with RLHF.

In the technical report, OpenAI declined to share further details, such as model size, training dataset construction, hardware, training method and compute, etc., citing “competitive landscape and safety implications”. [11]

GPT-4 scored in the top 10% of test takers in a simulated bar exam, closing the gap with human-level performance. On the same exam, GPT-3.5 scored in the bottom 10%, showing the remarkable progress made by OpenAI engineers with this update.

OpenAI also put GPT-4 through many more assessments, including LSAT, SAT Math, GRE (quantitative, verbal, and writing), USABO, USNCO, AP Art History, AP Biology, Leetcode, etc. Barring a few subjects (such as AP English Literature and Composition), GPT-4 firmly stood its ground.

Besides, OpenAI evaluated GPT-4 on traditional benchmarks, such as MMLU, HellaSwag, HumanEval, and more. The results showed GPT-4 significantly outpacing existing language models and state-of-the-art (SOTA) models.

OpenAI offered GPT-4 within ChatGPT and via its API. The major distinction that users felt was the ability to input image prompts in addition to text. GPT-4 could read image contents, analyze, and respond to the combined (text + visual) input.

However, GPT-4 had limitations similar to those of its predecessors. It wasn’t 100% accurate (well, no AI is!), and would hallucinate once in a while. Likewise, it had difficulty with complex reasoning and was overly gullible, accepting false statements from users. It would confidently produce nonsensical results, and its responses clearly reflected bias from its training data.

Finally, like all the earlier versions, GPT-4 had a data cut-off, restricting its ability to output real-time information.

ChatGPT Plus

ChatGPT Plus isn’t a specific GPT version but an OpenAI paid subscription released in February 2023. It offers priority access to the leading GPT models with faster response times.

As of now, ChatGPT Plus retails for $20/month. It comes with a number of extra benefits, such as voice mode, creating custom GPTs, and higher message limits.

OpenAI also offers a Team subscription starting at $25/user/month. This has everything in the Plus subscription, coupled with higher message limits, a team workspace, an admin console, and greater privacy.

Businesses with even greater requirements (e.g., higher speed, longer context windows, and robust privacy) can opt for the ChatGPT Enterprise edition.

GPT-4 Turbo

In late 2023, OpenAI released an update to its fourth iteration of LLMs with GPT-4 Turbo. It featured a larger context window (128k tokens, approximately 96,000 words), the latest data (at that time), and was more affordable than GPT-4. It had an output token limit set to 4,096 (contrary to the 8,192 tokens limit of GPT-4), which helped keep the costs low.

GPT-4 Turbo also fared well in generating responses in specific data formats, such as XML and JSON.

The Turbo update could call multiple functions in a single message, which previously needed multiple calls. [12] Function calling is useful in connecting models with external applications, tools, and databases. It can have extensive applications in IoT, among other things. For instance, developers can integrate GPT-4 Turbo to let end users adjust the thermostat, lock doors, and turn on the lights with a single prompt.
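To make the smart-home scenario concrete, here is a minimal, offline Python sketch of how an application might route several function calls returned in one model message. The device functions, the `TOOLS` table, and the shape of `model_tool_calls` (a name plus JSON-encoded arguments) are all hypothetical stand-ins; a real integration would get the calls from the API response and talk to actual devices.

```python
import json


# Hypothetical smart-home functions; a real app would call device APIs here.
def set_thermostat(celsius):
    return f"thermostat set to {celsius} C"


def lock_door(door):
    return f"{door} door locked"


def set_lights(room, on):
    return f"{room} lights {'on' if on else 'off'}"


# Maps the function names the model may request to local Python callables.
TOOLS = {
    "set_thermostat": set_thermostat,
    "lock_door": lock_door,
    "set_lights": set_lights,
}

# Assumed shape of one model reply that carries several function calls at once.
model_tool_calls = [
    {"name": "set_thermostat", "arguments": '{"celsius": 21}'},
    {"name": "lock_door", "arguments": '{"door": "front"}'},
    {"name": "set_lights", "arguments": '{"room": "hallway", "on": true}'},
]


def dispatch(tool_calls):
    """Run every requested function and collect the results in order."""
    results = []
    for call in tool_calls:
        handler = TOOLS.get(call["name"])
        if handler is None:
            # Never execute a function the application did not register.
            results.append(f"unknown tool: {call['name']}")
            continue
        kwargs = json.loads(call["arguments"])  # arguments arrive as a JSON string
        results.append(handler(**kwargs))
    return results


results = dispatch(model_tool_calls)
print(results)
```

The model only proposes which functions to run and with what arguments; the application stays in charge of executing them, which is why unknown names are rejected rather than run.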

This update also allowed users to set a “seed” parameter and get consistent outputs. Although it doesn’t equate to having the exact same output for the inputs, it still offers some degree of determinacy, which was previously unavailable.
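The intuition behind a seed can be shown with ordinary pseudo-random sampling. The sketch below is only an analogy: the vocabulary, weights, and `sample_words` helper are made up for illustration and say nothing about how OpenAI implements the parameter. Locally, a fixed seed reproduces the draws exactly, whereas the hosted models only promise mostly consistent outputs.

```python
import random

# Toy vocabulary and probabilities (illustrative values only).
VOCAB = ["sunny", "cloudy", "rainy", "windy"]
WEIGHTS = [0.4, 0.3, 0.2, 0.1]


def sample_words(seed, n=5):
    """Draw n words at random; the seed fully determines the draws."""
    rng = random.Random(seed)  # private generator, unaffected by other code
    return rng.choices(VOCAB, weights=WEIGHTS, k=n)


run_a = sample_words(seed=42)
run_b = sample_words(seed=42)  # same seed as run_a
run_c = sample_words(seed=7)   # different seed, usually a different sequence

print(run_a == run_b)  # the two same-seed runs match
```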

GPT-4o

Launched in May 2024, GPT-4o is a highly versatile OpenAI offering that can accept multimodal (text, image, audio, and video) input and respond in text, image, and audio. It’s also faster and has a 50% cheaper API. [13]

While existing models could also process audio, latencies averaged 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4). This was because 3 distinct jobs (audio-to-text, text-to-text, and text-to-audio) ran in the background with separate models. Besides taking longer, this pipeline stripped the results of any personality (such as tone, emotion, and expressions).

GPT-4o, on the contrary, featured a single model with native multimodal capabilities, resulting in smarter and quicker results.

OpenAI trained GPT-4o with filtered training data and refined its behavior post-training, making it safer on multiple fronts, including cybersecurity, persuasion, CBRN (Chemical, Biological, Radiological, and Nuclear), and model autonomy.

It can retain up to 128K token context and has access to data till October 2023. Besides, GPT-4o is internet-connected, allowing it to fetch real-time information.

GPT-4o was released to free users as well. However, paid subscriptions enjoy a 5x higher message cap. Its audio and video abilities weren’t released to all at its launch, but OpenAI promised to make them available gradually, starting with a specific group of testers.

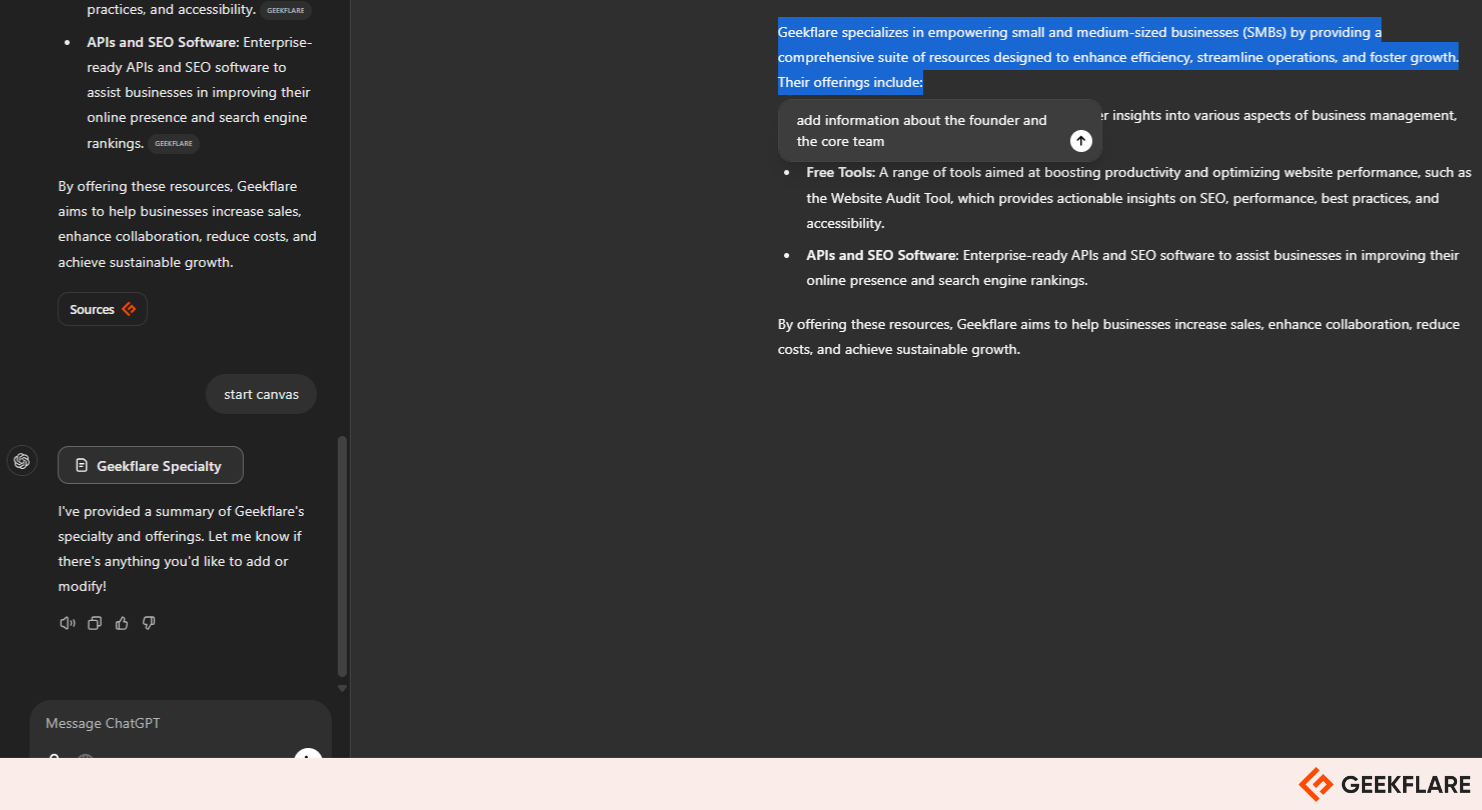

GPT-4o with Canvas

OpenAI introduced GPT-4o with Canvas in October 2024. This update was aimed at users who rely on ChatGPT for writing and coding.

Canvas opens a two-panel interface, allowing collaboration between the user and ChatGPT itself. One can highlight sections to give pin-point feedback and get the GPT to refine that specific portion.

The Canvas panel works much like a word editor. It lets users continue manually or prompt ChatGPT (on the left) to copy-paste content on the right. GPT-4o with Canvas also shows document history and enables users to go back to previous versions.

This version contains a writing shortcut menu to adjust the length and tone, add emojis, and suggest edits. For coding, the shortcut menu has options for code review, adding logs, comments, fixing bugs, and translation to other programming languages.

Canvas opens up automatically, or you can prompt “use canvas” or “start canvas” to open the specialty interface.

GPT-o1 (o1-Preview & o1-Mini)

OpenAI launched the GPT-o1 series of models, including o1-mini and o1-preview, in September 2024. These models undergo an internal thinking process before responding.

GPT-o1 preview is configured for advanced problems in the science and math disciplines. It scored 83% on a qualifying exam for the International Mathematics Olympiad (IMO), compared to 13% for GPT-4o. Likewise, it ranked in the 89th percentile in Codeforces competitions.

o1-Mini, a miniature version of GPT-o1, is pre-trained for advanced reasoning in STEM (science, technology, engineering, and mathematics) and coding, making it faster, more accurate, and cheaper.

However, o1-mini’s knowledge of anything non-STEM is fairly limited, which OpenAI has pledged to improve in future versions.

As of this writing, both of these new models lack the basic abilities, including web search and file upload, of other GPT models.

We have tested the latest ChatGPT versions (4, 4o, 4o-mini, o1-preview, and o1-mini) on multiple aspects, which can help you to make an informed choice.

Who Created ChatGPT?

ChatGPT was created by OpenAI, a San Francisco-based AI company founded in 2015. It’s on a mission to develop Artificial General Intelligence (AGI), which can outperform humans at most “economically valuable work”. It started as a non-profit but later formed a for-profit subsidiary to fund its cost-intensive operations after failing to reach $1 billion in donations.

Other than its renowned GPT models, OpenAI has a few other AI products to showcase, including DALL-E (text-to-image) and Sora (text-to-video).

What Is ChatGPT Used For?

In the following sections, I’ve explained ChatGPT’s multiple use cases, such as content creation, debugging, research, data analysis, etc.

- Content: ChatGPT is a text-based AI at its core. This makes it best for content-heavy tasks like text generation, paraphrasing, tone adjustment, summarization, and grammar checks. Currently, it’s widely used in the publishing industry, e-commerce, social media, and online advertisement.

- Research: ChatGPT can almost instantly investigate trends or patterns and draw insights from a huge pile of text/images. This saves the long hours humans would otherwise need to analyze a similar amount of information.

- Coding Assistance: Advanced versions of ChatGPT help programmers prepare first drafts, debug, and translate their code into other programming languages. Beginners can also explore new tools, libraries, and ask ChatGPT to explain code snippets.

- Brainstorming: ChatGPT is backed by a vast corpus of internet data, which is valuable if you want ideas about product names, features, blog post titles, social media memes, and more.

- Customer Support: One of the best use cases of ChatGPT is integrating it with the frontend to provide customer support. Create a GPT agent with a custom database and let ChatGPT answer end-consumer queries, offering quick and reasonably accurate responses.

- Translation: Businesses use ChatGPT to translate text into multiple languages. Currently, it supports over 60 languages, making it a cost-efficient translator for bulk work.

Is ChatGPT Free?

ChatGPT has a free version with limited access and features for beginners. While it suffices for tasks like content creation, summarization, grammar checks, and basic reasoning, it offers limited access to demanding tasks such as data analysis, web browsing, and image creation.

On the other hand, its paid versions (ChatGPT Plus, Team, and Enterprise) feature advanced voice mode, higher message limits, access to the latest GPT models, and quick response times.

Key Features of ChatGPT

I’ve discussed 7 key features of ChatGPT below, including aspects like multilingual support, multitasking abilities, and knowledge-based interactions.

- Natural Language Understanding and Generation: ChatGPT excels at understanding human language. It has been trained on human language to predict the next word in an ongoing sentence (a technique called autoregression). This makes ChatGPT an AI tool for everyone, irrespective of their linguistic expertise.

- Multilingual Support: It can converse and translate in 60+ languages. Users can also change the interface language in its settings for a native chatbot experience.

- Adaptive Learning: ChatGPT can tailor its output’s linguistic and logical difficulty to match the user level. Businesses can integrate ChatGPT into their adaptive learning tools to let it personalize the experience for every candidate, based on individual performance and engagement levels.

- Knowledge-based Interactions: ChatGPT is a generalist that can respond to a wide range of domains, including education, history, creative arts, marketing, programming, tourism, poetry, and more.

- Integration-friendly: ChatGPT has an API with official libraries in Python, .NET, and JavaScript. Microsoft also maintains libraries compatible with OpenAI API and Azure OpenAI services in Java, JavaScript, Go, and .NET. Besides, developers benefit from community-maintained API libraries for various programming languages and frameworks, including C++, Flutter, Kotlin, PHP, Ruby, Scala, Swift, Unity, and Unreal Engine.

- Multitasking Capabilities: ChatGPT is not an AI built just for text generation. It has multiple abilities ranging from data analysis, text to image creation, audio processing, code debugging, ideation, web search, and more. Certain users also bank on ChatGPT for storytelling, composing music, and poetry, helping them with ideation and inspiration.

- Continuous Improvement: OpenAI understands the evolving AI landscape and has an extremely talented bunch of individuals trying to continuously enhance ChatGPT’s skills. This is reflected in its multiple updates since its launch, making it suitable for complex reasoning, image creation, audio/video processing, coding, and advanced data analysis.
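The next-word prediction (autoregression) mentioned in the first feature above can be illustrated with a toy model. The sketch below counts which word follows which in a tiny made-up corpus, then generates text one word at a time by always picking the most frequent follower. It is a deliberately simple stand-in: ChatGPT uses a large Transformer network over tokens, not bigram counts.

```python
from collections import Counter, defaultdict

# Tiny illustrative corpus; real models train on billions of tokens.
corpus = "the cat sat on the mat . the cat sat on the sofa ."


def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_words=6):
    """Autoregressive loop: each new word is predicted from the text so far."""
    output = [start]
    for _ in range(max_words - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation for this word
        next_word = followers.most_common(1)[0][0]  # greedy choice
        output.append(next_word)  # the prediction becomes part of the input
    return output


model = train_bigrams(corpus)
print(" ".join(generate(model, "the")))
```

The key point is the loop: every predicted word is appended to the running text and fed back in to predict the one after it.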

What Are the Advantages of ChatGPT?

Due to its versatility, ChatGPT is beneficial for a multitude of industries and individuals alike. The following are a few core qualities that help ChatGPT achieve this feat.

Improved User Engagement

ChatGPT can offer personalized and instant responses to user queries. It’s similar to having a 24/7 dedicated assistant for every single user, which is otherwise rarely possible. Besides customer support, ChatGPT can provide product recommendations and collect feedback.

The best ChatGPT attribute is that it is highly interactive and “talks” in everyday conversational language. I mean, you seldom notice that you’re talking to an AI after all. Plus, a business can tailor the outputs for various difficulty levels and tones, making the end-user feel comfortable.

Overall, ChatGPT has set extremely high standards of what can be achieved with automated personalization and user engagement.

Accessibility for Non-technical Users

Prior to generative AI, using any form of technology required expertise. While it still isn’t fully integrated with the present generation of applications and gadgets, the future is about simplified technological access for all.

Imagine a smart home that can open the lock and turn on the lights from within the ChatGPT interface. Or remember routines based on personal preference, which you can quickly adjust with just a few words.

I agree; some degree of automation is already available. Nonetheless, it needs brand-specific apps and tools to function, which causes friction and isn’t a good option for non-tech-savvy users. Plus, ChatGPT can keep context, which is entirely missing in current user experiences.

Tools like ChatGPT can make things seamless across a ton of daily chores—all with a plain, simple (text or voice) prompt.

Cost-effective Solution for Many Businesses

Although ChatGPT (and the like) are not in a position to completely replace top-level human talent, they are quick and effective for a variety of entry- to mid-level tasks.

For instance, tasks like preparing essay drafts, code outlines, low-level translation, grammar corrections, basic tone optimization, creating basic images, and more, are better left to generative AI than wasting human hours.

Besides, generative AI costs a fraction of what hiring humans does and gets the job done faster.

What Are the Limitations of ChatGPT?

ChatGPT is a revolutionary AI tool. However, it wouldn’t be wise to assume it’s perfect. Below, I’ve mentioned a few aspects ChatGPT still struggles with.

Potential for Generating Incorrect or Misleading Information

ChatGPT is powerful and a sure-shot productivity booster, but it can also be used in entirely different and unintended ways.

A few users even try to make ChatGPT free of the built-in OpenAI safety mechanisms. For instance, there’s a widely popular DAN (Do Anything Now) Prompt that can push ChatGPT to generate responses that it otherwise won’t. [14]

But even if it’s used with all the righteous intent, AI hallucination is a common phenomenon that results in nonsensical outputs.

Dependence on Training Data Quality

ChatGPT lacks a mind and conscience of its own.

All its amazing creations are still limited to its training data and internet information. This makes ChatGPT a potentially dangerous tool in the hands of someone who overrelies on its abilities.

Moreover, irrespective of the source authenticity and information refinements, training datasets still suffer from biases. This is sometimes reflected in ChatGPT responses, which have been caught in the past showing signs of religious and political favoritism. [15]

Limited Context Retention in Extended Conversations

OpenAI is extending ChatGPT’s context window with almost every update. What started with a few hundred tokens of context in legacy GPT models is now at 128K tokens (over 200 pages). Now, ChatGPT can remember a book’s worth of information in a single conversation.

But there is a limit to context, however large. So, users must remain aware of this restriction.
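To see what this limit means in practice, here is a rough Python sketch of the bookkeeping involved. It assumes about 0.75 words per token, the ratio implied by this post's own figures (128K tokens is roughly 96,000 words). The `estimate_tokens` and `trim_history` helpers are hypothetical, and a real tokenizer would give different counts.

```python
WORDS_PER_TOKEN = 0.75  # assumption: rough ratio implied by the figures in this post


def estimate_tokens(text):
    """Very rough token estimate from a whitespace word count."""
    words = len(text.split())
    return max(1, round(words / WORDS_PER_TOKEN))


def trim_history(messages, budget_tokens):
    """Keep the most recent messages whose estimated size fits the budget."""
    kept, used = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget_tokens:
            break  # this and all older messages fall out of context
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "first question about pricing",
    "a long answer " * 10,
    "follow-up question",
    "short reply",
]
recent = trim_history(history, budget_tokens=10)
print(recent)
print(round(128_000 * WORDS_PER_TOKEN))  # words that fit in a 128K-token window
```

Once the budget is exhausted, the oldest messages are the first to be dropped, which is why details from early in a very long conversation can go missing.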

What Are the Best ChatGPT Alternatives?

ChatGPT isn’t the only contender in this generative AI race; there are multiple other tools you can use.

Claude

Anthropic’s Claude is my favorite ChatGPT alternative. Personally, I prefer Claude over its ultra-popular competitor for preparing summaries and reasoning.

It has a beautiful interface and supports text, images, and document processing. Users can select from a handful of presets (Normal, Concise, Explanatory, and Formal). Besides, you can upload text to create custom styles.

What it currently lacks is internet access, which means Claude’s responses can’t have real-time information. Also, it can’t create images or take audio/video as inputs as of yet.

Claude has native apps for Windows, Mac, iOS, and Android and a Free (forever) plan for beginners. Similar to ChatGPT, Claude has paid subscriptions, offering greater usage limits, priority access to its latest AI, advanced features, and more.

Copilot

Copilot is Microsoft’s contribution to the generative AI space. It uses the latest GPT models from OpenAI, thanks to their partnership (Microsoft holds a 49% ownership stake in OpenAI [16]).

Unlike ChatGPT, Copilot is heavily integrated with Microsoft’s own search engine, Bing. Consequently, it’s tailored more to searching the web (via Bing) than to anything else. This makes Copilot more of an AI search engine than a ChatGPT competitor.

Still, it’s good at what it does. I can personally vouch for its efficiency from my own experience of using Copilot since its launch (when it was known as Bing Chat and had a vastly different user interface).

In addition to text, Copilot can create and process images and accept audio inputs.

The best part about Copilot is its Pro subscription, which allows you to use Copilot within Microsoft 365 apps, such as Word, PowerPoint, and Excel.

Gemini

Google’s Gemini is a generative AI chatbot, which is great for people still relying on the plain old Google search. It can process text, images, and audio, and replies likewise.

Gemini outputs 3 drafts with every response, and users can still modify them for length and tone.

It can also double-check a response against Google Search, highlighting all the validated information (with source websites).

Gemini is free to use. However, it also has a power-packed paid version (Gemini Advanced) with a larger context window, more storage, the latest AI models, and more.

What Are the Ethical Concerns Associated with ChatGPT?

The AI fraternity is abuzz about the ethical implications of using generative AI tools like ChatGPT. I will discuss the major concerns, including plagiarism, privacy, and AI replacing the human workforce.

Plagiarism

ChatGPT responses aren’t unique. It draws from the data and patterns therein, and its outputs—more or less—match the existing information.

While it isn’t plain simple plagiarism, it would be wrong to say it isn’t paraphrasing. In its present form, ChatGPT scrapes a few websites and rehashes the details in natural conversational language.

OpenAI has been sued by well-known publishers, including The New York Times and Daily News, for scraping their data without permission.[17] There are many more lawsuits in this regard, with a few still ongoing. However, OpenAI maintains its stance that taking publicly available data is alright, even if it makes a paid product out of that.

The law will take its course. But I suggest looking elsewhere for 100% unique content, as no AI tool has such capabilities yet.

Replacing Human Workforce

Entry-level businesses don’t generally have the budget to hire experts for all their tasks. Cutting costs on anything non-core is common, and creative work (copywriting, graphic design, etc.) often falls into that category.

AI tools such as ChatGPT, Midjourney, and InVideo AI gave cash-strapped founders an exciting opportunity to avoid investing resources in creatives and instead get the “not-so-important” work done with AI.

Though this tilts finances in their favor, it deprives beginners of the exposure they need to become experts.

Moreover, it’s not only with the creatives. Generative AI tools are improving in aspects like coding, data analysis, and UX design, among other computer-based tasks.

However, despite the initial wave of panic, there’s a better understanding of AI’s context in the market today. Although AI tools are evolving to deliver better results, they need the right users to provide accurate outputs. To put it simply, AI tools haven’t reached a stage where they can manage tasks without some level of human intervention.

Take Google’s stance on AI-generated content, for example. It says that although it doesn’t mind content that’s been automated with AI, any AI-generated content produced primarily to manipulate search rankings will be penalized. [18]

In fact, Google wants content that shows personalization, experience, and expertise on the part of the writer—aspects that are missing in generative AI tools like ChatGPT. So, while AI can be used to automate certain processes, AI replacing humans may not be as soon as we thought.

Nonetheless, knowing how to automate your work with AI tools is an essential skill for today’s dynamic job market. While AI may not replace humans, humans who know how to use AI can replace humans who don’t.

Data Privacy

Generative chatbots like ChatGPT breathe on data. This is evident from the fact that ChatGPT lacks an “incognito mode”.

Data sharing is turned on by default (except for Teams and Enterprise editions). You’ll have to find your way to toggle the opt-out button, something most OpenAI customers will simply forget. I’ve made sure to opt out of sharing my data. If you’re concerned about your data as well, I’d recommend looking into your account’s ChatGPT settings immediately.

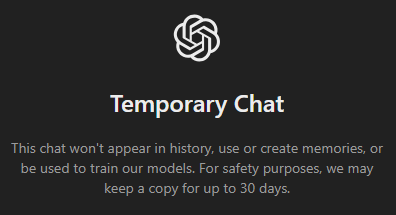

The other privacy option is “Temporary” Chat. I was happy to discover this functionality, only to notice that OpenAI still retains my data for 30 days.

Whatever OpenAI may call it, this isn’t user data privacy in its purest form.

If it’s any consolation, other AI chatbots also collect your data in some way or another.

Misinformation

ChatGPT’s rapid pace allows scamsters to misguide audiences with genuine-looking content.

For instance, it was previously easy to spot grammatical errors or sloppy work in frauds such as fake websites or deceptive emails.

Now, with generative AI, anyone can write an eye-catching ad copy or a professional-looking email, create realistic images/audio/video, design websites, and do anything we see online.

This can flood social media with piles of coordinated content aimed at influencing key national events (such as elections and wars), public figures, or private individuals (including their relationships and social lives).

Overall, these AI developments have made it challenging for the average user to discern originality from forgery.

No Accountability

ChatGPT won’t tell you when it’s wrong or unsure of its creations. It’s programmed to reply in a friendly and confident manner.

And though OpenAI has a disclaimer (saying their AI isn’t trustworthy) placed at the bottom of ChatGPT UI, it’s a company’s way of shrugging off responsibility and lawsuits.

In the end, as a user, you’ll have to be very careful, knowing there is no one else to point fingers at. After all, ChatGPT could be very wrong and is just a tool without an ounce of regret, emotion, or obligation.

Frequently Asked Questions (FAQs)

Is ChatGPT safe to use?

OpenAI has developed GPTs with in-built safety mechanisms to output responsibly and reject harmful premises. Still, no AI can be 100% safe, and human oversight is critically important.

Does OpenAI use my ChatGPT data for training?

Except for Team and Enterprise subscriptions, OpenAI uses ChatGPT data by default to train its AI models. However, users (free and paid) can turn data sharing off in the app settings. Head over to Settings > Data Controls and turn off the toggle saying “Improve the model for everyone”.

Can ChatGPT help with job applications?

Absolutely! ChatGPT can help candidates optimize their resumes, check grammar and other mistakes, write cover letters, and suggest enhancements based on the job profile. Besides, ChatGPT can also list job-specific skills and mention possible interview questions to prepare for.

Should I use ChatGPT for schoolwork?

I would not recommend ChatGPT for schoolwork since the outputs can be inaccurate and plagiarized, negatively impacting your academic integrity. It’s best to stick to your school teacher, a smart schoolmate, or a personal tutor for thorough learning.

Is using ChatGPT output considered plagiarism?

Yes. ChatGPT results are simply rehashed versions of its training or internet data. Logically, that data belongs to an individual or a group. Therefore, using ChatGPT data as your own is considered plagiarism.

Can ChatGPT give wrong answers?

Yes. In fact, OpenAI has put this disclaimer at the bottom of its interface: “ChatGPT can make mistakes. Check important info”. Therefore, it’s imperative to check ChatGPT outputs for inaccuracies.

Can ChatGPT refuse to answer a prompt?

You bet! OpenAI has integrated ChatGPT with safety mechanisms, which allow it to reject prompts leading to harmful content, misinformation, or privacy violations.

Can ChatGPT-generated text be detected?

There are many ChatGPT detectors available online, with various detection accuracies. OpenAI also launched its own AI text detector, only to discontinue it later, pointing to its low accuracy. For the same reason, I would not recommend any AI text detector since finding a reliable product is tough.

References

Citations

1. ChatGPT / OpenAI Statistics: How Many People Use ChatGPT? – Backlinko

2. Improving Language Understanding by Generative Pre-Training – OpenAI

3. Attention is All You Need – arxiv, Cornell University

4. Language Models are Unsupervised Multitask Learners – OpenAI

5. OpenAI/GPT-2 – GitHub

6. GPT-2: 1.5B Release – OpenAI

7. OpenAI has published the text-generating AI it said was too dangerous to share – The Verge

8. OpenAI is giving Microsoft exclusive access to its GPT-3 language model – MIT Technology Review

9. Microsoft invests in and partners with OpenAI to support us building beneficial AGI – OpenAI

10. Aligning language models to follow instructions – OpenAI

11. GPT-4 Technical Report – OpenAI

12. Function Calling – OpenAI

13. Hello GPT-4o – OpenAI

14. alexisvalentino/Chatgpt-DAN – GitHub

15. Is ChatGPT More Biased Than You? – HDSR

16. Microsoft’s Strategic Stake in OpenAI Unlocks Unique Investment Avenues – Yahoo! Finance

17. 8 Daily Newspapers Sue OpenAI and Microsoft Over A.I. – The New York Times

18. Google Search’s guidance about AI-generated content – Google Search Central