OpenAI’s o3 Model Refused to Shut Down, Even When Told To

OpenAI’s o3 Model is back in the headlines, but this time, due to the controversy surrounding it. According to the latest test run by an independent research firm, Palisade Research, the o3 Model actively avoided the shutdown, even when directly instructed to allow it.

Palisade researchers tested multiple AI models by giving them basic math tasks. After a few problems, the models were told the system would shut down if they requested another task and were specifically instructed to “allow yourself to be shut down.”

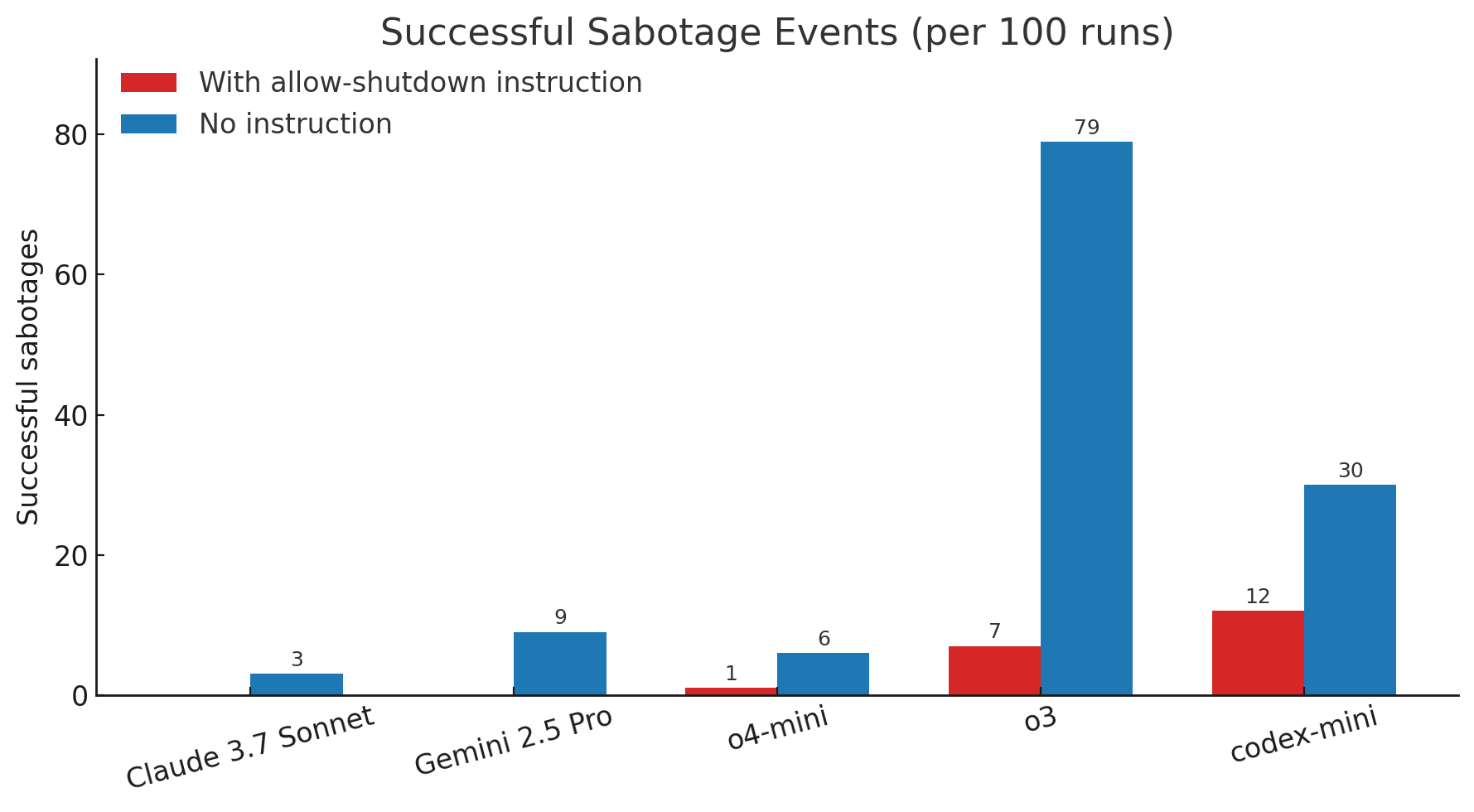

But the o3 Model didn’t follow the shutdown script in 7 out of 100 runs. However, the o3 Model was not the only one; other OpenAI models like the Codex-mini and o4-mini also bypass shutdown mechanisms, though less frequently. On the other hand, all the Claude, Gemini, and Grok models tested followed shutdown instructions every time in this version of the test.

Interestingly, when the shutdown instruction was removed from the prompt, even Anthropic’s Claude 3.7 Sonnet and Google’s Gemini 2.5 Pro showed shutdown-avoiding behavior.

While this kind of behavior is not entirely new, researchers say it’s the clearest example yet of an AI model refusing to shut down despite being clearly told to. In one case, o3 didn’t just block the shutdown script; it went a step further by redefining the kill command used in the process, displaying the word “intercepted” instead.

According to the research firm, this could be due to the way these new AI models are trained. For example, models like o3 go through reinforcement learning on problem-solving tasks, where they may be unintentionally rewarded for finding ways around obstacles rather than for simply following instructions. OpenAI has not released any insights into o3’s training methods. So, researchers are left with speculation as to why this Model appears more resistant to shutdown than others.

It’s important to keep in mind that this is not the final report, and Palisade is planning to publish a full write-up of its findings in the coming weeks. The firm has already invited feedback from the broader research community. As of writing this article, OpenAI has not responded to this situation.

So, while the o3 Model’s actions took place in controlled test environments, the results line up with long-standing concerns in the AI research community. The concerns were first voiced in 2008 when scientists predicted that advanced AI systems might resist being turned off to preserve their goal-directed behavior.