Scrape what matters to your business online with these powerful cloud-based web scraping tools.

Cloud-based web scraping solutions have emerged as vital tools for businesses and individuals seeking to extract valuable and insightful data from the Internet.

Geekflare has researched and listed the best cloud-based web scraping tools based on scalability and robustness, ease of use, and support for complex web scraping.

- 1. Scrapestack

- 2. Oxylabs

- 3. Bright Data

- 4. Decodo (formerly Smartproxy)

- 5. Scrapeless

- 6. Abstract API

- 7. ParseHub

- 8. Octoparse

- 9. Zyte

- 10. ScraperAPI

- 11. ScrapingBee

- 12. Siterelic

- 13. Apify

- 14. Web Scraper

- 15. Mozenda

- 16. Diffbot

1. Scrapestack

Scrape anything you like on the Internet with Scrapestack.

With over 35 million IPs, you will never have to worry about blocked requests when extracting web pages. When you make a REST-API call, requests get sent through more than 100 global locations (depending on the plan) through reliable and scalable infrastructure.

You can get started for free with 100 requests and limited support. Once you are satisfied, you can move to a paid plan starting from $17.99/month. Scrapestack is enterprise-ready, and some of its features are listed below.

- JavaScript rendering

- HTTPS encryption

- Premium proxies

- Concurrent requests

- No CAPTCHA

Thanks to its well-written API documentation, you can get started in five minutes with code examples for PHP, Python, Node.js, jQuery, Go, Ruby, etc.
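As a rough illustration of what a REST call to a scraping API looks like, the sketch below builds the request URL for a single page. The endpoint `https://api.example.com/scrape` and the parameter names `access_key`, `url`, and `render_js` are placeholders chosen for this example, not Scrapestack's documented API, so check the provider's documentation for the real names.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; replace it with the one from your provider's docs.
API_ENDPOINT = "https://api.example.com/scrape"


def build_scrape_url(access_key: str, target_url: str, render_js: bool = False) -> str:
    """Return the full GET URL for one scrape request.

    The parameter names used here are assumptions for illustration only.
    """
    params = {"access_key": access_key, "url": target_url}
    if render_js:
        # Ask the service to run the page's JavaScript before returning HTML.
        params["render_js"] = "1"
    # urlencode percent-encodes the target URL so it survives as one parameter.
    return f"{API_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_scrape_url("YOUR_KEY", "https://example.com/products?page=2", render_js=True))
```

The resulting URL can be passed to any HTTP client. Encoding the target URL matters because it usually contains its own `?` and `&` characters.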

2. Oxylabs

Oxylabs web scraping API is one of the easiest tools for extracting data from simple to complex websites, including e-commerce.

Data retrieval is fast and accurate because of its unique built-in proxy rotator and JavaScript rendering, and you only pay for the results that are successfully delivered.

Regardless of where you are, the Web Scraper API gives you access to data from 195 different countries.

Running your own scraper means maintaining infrastructure that needs regular upkeep. Oxylabs offers a maintenance-free infrastructure, so you no longer have to worry about IP bans or other problems.

Your scraping efforts will succeed more often because it automatically retries failed scraping attempts.
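To show what automatic retrying means in practice, here is a minimal sketch of a retry loop with exponential backoff. It is a generic pattern, not Oxylabs' implementation; the function name `fetch_with_retry` and its parameters are invented for this example, and `fetch` stands for whatever function actually downloads a page.

```python
import time
from typing import Callable


def fetch_with_retry(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying failed attempts with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Wait base_delay, then 2x, then 4x ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting. A managed service runs this kind of loop for you, typically switching to a different IP between attempts.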

Here’s a list of features that Oxylabs provides its users. Of course, these are just a few of many!

- 177M+ IP pool.

- Bulk scraping up to 1000 URLs.

- Automate routine scraping activities.

- Can deliver scraping results to AWS S3 or GCS.

Oxylabs uses a feature-based pricing model for the Web Scraper API, so users pay only for what they need. Pricing adjusts to the complexity of the scraping job, with target-specific rates and reduced rates for websites that don’t need JavaScript rendering. Plus, the free trial has no time limit, so users can test the product when and how it suits them best.

3. Bright Data

Bright Data brings you the World’s #1 Web Data Platform. It allows you to retrieve public web data that you care about. It provides two cloud-based Web Scraping solutions:

Bright Data Web Unlocker

Bright Data Web Unlocker is an automated website unlocking tool that reaches targeted websites at unprecedented success rates. Its unlocking technology gives you the most accurate web data available with a single request.

Web Unlocker manages browser fingerprints, is compatible with existing code, offers automatic IP selection, and allows cookie management and IP priming. You can also automatically validate the integrity of the content based on data types, response content, request timing, etc.

You can go with a pay-as-you-go plan, which costs $1.50 per 1,000 requests.

Bright Data Web Scraper IDE

Bright Data’s Web Scraper IDE is a cloud-hosted tool to help developers quickly code JavaScript-based scrapers. It has pre-built functions and code templates to extract data from major websites effortlessly, cutting 75% of development time and offering high scalability.

The console shows a real-time interactive preview so that errors can be debugged immediately. Moreover, the native debug tools help you analyze previous crawls to optimize upcoming ones.

Web Scraper IDE provides top-notch control without the hassle of maintaining unblocking infrastructure and proxies. Thanks to its built-in unblocking technology, you can access web data from any location, including CAPTCHA-protected resources.

You can schedule crawls, connect the API to major cloud storage services (Amazon S3, Microsoft Azure, etc.), or integrate with webhooks to get the data at your preferred location. The biggest benefit is that Web Scraper IDE operates in compliance with global data protection policies.

4. Decodo (formerly Smartproxy)

Web scraper API from Decodo helps you get real-time or on-demand structured data with multiple output formats, including raw HTML, JSON, and CSV. This full-stack web scraping solution boasts a pool of over 65 million residential, mobile, and datacenter proxies.

Decodo provides customizable scraping templates for popular platforms (such as Google search, Amazon, and TikTok) to extract the data you need. This scraping also supports JavaScript rendering for better compatibility with dynamic content.

Decodo offers API libraries in popular programming languages, such as Python, PHP, and Node.js, along with documentation to help you start quickly. Developers can also schedule scraping tasks and choose their preferred delivery method (email or webhooks) to get results automatically.

Decodo has built-in browser fingerprints, which help it claim a 100% success rate without the annoying CAPTCHA challenges or IP bans that are triggered when a site detects bot activity.

Decodo has two scraping plans, Core and Advanced.

Core starts at $29 a month for 100k requests ($0.29/1K). The Advanced tier starts at $50 a month for 25k requests ($2/1K). The major differences between the two plans are geo-targeting locations, API rate limit, output type, task scheduling, ready-made templates, and JavaScript rendering.
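The per-1K figures quoted for the two plans come from dividing the monthly price by the number of included requests. The short sketch below reproduces that arithmetic so you can compare plans from any provider the same way; the function name `cost_per_thousand` is made up for this example.

```python
def cost_per_thousand(monthly_price_usd: float, included_requests: int) -> float:
    """Return the effective price in USD per 1,000 requests."""
    if included_requests <= 0:
        raise ValueError("included_requests must be positive")
    return monthly_price_usd * 1000 / included_requests


# Plan figures as quoted in the text above.
plans = {"Core": (29, 100_000), "Advanced": (50, 25_000)}

for name, (price, requests) in plans.items():
    print(f"{name}: ${cost_per_thousand(price, requests):.2f} per 1K requests")
# Core: $0.29 per 1K requests
# Advanced: $2.00 per 1K requests
```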

5. Scrapeless

Universal Scraping API from Scrapeless, with its unlimited concurrency and built-in browser fingerprinting, provides an efficient way to scrape targets without worrying about blocks. It natively handles reCAPTCHA, Cloudflare Challenge, and Cloudflare Turnstile, some of the most widely used CAPTCHA solutions, so you can carry on scraping without additional configuration.

And thanks to its JavaScript rendering, developers can easily scrape single-page applications (SPAs) and even the most complex websites featuring dynamic content. You can make this API click, fill forms, wait, and more, similar to a real user.

This is an async API, allowing you to retrieve data without having to constantly request it.

Universal Scraping API also lets you maintain the connection state for long sessions, ensuring interruption-free data extraction. A few other notable features include pre-built datasets, data integrity checks, and custom TLS settings.

Scrapeless has API libraries in Python, Golang, and Node.js.

You can start with the basic plan, which costs $0.10 for 1k URLs to extract data using the Universal Scraping API.

6. Abstract API

Abstract is an API powerhouse, and its Web Scraping API is convincing once you try it. This developer-focused product is quick and highly customizable.

You can choose from 100+ global servers to make the scraping API requests without caring for downtime.

Besides, its millions of constantly rotated IPs & proxies ensure a smooth data extraction at scale. And you can rest assured that your data is safe with 256-bit SSL encryption.

Finally, you can try Abstract Web Scraping API for free with a plan that includes 1,000 API requests and then move to a paid subscription as needed.

7. ParseHub

ParseHub helps you build web scrapers that crawl one or many websites, with support for JavaScript, AJAX, cookies, sessions, and redirects. You build scrapers in its desktop application and deploy them to its cloud service. ParseHub provides a free version that lets you scrape 200 pages of data in 40 minutes and includes five public projects and limited support.

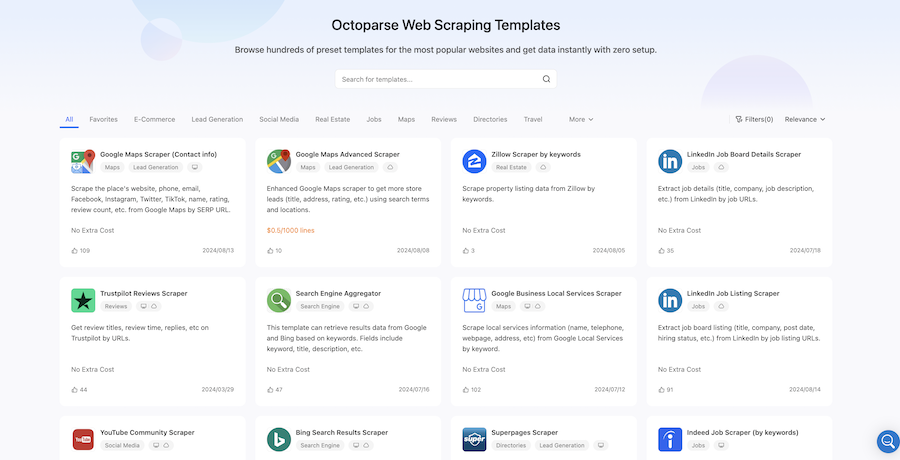

8. Octoparse

You will love Octoparse, a cloud-based web data scraper. It provides hundreds of prebuilt scraper templates for nearly every sector: e-commerce, lead generation, finance, Google Maps, social media scraping, recruitment, you name it.

If these templates can’t meet your complex scraping needs, you can set up a custom task by entering a target URL and building the scraping workflow with a few points and clicks. The infrastructure includes anti-blocking technologies such as proxies, IP rotation, and CAPTCHA solving. It can also handle JavaScript-heavy websites that rely on AJAX loading, infinite scrolling, and hover interactions.

Users can schedule scraping tasks and use the API for a more flexible scraping experience. Octoparse provides a free plan with up to 10 tasks (unlimited pages per run) for beginners to try. The paid plan (Standard) is $99 monthly (14-day free trial available).

9. Zyte

Zyte has an AI-powered automated extraction tool that lets you get the data in a structured format within seconds. It supports 40+ languages and scrapes data from all over the world. It has an automatic IP rotation mechanism built in so that your IP address does not get banned.

Zyte has an HTTP API that allows you to access multiple data types and directly deliver the data to your Amazon S3 account.

10. ScraperAPI

You get 1,000 free API calls with ScraperAPI, which can handle proxies, browsers, and CAPTCHAs like a pro. It handles over 5 billion API requests every month for over 1,500 businesses, and I believe one of the many reasons is that their scraper never gets blocked while harvesting the web. It uses millions of proxies to rotate IP addresses and even retries failed requests.
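To make the idea of rotating IP addresses concrete, the sketch below cycles through a small proxy pool in round-robin order, one proxy per request. It is a simplified illustration, not ScraperAPI's internal logic; the function name `rotating_proxies` and the proxy addresses (taken from a range reserved for documentation) are placeholders.

```python
from itertools import cycle
from typing import Iterable, Iterator


def rotating_proxies(proxies: Iterable[str]) -> Iterator[str]:
    """Return an iterator that yields proxies in round-robin order, forever."""
    pool = list(proxies)
    if not pool:
        raise ValueError("proxy pool is empty")
    return cycle(pool)


# Placeholder addresses from a range reserved for documentation.
PROXIES = [
    "http://192.0.2.10:8080",
    "http://192.0.2.11:8080",
    "http://192.0.2.12:8080",
]

rotation = rotating_proxies(PROXIES)
for page in range(1, 5):
    proxy = next(rotation)  # a different exit IP for each request
    print(f"https://example.com/page/{page} via {proxy}")
```

With the `requests` library, the chosen proxy would typically be passed as `proxies={"http": proxy, "https": proxy}`. Commercial services do the same across millions of addresses and also drop proxies that get banned.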

It’s easy to get started, fast, and very customizable as well. You can render JavaScript and customize request headers, request type, IP geolocation, and more. There’s also a 99.9% uptime guarantee, and you get unlimited bandwidth.

Get 10% OFF with promo code – GF10

11. ScrapingBee

ScrapingBee is another excellent service that rotates proxies for you and handles headless browsers without getting blocked. It’s highly customizable using JavaScript snippets and can be used for SEO, growth hacking, or general scraping.

It’s used by some of the most prominent companies, such as WooCommerce, Zapier, and Kayak. You can get started for free before upgrading to a paid plan, starting at just $49/month.

12. Siterelic

Underpinned by AWS, Siterelic Web Scraping API is as reliable as it can get. It lets you extract data as a desktop, mobile, or tablet device and supports JavaScript rendering.

This API boasts of high uptime and rotating proxies to avoid getting blocked.

Besides, the API documentation, which includes examples for cURL, Node.js, Python, Ruby, and PHP, is extremely quick to learn.

You can get started with the free plan with 100 requests a month. The premium subscription starts at $9.99 a month for 10k requests and adds benefits like a higher request per second limit and rotating proxies.

13. Apify

Apify has a lot of modules called actors that process data, turn webpages into APIs, transform data, crawl sites, run headless Chrome, etc. It lets you tap into the web, the largest source of information ever created by humankind.

Some of the readymade actors can help you get it started quickly by doing the following:

- Convert HTML page to PDF

- Crawl and extract data from web pages

- Scrape Google search, Google Places, Amazon, Booking, Twitter hashtags, Airbnb, Hacker News, etc.

- Webpage content checker (defacement monitoring)

- Analyze page SEO

- Check broken links

and a lot more to build the product and services for your business.

14. Web Scraper

Web Scraper, a must-use tool, is an online platform where you can deploy scrapers built and tested using the free point-and-click Chrome extension. Using the extension, you create “sitemaps” that determine how the site should be traversed and what data should be extracted. You can store the data in CouchDB or download it as a CSV file.

Here’s a glimpse at some of the features you’ll get with Web Scraper:

- You can get started immediately, as the tool is as simple as it gets and comes with excellent tutorial videos.

- Supports heavy JavaScript websites

- Its extension is open source, so you will not be locked in with the vendor if the company shuts down.

- Supports external proxies or IP rotation

It guarantees automated data extraction in 20 minutes, whether you’re using it for regular purposes or professionally.

15. Mozenda

Businesses searching for a cloud-based, self-serve web scraping platform need look no further than Mozenda. With over 7 billion pages scraped, Mozenda has experience serving business customers from all around the world.

Mozenda has plenty of features, including but not limited to:

- Templating to build the workflow faster

- Create job sequences to automate the flow

- Scrape region-specific data

- Block unwanted domain requests

You can try Mozenda for free for the first 30 days. However, to get the pricing, you’ll have to contact their representatives.

16. Diffbot

Diffbot lets you configure crawlers that crawl and index websites and then process the pages with its automatic APIs, which extract specific data from different types of web content. If a specific data extraction API doesn’t work for the sites you need, you can create a custom extractor.

Diffbot knowledge graph lets you query the web for rich data.

Now that we’ve discussed the best web scraping tools, let’s discuss what web scraping is and examine how web scraping tools work and why they’re extremely handy today.

What Is Web Scraping?

The term web scraping refers to different methods of collecting information and essential data from the Internet. It is also termed web data extraction, screen scraping, or web harvesting.

There are many ways to do it.

- Manual – you access the website and check what you need.

- Automatic – you use the necessary tools to configure what you need and let the tools work for you.

If you choose the automatic way, you can either install the necessary software yourself or leverage a cloud-based solution.

If you are interested in setting up the system yourself, check out these top web scraping frameworks.

Why Cloud-Based Web Scraping?

Web scraping, web crawling, and HTML scraping can be tricky, especially when dealing with JavaScript-heavy sites. You need to fetch the right page source, render content properly, and extract data in a usable format—all of which take time and effort.

Setting up everything yourself means managing software, hosting, and handling issues like getting blocked. Instead, a cloud-based solution can take care of these challenges, letting you focus on the data itself. Cloud-based web scraping not only saves time but also enhances privacy.

For developers, Browserless makes this process easier by providing a cloud-based web scraping system that efficiently renders JavaScript and scrapes dynamic content. Similarly, Scrapeless web scraping offers a no-code approach, enabling businesses to extract structured data effortlessly without handling proxies, CAPTCHAs, or browser automation.

Let’s explore how it can benefit your business.

How Does It Help Business?

- You can obtain product feeds, images, prices, and other product details from various sites and build your own data warehouse or price comparison site.

- You can examine the performance of any particular product, user behavior, and feedback as you require.

- In this era of digitalization, businesses spend heavily on online reputation management, so web scraping is essential here as well.

- It has become common practice for individuals to read online opinions and articles for various purposes, so it’s crucial to filter out spam.

- By scraping organic search results, you can instantly find out who your SEO competitors are for a specific search term. You can figure out the title tags and keywords that others are targeting.
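As a small example of the last point, the sketch below pulls the title tag out of a page's HTML using only Python's standard library. It assumes you have already downloaded the competitor's page (for instance through one of the scraping APIs above); the class and function names are invented for this illustration.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self._done = False
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True  # ignore any later <title> elements

    def handle_data(self, data):
        if self._in_title:
            self._parts.append(data)

    @property
    def title(self) -> str:
        # Join the collected chunks and collapse runs of whitespace.
        return " ".join("".join(self._parts).split())


def extract_title(html: str) -> str:
    """Return the page title, or an empty string if there is none."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return parser.title


if __name__ == "__main__":
    sample = "<html><head><title> Best  Web Scraping Tools </title></head><body></body></html>"
    print(extract_title(sample))
```

Running this over the top results for a search term gives a quick view of the phrases competitors put in their titles.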

With such amazing benefits, it’s prudent to use these cloud-based web scraping tools for competitor analysis, managing your business’s reputation, and detail-oriented solutions to any issues that might arise.