The software development world never stands still, and finding better ways to deliver high-quality applications is more complex than ever before. Client expectations and requirements keep rising as clients see what’s possible with today’s cloud platforms.

Enter event-driven architecture (EDA), an architecture pattern that has gained significant popularity in recent years. Embracing the principles of loose coupling, scalability, and real-time data processing, event-driven architecture has become a game-changer in software delivery.

We will delve into that rabbit hole, exploring EDA’s benefits and how to implement it in software delivery. Along the way, we show how this approach leads to more flexible and resilient systems, in which developers can build applications that handle massive workloads with ease.

Understanding Event-Driven Architecture

Event-driven architecture is a software design pattern built around producing, routing, and processing events within a system. The system’s components communicate and interact exclusively through those events.

Instead of making direct synchronous calls, components exchange events asynchronously. This gives them a significant degree of freedom and independence from one another.

Let’s explore the practical aspects of implementing event-driven architecture and discuss some patterns that developers can use to build robust systems.

The Power of Loose Coupling in Event-Driven Architecture

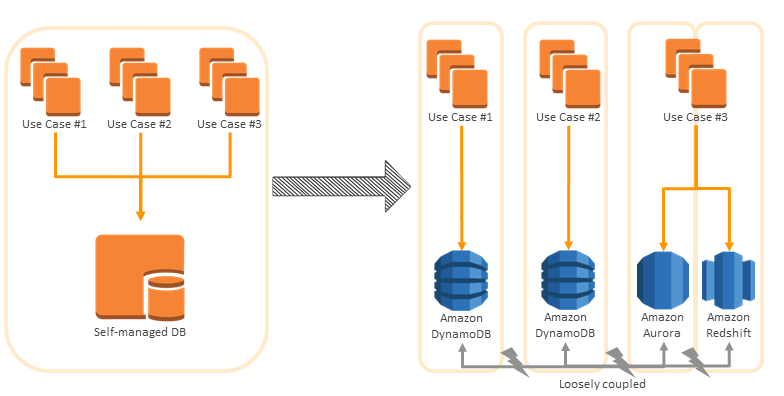

One key advantage of event-driven architecture is its ability to decouple components within a system. When you design events as the primary means of communication, the events themselves become the interface between components.

Individual components become independent entities that can operate autonomously and, most of the time, asynchronously. This property enhances the modularity of the whole system and also makes maintenance and scaling easier. In such an architecture, it’s much easier to add, modify, or replace components without impacting the rest of the system.

This architecture therefore promotes loose coupling between components. One component does not need to know how another one works. Simply put, there are no direct dependencies between them.

This gives you much more flexibility in design, because you can modify a small part of the system without having to consider the impact on the rest of it.

Loose coupling is such a fundamental aspect that it is worth exploring in more detail what exactly it means inside an event-driven architecture.

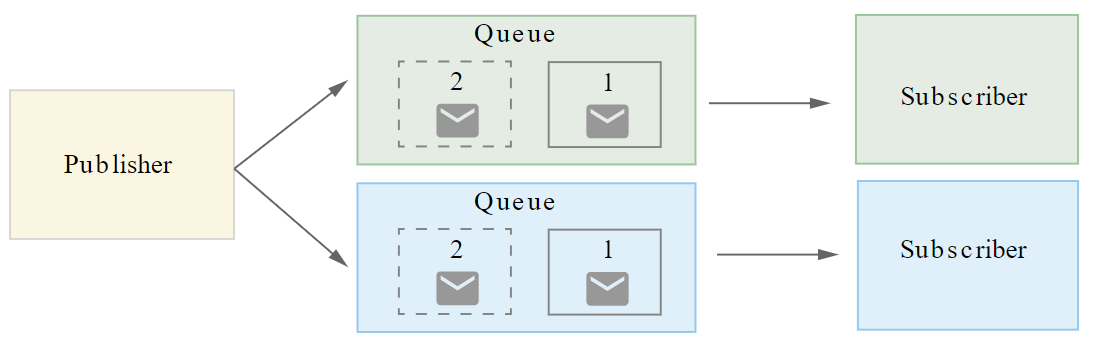

#1. Event Publishing And Subscribing

Components usually publish events to a message broker or event bus (you can understand this as a buffer full of asynchronous messages) without needing to know which specific components will consume those events. This loose coupling principle enables components to operate independently, as publishers and subscribers are strictly decoupled.

For example, a product catalog component can publish a “new product added” event. Other components, such as inventory search, can subscribe to that event and react to it, for instance by indexing the new product so users can find it.
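To make the publish/subscribe idea concrete, here is a minimal in-process sketch in Python. The `EventBus` class, the `product_added` event name, and the payload fields are hypothetical. A real system would use a message broker and deliver events asynchronously, whereas this toy version calls the handlers directly.

```python
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Toy in-process stand-in for a message broker (hypothetical)."""

    def __init__(self) -> None:
        # Maps each event type to the handlers subscribed to it.
        self._subscribers: dict[str, list[Handler]] = {}

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> None:
        # The publisher does not know who, if anyone, receives the event.
        for handler in self._subscribers.get(event_type, []):
            handler(payload)


bus = EventBus()
search_index: set[str] = set()

# The search component subscribes; the catalog never references it.
bus.subscribe("product_added", lambda event: search_index.add(event["sku"]))

# The catalog component only publishes.
bus.publish("product_added", {"sku": "SKU-123", "name": "Desk lamp"})
print(search_index)  # {'SKU-123'}
```

Publishing an event type that has no subscribers is simply a no-op. This is the property that lets publishers ignore who is listening.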

#2. Event Schema Evolution

As the system evolves, the structure of events may need to change. Loose coupling gives you the freedom to evolve event schemas over time without impacting other components. Nothing you have already implemented is set in stone; it can be enhanced over time.

What’s more, components that consume events can be designed to be resilient to changes in event structure, which ensures backward compatibility. This matters because you don’t want to break existing flows, yet you still want to keep as many components as possible open to change. It enables the system to evolve and adapt to changing requirements without causing disruptions.
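One common way to build such a resilient consumer is the "tolerant reader" approach. The sketch below is written against assumed schemas. Version 1 of a hypothetical `product_added` event used a `title` field. Version 2 renamed it to `name` and added an optional `currency`. The consumer accepts both versions and ignores fields it does not know.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class ProductAdded:
    sku: str
    name: str
    currency: str


def parse_product_added(raw: dict[str, Any]) -> ProductAdded:
    """Tolerant reader for a hypothetical `product_added` event.

    Version 1 had a `title` field; version 2 renamed it to `name` and added
    an optional `currency`. Unknown extra fields are ignored, not rejected.
    """
    version = raw.get("schema_version", 1)  # v1 events carried no version
    name = raw["title"] if version == 1 else raw["name"]
    currency = raw.get("currency", "USD")  # assumed default for older events
    return ProductAdded(sku=raw["sku"], name=name, currency=currency)


old = parse_product_added({"sku": "A1", "title": "Desk lamp"})
new = parse_product_added({"schema_version": 2, "sku": "A1", "name": "Desk lamp",
                           "currency": "EUR", "color": "black"})
print(old)  # ProductAdded(sku='A1', name='Desk lamp', currency='USD')
print(new)  # ProductAdded(sku='A1', name='Desk lamp', currency='EUR')
```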

#3. Dynamic Component Addition

Event-driven architecture makes it easy to introduce new components when you need additional processing as the platform evolves.

For example, a fraud detection component can be added to the system at any point to analyze purchase events and identify potentially suspicious activities.

This is how loose coupling enables the system to easily extend its functionality without tightly coupling new components with existing ones.
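The following sketch subscribes a fraud detector after the system is already running. It uses a hypothetical `purchase_made` event and an arbitrary threshold of 1,000. Neither the publishing code nor the existing order component has to change.

```python
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]
subscribers: dict[str, list[Handler]] = {}


def subscribe(event_type: str, handler: Handler) -> None:
    subscribers.setdefault(event_type, []).append(handler)


def publish(event_type: str, payload: dict[str, Any]) -> None:
    for handler in subscribers.get(event_type, []):
        handler(payload)


# Existing component: records purchases for order processing.
orders: list[str] = []
subscribe("purchase_made", lambda event: orders.append(event["order_id"]))
publish("purchase_made", {"order_id": "o-1", "amount": 40.0})

# Later, a fraud detector is plugged in without touching existing code.
flagged: list[str] = []


def detect_fraud(event: dict[str, Any]) -> None:
    # Hypothetical rule: flag unusually large purchases.
    if event["amount"] > 1_000:
        flagged.append(event["order_id"])


subscribe("purchase_made", detect_fraud)
publish("purchase_made", {"order_id": "o-2", "amount": 5_000.0})

print(orders)   # ['o-1', 'o-2']
print(flagged)  # ['o-2']
```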

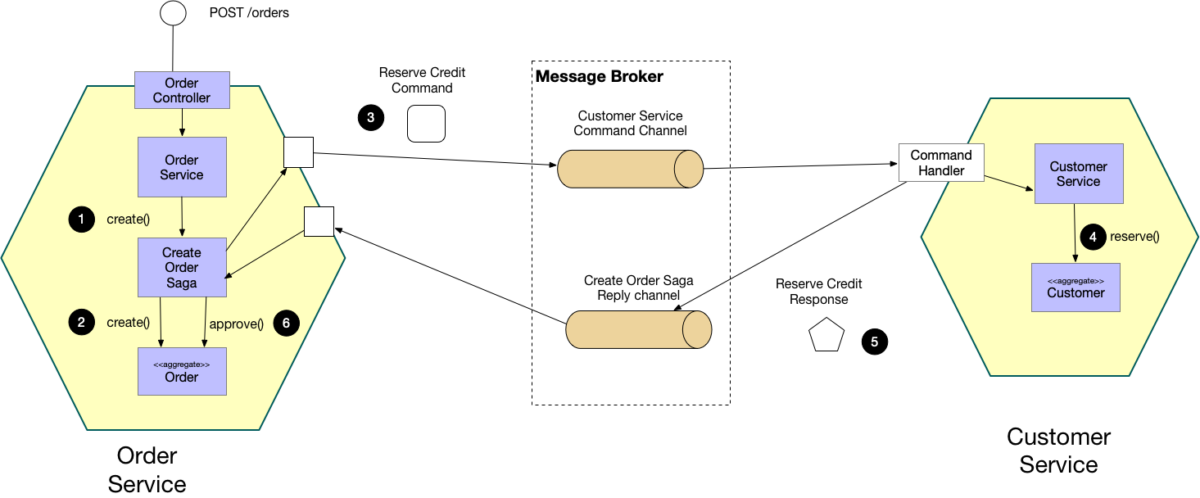

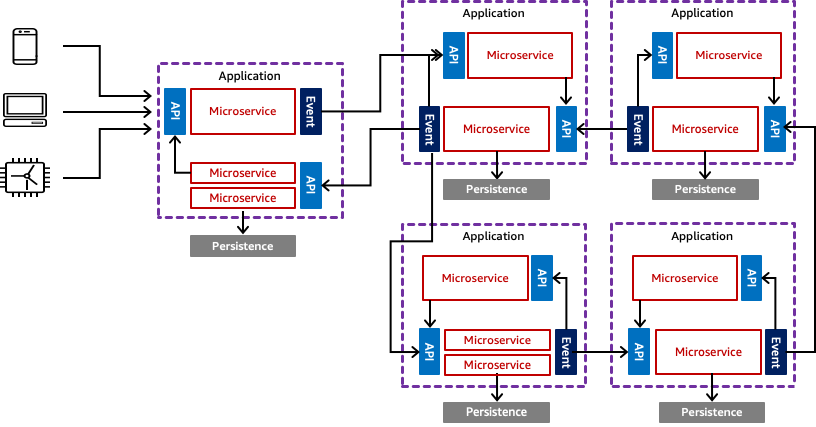

#4. Event-Driven Microservices

Each microservice in the platform can be designed as an independent component that communicates through events. Here, loose coupling enables microservices to be developed, deployed, and scaled independently of each other.

For example, a user management microservice can publish events related to user registration or authentication, and other microservices can subscribe to those events to perform their functional tasks inside the application once the user has been successfully authenticated.
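Microservices may be written in different languages, so they typically agree on a serialized event format rather than on shared code. The minimal sketch below assumes a JSON envelope with hypothetical `event_type`, `payload`, `event_id`, and `occurred_at` fields.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class EventEnvelope:
    """Hypothetical envelope that every service agrees to produce and consume."""

    event_type: str
    payload: dict[str, Any]
    # A unique ID can let consumers recognize duplicate deliveries.
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    # ISO 8601 timestamp in UTC, readable from any language.
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(data: str) -> "EventEnvelope":
        return EventEnvelope(**json.loads(data))


# The user management service publishes a plain JSON message...
sent = EventEnvelope("user_registered", {"user_id": 42, "email": "user@example.com"})
message = sent.to_json()

# ...which a subscriber (possibly written in another language) parses back.
received = EventEnvelope.from_json(message)
print(received.event_type, received.payload["user_id"])  # user_registered 42
```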

#5. Event Choreography

Components can collaborate through event choreography. There is no central coordinator: each component reacts to the events it subscribes to and emits new ones. Together, these events define the end-to-end processes inside the platform.

This enables components to interact indirectly through events without needing to know the specifics of each other’s implementation. It is a huge advantage, because you are not even tied to a specific programming language. Each component can use whatever language suits it, as long as it understands the event format.

For example, in an order processing system, events such as “order placed,” “payment received,” and “shipment dispatched” trigger different components to perform their tasks. These events link the components together into a single workflow.
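Here is a minimal choreography sketch for that order flow. The `publish` and `deliver_all` functions stand in for a message broker, and the service functions are hypothetical. No component holds the overall workflow; each one only knows the event it reacts to and the event it emits.

```python
from collections import defaultdict, deque
from typing import Callable

subscribers: defaultdict[str, list[Callable[[str], None]]] = defaultdict(list)
pending: deque[tuple[str, str]] = deque()
log: list[str] = []


def publish(event_type: str, order_id: str) -> None:
    pending.append((event_type, order_id))


def deliver_all() -> None:
    # Plays the role of the broker: delivers queued events to subscribers.
    while pending:
        event_type, order_id = pending.popleft()
        for handler in subscribers[event_type]:
            handler(order_id)


# Each service knows only its input event and the event it emits.
def payment_service(order_id: str) -> None:
    log.append(f"payment captured for {order_id}")
    publish("payment_received", order_id)


def shipping_service(order_id: str) -> None:
    log.append(f"parcel sent for {order_id}")
    publish("shipment_dispatched", order_id)


def notification_service(order_id: str) -> None:
    log.append(f"customer notified for {order_id}")


subscribers["order_placed"].append(payment_service)
subscribers["payment_received"].append(shipping_service)
subscribers["shipment_dispatched"].append(notification_service)

publish("order_placed", "o-7")
deliver_all()
print(log)
```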

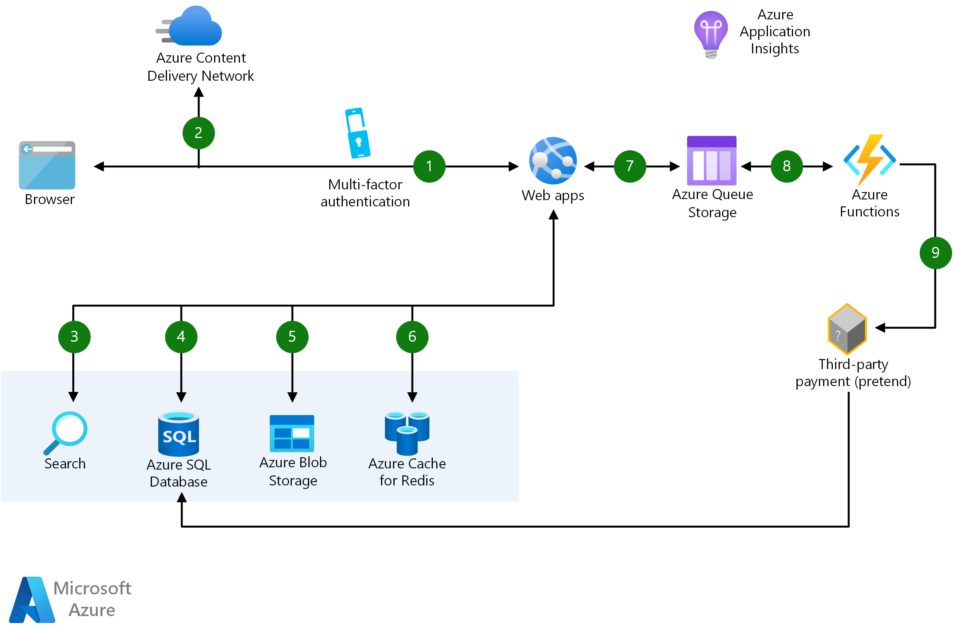

Achieving Scalability in Event-Driven Architecture

Scalability is another area where event-driven architecture shines. With its inherent ability to handle a large number of events concurrently, you can scale applications up or down based on actual demand.

By distributing the workload across multiple components, event-driven systems can handle spikes in traffic and maintain performance even under heavy loads.

This is crucial for applications that experience unpredictable traffic spikes or must process unpredictable volumes of data. Scalable systems can accommodate such varying workloads without sacrificing performance.

So, what exactly does it mean to scale components effectively? The following key principles help you implement scalability within your architecture.

#1. Horizontal Scaling

Horizontal scaling means adding more instances of the same component and distributing the workload among them. This increases the parallelism of your existing processes. Because each instance runs with its own system resources, the instances do not compete for the same ones.

In event-driven architecture, this can be achieved by deploying multiple instances of event-consuming components, such as event processors or event handlers. By distributing the workload across these instances, the system can handle a higher volume of events concurrently.

Horizontal scaling is particularly effective when combined with load-balancing techniques to evenly distribute events among the instances. This will prevent one of the instances from becoming a bottleneck for the rest.
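Round-robin is one simple load-balancing strategy. The sketch below hands events to instances in turn, using a hypothetical `ConsumerInstance` class. Real brokers often balance differently, for example by assigning whole partitions to instances rather than individual events.

```python
from itertools import cycle


class ConsumerInstance:
    """One hypothetical instance of an event-consuming component."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.processed: list[int] = []

    def handle(self, event: int) -> None:
        self.processed.append(event)


def distribute(events: list[int], instances: list[ConsumerInstance]) -> None:
    # Round-robin: each instance receives events in turn, so no single
    # instance becomes the bottleneck.
    for event, instance in zip(events, cycle(instances)):
        instance.handle(event)


instances = [ConsumerInstance(f"worker-{i}") for i in range(3)]
distribute(list(range(9)), instances)
for instance in instances:
    print(instance.name, instance.processed)
# worker-0 [0, 3, 6]
# worker-1 [1, 4, 7]
# worker-2 [2, 5, 8]
```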

#2. Asynchronous Processing

Event-driven architecture, by default, supports asynchronous processing, which is essential for scalability. Asynchronous processing enables components to handle events independently without blocking or waiting for each event to complete.

Because components do not block while waiting on each other, asynchronous processing greatly reduces the risk of deadlocks and resource contention, although it does not eliminate them entirely. Instead of waiting, components keep their resources busy and can handle a larger number of events simultaneously. Your architecture must, however, be designed around asynchronous communication, which is not always easy.
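The Python sketch below uses `asyncio` to process several events concurrently. The `handle` coroutine and its 0.1-second `asyncio.sleep` are stand-ins for real I/O-bound work, such as database or HTTP calls.

```python
import asyncio
import time


async def handle(event_id: int) -> str:
    # Stand-in for I/O-bound work such as a database or HTTP call.
    await asyncio.sleep(0.1)
    return f"event {event_id} done"


async def main() -> list[str]:
    # gather() runs all handlers concurrently; none waits for another.
    return await asyncio.gather(*(handle(i) for i in range(5)))


start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results)
print(f"took {elapsed:.2f}s")
```

Because the handlers wait concurrently, the whole batch takes roughly as long as one handler rather than five times as long. `gather` still returns results in the order the coroutines were passed.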

#3. Event Partitioning

With event partitioning, you can divide events into logical partitions based on certain criteria, such as event type, source, or destination.

Each partition can be processed independently by separate components or instances, because the partitions are distinct from a data perspective. This again supports parallel processing and improves scalability.

Event partitioning can be achieved through techniques like event sharding or event topic partitioning. Which one fits depends mostly on the specific requirements of your system.
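A common form of topic partitioning routes each event by a key. All events with the same key land in the same partition and keep their relative order. The sketch below assumes customer IDs as keys and four partitions. It uses `zlib.crc32` as a stable hash, since Python’s built-in `hash()` is randomized per process for strings.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map an event key to a partition using a stable hash.

    zlib.crc32 gives the same value in every process, unlike the built-in
    hash() for strings, so a key always routes to the same partition.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


events = [
    ("customer-1", "order_placed"),
    ("customer-2", "order_placed"),
    ("customer-1", "payment_received"),
]
partitions: dict[int, list[tuple[str, str]]] = {}
for key, event_type in events:
    partitions.setdefault(partition_for(key, 4), []).append((key, event_type))

for partition, items in sorted(partitions.items()):
    print(partition, items)
```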

#4. Distributed Message Brokers

Message brokers play a central role in event-driven architecture, as they facilitate event communication between components. To maintain scalability, it is crucial to use distributed message brokers that can handle high throughput.

Distributed message brokers spread events across multiple nodes. This enables horizontal scaling and helps the system keep up with incoming events.
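The sketch below is a simplified model of how a distributed broker can spread load: it assigns partitions to broker nodes round-robin. The node names are hypothetical. Real brokers also replicate partitions and rebalance incrementally rather than recomputing every assignment.

```python
def assign_partitions(num_partitions: int, nodes: list[str]) -> dict[str, list[int]]:
    """Spread partitions across broker nodes round-robin (simplified model)."""
    assignment: dict[str, list[int]] = {node: [] for node in nodes}
    for partition in range(num_partitions):
        assignment[nodes[partition % len(nodes)]].append(partition)
    return assignment


print(assign_partitions(6, ["broker-a", "broker-b", "broker-c"]))
# {'broker-a': [0, 3], 'broker-b': [1, 4], 'broker-c': [2, 5]}

# With a fourth node, each node carries a smaller share of the partitions.
print(assign_partitions(6, ["broker-a", "broker-b", "broker-c", "broker-d"]))
```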

#5. Monitoring And Auto-Scaling

Finally, implementing monitoring and auto-scaling mechanisms is essential so that your system can dynamically adjust its resources based on the current workload. Without monitoring, you have no visibility into when scaling is necessary.

By monitoring key metrics such as event queue length, processing time, and resource utilization, you can let the system automatically scale up or down by adding or removing instances as needed.
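A scaling decision based on queue length can be as simple as the sketch below. It assumes each instance can work through a fixed backlog (`events_per_instance`) and clamps the result to configured bounds. The function name, parameter names, and default limits are illustrative and not taken from a specific autoscaler.

```python
import math


def desired_instances(queue_length: int, events_per_instance: int,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Compute how many consumer instances the current backlog calls for.

    The result is clamped so the system never drops below min_instances
    or grows beyond max_instances.
    """
    needed = math.ceil(queue_length / events_per_instance)
    return max(min_instances, min(max_instances, needed))


print(desired_instances(0, 100))      # 1
print(desired_instances(250, 100))    # 3
print(desired_instances(5_000, 100))  # 10
```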

This dynamic scaling keeps performance and responsiveness high, even during peak loads.

Real-Time Data Processing in Event-Driven Architecture

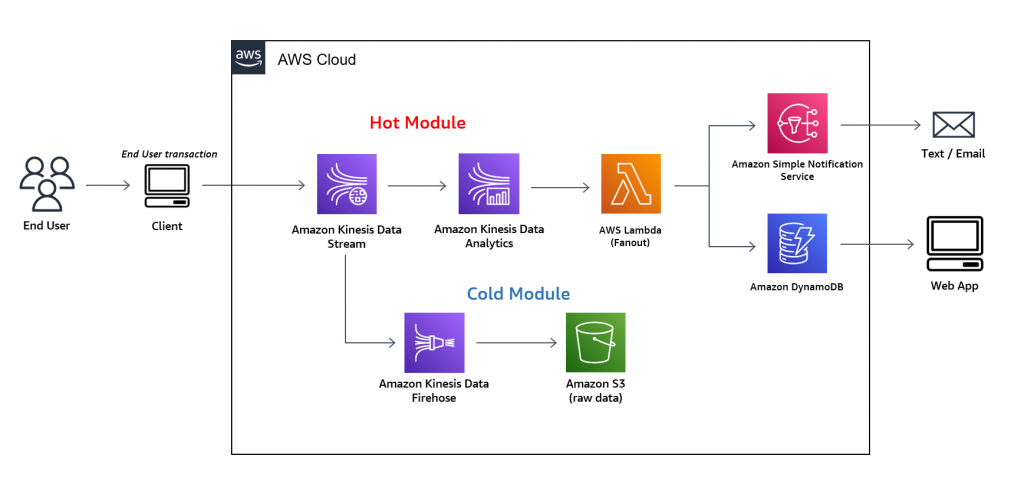

Real-time data processing is a critical requirement for many modern applications, and event-driven architecture excels in this domain. Being able to capture and process events as they occur, and react to them in real time, makes a night-and-day difference: your information stays continuously up to date.

Such capability is essential for applications that require immediate responses or need to provide up-to-date information to users. Whether it’s monitoring Internet of Things (IoT) devices or processing financial transactions, an event-driven architecture helps ensure that events are handled with minimal latency.

There is a substantial difference between real-time and near-real-time data processing. The latter is usually much easier to achieve, because you can still afford some delay (usually minutes). Achieving a true real-time architecture, however, is a very challenging task.

To get a better picture of what that means, let’s explore a concrete example of what to expect from an event-driven architecture with true real-time data processing.

Consider a ride-sharing platform operating in a city. The platform needs to process a high volume of events in real time to be usable for both drivers and passengers. If processing were only near-real-time, the platform would no longer serve its purpose. Here is what to consider in such an architecture.

#1. Event Generation

Events are generated as soon as a passenger requests a ride or a driver accepts a ride request. These events are then published to a message broker.

For example, a ride request event is created with details such as the pickup location and destination.

#2. Real-Time Matching

When a ride request event is published, a matching component subscribes to the event and processes it in real time, pairing the request with an available driver.

The matching component uses algorithms to match the request with the most suitable available driver, based on factors like proximity or vehicle type. Again, the real-time requirement is very strict, because drivers move quickly. Within five minutes, a driver’s distance from the pickup point can be very different from what it was when the passenger made the request.
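The sketch below shows one way a matching component might pick a driver. It filters available drivers by vehicle type, then takes the one closest to the pickup point by great-circle (haversine) distance. The `RideRequested` and `Driver` classes and their fields are hypothetical. Real matching algorithms usually weigh more factors, such as estimated travel time.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Driver:
    driver_id: str
    lat: float
    lon: float
    vehicle_type: str
    available: bool = True


@dataclass(frozen=True)
class RideRequested:
    """Hypothetical event published when a passenger requests a ride."""
    request_id: str
    pickup_lat: float
    pickup_lon: float
    vehicle_type: str


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def match(request: RideRequested, drivers: list[Driver]) -> Optional[Driver]:
    """Pick the closest available driver with the requested vehicle type."""
    candidates = [d for d in drivers
                  if d.available and d.vehicle_type == request.vehicle_type]
    if not candidates:
        return None
    best = min(candidates, key=lambda d: haversine_km(
        request.pickup_lat, request.pickup_lon, d.lat, d.lon))
    best.available = False  # reserve the driver so no other request gets them
    return best


drivers = [
    Driver("d1", 52.530, 13.410, "standard"),
    Driver("d2", 52.600, 13.500, "standard"),
    Driver("d3", 52.521, 13.405, "xl"),
]
request = RideRequested("r-1", 52.520, 13.405, "standard")
print(match(request, drivers).driver_id)  # d1
```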

#3. Dynamic Pricing

As ride request and driver availability events are processed, a pricing component can subscribe to these events as well and calculate the actual cost of the ride.

For example, the pricing component can increase the fare during peak hours or periods of high demand and decrease it when demand is low. Demand can change very quickly, so delays are undesirable here too.
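Here is a minimal pricing sketch. It assumes the multiplier simply follows the ratio of open requests to available drivers and is clamped between a hypothetical floor and cap.

```python
def surge_multiplier(open_requests: int, available_drivers: int,
                     floor: float = 0.8, cap: float = 3.0) -> float:
    """Multiplier that follows the demand-to-supply ratio within [floor, cap]."""
    if available_drivers == 0:
        # No supply at all: charge the cap if anyone is waiting.
        return cap if open_requests > 0 else 1.0
    ratio = open_requests / available_drivers
    return max(floor, min(cap, ratio))


def fare(base_fare: float, open_requests: int, available_drivers: int) -> float:
    return round(base_fare * surge_multiplier(open_requests, available_drivers), 2)


print(fare(10.0, 15, 10))  # 15.0 (demand is 1.5x supply)
print(fare(10.0, 2, 10))   # 8.0  (low demand, floor applies)
print(fare(10.0, 90, 10))  # 30.0 (capped at 3x)
```

Because the multiplier is recomputed on every incoming event, the fare tracks demand as quickly as the events arrive.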

#4. Real-Time Tracking

Once a ride is accepted, the driver’s real-time location becomes important. The driver’s location updates are captured as events and processed in real time, and passengers can follow them through a tracking component.
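Location events can arrive out of order over mobile networks, so a tracking component should not blindly overwrite the stored position. The minimal sketch below assumes each hypothetical `LocationUpdated` event carries a device timestamp.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LocationUpdated:
    """Hypothetical event emitted by the driver's app."""
    ride_id: str
    lat: float
    lon: float
    timestamp: float  # seconds since the epoch, set on the device


class TrackingComponent:
    """Keeps the latest known driver position for each ride."""

    def __init__(self) -> None:
        self._latest: dict[str, LocationUpdated] = {}

    def on_location_updated(self, event: LocationUpdated) -> None:
        current = self._latest.get(event.ride_id)
        # Drop stale updates so a late-arriving event never moves the car back.
        if current is None or event.timestamp > current.timestamp:
            self._latest[event.ride_id] = event

    def position(self, ride_id: str) -> Optional[tuple[float, float]]:
        event = self._latest.get(ride_id)
        return None if event is None else (event.lat, event.lon)


tracker = TrackingComponent()
tracker.on_location_updated(LocationUpdated("ride-1", 52.500, 13.400, 100.0))
tracker.on_location_updated(LocationUpdated("ride-1", 52.510, 13.410, 102.0))
# This update was sent earlier but arrived last, so it is ignored.
tracker.on_location_updated(LocationUpdated("ride-1", 52.505, 13.405, 101.0))
print(tracker.position("ride-1"))  # (52.51, 13.41)
```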

#5. Real-Time Analytics

The platform performs real-time analytics on various aspects. For example, events related to driver ratings or customer feedback are processed in real time to generate insights and trigger actions such as driver performance notifications or reward programs.
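To illustrate real-time analytics, the sketch below keeps a rolling window of each driver’s most recent ratings. It records a notification when the average drops below a threshold. The window size, threshold, and message format are assumptions.

```python
from collections import defaultdict, deque


class RatingAnalytics:
    """Rolling average of each driver's most recent ratings (hypothetical)."""

    def __init__(self, window: int = 5, threshold: float = 4.0) -> None:
        self.window = window
        self.threshold = threshold
        # Each driver gets a bounded deque; old ratings fall out automatically.
        self._ratings: defaultdict[str, deque[int]] = defaultdict(
            lambda: deque(maxlen=window))
        self.notifications: list[str] = []

    def on_driver_rated(self, driver_id: str, stars: int) -> None:
        ratings = self._ratings[driver_id]
        ratings.append(stars)
        average = sum(ratings) / len(ratings)
        # Wait for a full window so a single bad ride does not trigger an alert.
        if len(ratings) == self.window and average < self.threshold:
            self.notifications.append(f"{driver_id}: average {average:.1f}")


analytics = RatingAnalytics()
for stars in (5, 3, 3, 3, 4):
    analytics.on_driver_rated("driver-7", stars)
for stars in (5, 5, 4, 5, 5):
    analytics.on_driver_rated("driver-9", stars)
print(analytics.notifications)  # ['driver-7: average 3.6']
```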

Practical Implementation of Event-Driven Architecture

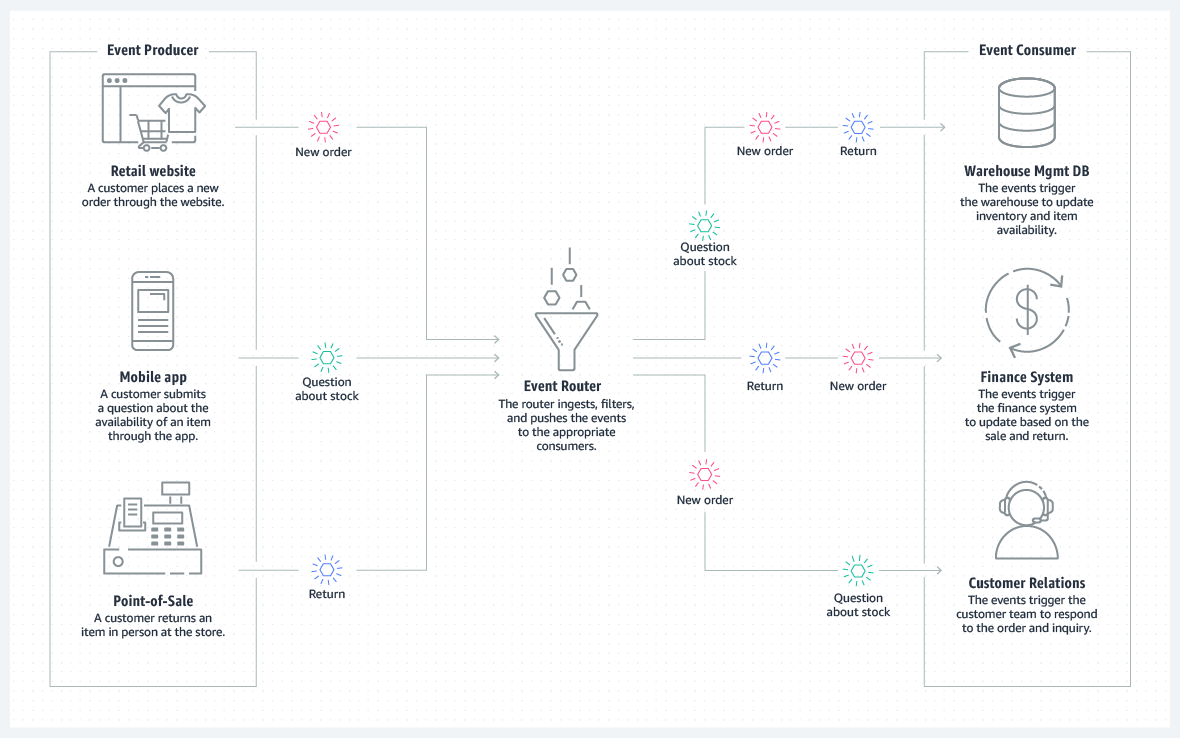

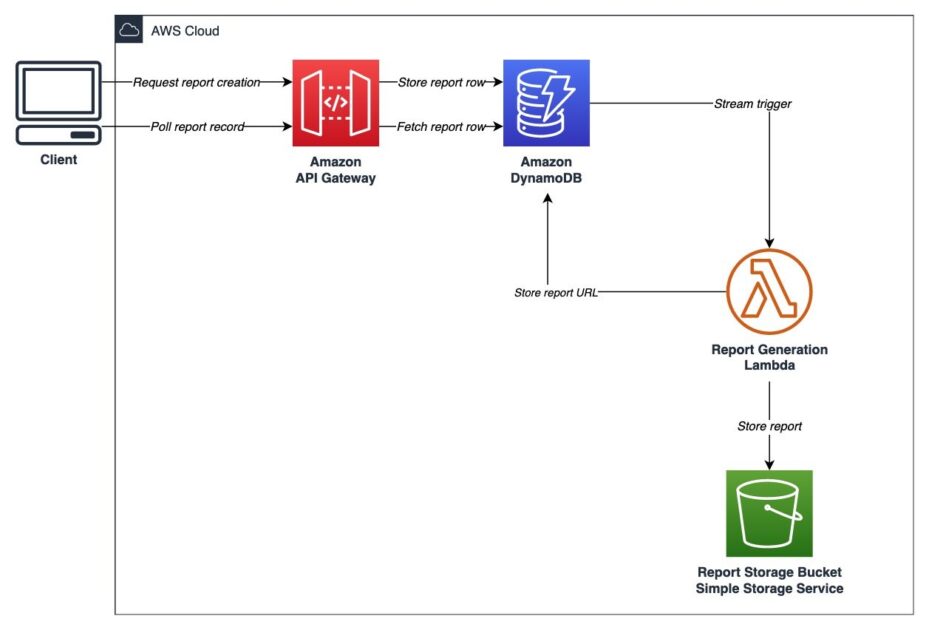

Let’s now consider another practical example of how event-driven architecture can be implemented in the context of an e-commerce platform.

In this scenario, think about an online marketplace that connects buyers and sellers. The platform needs to handle a high volume of events, such as new product listings, purchases, inventory updates, and user interactions.

First, the platform can decouple its components: product catalog, inventory management, order processing, and user notifications become standalone components that communicate through events. For example, when a seller adds a new product listing, an event is generated and published to a message broker.

As the platform experiences spikes in traffic, the architecture can scale horizontally by adding more instances of the components that process events. For instance, when a large number of users make purchases simultaneously, the order processing component scales up to handle the load by consuming events from the message broker.

Further, the platform provides users with up-to-date information. For example, when a buyer places an order, the order processing component immediately updates the inventory and triggers notifications to the seller and the buyer. This must happen immediately; otherwise, discrepancies such as selling items that are already out of stock could arise.
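This order flow can be sketched as a single handler that checks and updates stock, then queues notifications for the buyer and seller. The `OrderProcessor` class and its outbox of `(recipient, message)` tuples are hypothetical stand-ins for real inventory and notification components.

```python
from dataclasses import dataclass, field


@dataclass
class OrderProcessor:
    """Hypothetical order component reacting to 'order placed' events."""

    stock: dict[str, int]
    # Outgoing notification events as (recipient, message) pairs.
    outbox: list[tuple[str, str]] = field(default_factory=list)

    def on_order_placed(self, order_id: str, sku: str, quantity: int) -> bool:
        available = self.stock.get(sku, 0)
        if available < quantity:
            # Rejecting right away prevents selling stock that no longer exists.
            self.outbox.append(("buyer", f"{order_id} rejected: {sku} out of stock"))
            return False
        self.stock[sku] = available - quantity
        self.outbox.append(("seller", f"{order_id}: ship {quantity} x {sku}"))
        self.outbox.append(("buyer", f"{order_id} confirmed"))
        return True


processor = OrderProcessor(stock={"desk-lamp": 1})
print(processor.on_order_placed("o-1", "desk-lamp", 1))  # True
print(processor.on_order_placed("o-2", "desk-lamp", 1))  # False
print(processor.stock)  # {'desk-lamp': 0}
```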

Next, if you decide to introduce a recommendation engine, you can add a new component that consumes user interaction events and generates personalized recommendations without impacting existing components.

If a component fails, an event-driven architecture needs a mechanism to recover. Suppose the inventory component experiences an outage. In that case, events related to inventory updates can be buffered or redirected to an alternative component. For the end user, order processing still looks uninterrupted.
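Buffering can be sketched as a small wrapper that queues events while the consumer is failing and replays them in order once it recovers. The `BufferedDelivery` class is hypothetical. The sketch also assumes that the inventory handler signals an outage by raising `ConnectionError`.

```python
from collections import deque
from typing import Any, Callable


class BufferedDelivery:
    """Buffers events while a consumer is down and replays them in order."""

    def __init__(self, handler: Callable[[dict[str, Any]], None]) -> None:
        self.handler = handler
        self.buffer: deque[dict[str, Any]] = deque()

    def deliver(self, event: dict[str, Any]) -> None:
        # Preserve ordering: if events are already waiting, queue behind them.
        if self.buffer:
            self.buffer.append(event)
            return
        try:
            self.handler(event)
        except ConnectionError:
            self.buffer.append(event)

    def retry(self) -> None:
        # Replay until the buffer is empty or the handler fails again.
        while self.buffer:
            try:
                self.handler(self.buffer[0])
            except ConnectionError:
                return
            self.buffer.popleft()


inventory_up = False
applied: list[str] = []


def inventory_handler(event: dict[str, Any]) -> None:
    if not inventory_up:
        raise ConnectionError("inventory service unavailable")
    applied.append(event["sku"])


delivery = BufferedDelivery(inventory_handler)
delivery.deliver({"sku": "desk-lamp"})
delivery.deliver({"sku": "office-chair"})
print(len(delivery.buffer))  # 2

inventory_up = True  # the inventory component comes back online
delivery.retry()
print(applied)  # ['desk-lamp', 'office-chair']
```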

Conclusion

Event-driven architecture offers a practical and efficient approach to software delivery. It’s not easy to implement, and many teams are not yet familiar with the patterns such an architecture requires.

However, its benefits, including loose coupling, scalability, and real-time data processing, make it a compelling choice for building robust applications for future software platforms.


Editor: Narendra Mohan Mittal is a senior editor at Geekflare. He is an experienced content manager with extensive experience in digital branding strategies.
