AI platforms have completely changed how businesses build new applications and scale their deployment. This new category of enterprise software addresses the difficulty of building and running AI systems quickly and reliably while keeping overhead costs low.

AI platforms let companies build and maintain machine learning models at scale, making the technology more affordable than ever. For enterprises, this opens up opportunities to expand into several new industries.

In this article, I’ll take a look at the best AI platforms for developing your own apps. It is worth noting that the choice of technology stack for AI platform development depends on factors such as scalability, performance, cost, and user requirements. After listing the platforms, I’ll also discuss the benefits of AI platforms and explain why they are so important for companies that want to remain competitive in the digital era.

Let us now take a comprehensive look at each of the platforms.

Amazon AI Services

Amazon Web Services (AWS) has made tremendous advancements in the fields of artificial intelligence (AI) and machine learning (ML), providing a full range of services, infrastructure, and resources to help clients at every stage of their ML adoption journey.

The AI platform from AWS has been essential for training machine learning models at scale, hosting trained models in the cloud, and making predictions on new data.

With Amazon SageMaker, the AWS machine learning service, users can choose from a variety of instance types for training jobs, enable distributed training, run hyperparameter tuning, and accelerate workloads with GPUs, among other customization options.
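At its simplest, hyperparameter tuning means training the same model under different settings and keeping whichever one scores best on held-out validation data. The sketch below shows plain grid search in standard-library Python. It assumes a made-up one-feature linear regression trained by gradient descent and does not call any AWS API; the data, the `train` function, and the search space are illustrative assumptions.

```python
from itertools import product

# Hypothetical toy data: y = 2x + 1 exactly; later x values are held out for validation.
TRAIN = [(x, 2 * x + 1) for x in range(0, 8)]
VALID = [(x, 2 * x + 1) for x in range(8, 10)]


def train(data, learning_rate, epochs):
    """Fit y = w*x + b with full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(model, data):
    """Mean squared error of a (w, b) model on a dataset."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def grid_search(grid):
    """Train once per combination in the grid; return (best_params, best_validation_mse)."""
    best = None
    for lr, epochs in product(grid["learning_rate"], grid["epochs"]):
        score = mse(train(TRAIN, lr, epochs), VALID)
        if best is None or score < best[1]:
            best = ({"learning_rate": lr, "epochs": epochs}, score)
    return best


best_params, best_score = grid_search(
    {"learning_rate": [0.001, 0.01, 0.05], "epochs": [50, 500]}
)
print(best_params, best_score)
```

Managed tuning services generally also support smarter strategies than exhaustive grid search, such as random or Bayesian search, and can run the individual trials in parallel.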

The platform’s prediction (inference) service can also serve predictions from trained models, regardless of whether those models were trained on AWS.
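A prediction service works with models trained anywhere because all it needs is a serialized model and a request format. The minimal sketch below illustrates that idea. It assumes a hypothetical JSON export format for a linear model and a hypothetical `{"instances": [...]}` / `{"predictions": [...]}` request and response shape. It is not the SageMaker hosting API.

```python
import json

# Hypothetical export format: a linear model saved as plain JSON. It could come
# from any training environment, not necessarily the hosting platform.
EXPORTED_MODEL = json.dumps({"weights": [0.5, -1.0], "bias": 2.0})


class LinearPredictor:
    """Loads the hypothetical JSON export and scores feature vectors."""

    def __init__(self, serialized):
        spec = json.loads(serialized)
        self.weights = spec["weights"]
        self.bias = spec["bias"]

    def predict_one(self, features):
        if len(features) != len(self.weights):
            raise ValueError(f"expected {len(self.weights)} features, got {len(features)}")
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


def handle_request(predictor, body):
    """Turn a JSON request {"instances": [...]} into {"predictions": [...]}."""
    instances = json.loads(body)["instances"]
    return json.dumps({"predictions": [predictor.predict_one(x) for x in instances]})


predictor = LinearPredictor(EXPORTED_MODEL)
response = handle_request(predictor, json.dumps({"instances": [[4.0, 1.0], [0.0, 3.0]]}))
print(response)
```

In a real deployment, `handle_request` would sit behind an HTTP endpoint, and the platform would handle scaling, authentication, and model versioning.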

Amazon’s most recent announcement was Bedrock, a set of generative AI tools that can help AWS users build chatbots, create and classify images from prompts, and generate and summarize text.


AWS offers developers a comprehensive range of AI services, which makes it one of the top choices on this list.

TensorFlow

TensorFlow has grown into a comprehensive machine learning framework that covers every stage of the workflow. Along with tools that simplify model building and help produce scalable solutions, it offers pre-trained, production-ready models.

TensorFlow 2, the current major version, supports distributed training and streamlines many of the APIs from TensorFlow 1.

TensorFlow Enterprise adds performance and reliability improvements for AI applications, along with managed services and professional support for businesses.

TensorFlow I/O extends the platform’s built-in functionality with support for additional file systems and file formats. It is a collection of Dataset, streaming, and file system extensions for machine learning problems that TensorFlow’s built-in support does not cover.
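The main idea behind streaming Dataset extensions is to read records lazily and group them into batches, so training never has to load the whole dataset into memory. The sketch below shows that idea with plain Python generators. It is not the `tf.data` or TensorFlow I/O API. The CSV layout (features followed by an integer label) and the in-memory `io.StringIO` source are assumptions for illustration.

```python
import csv
import io
from itertools import islice


def stream_records(file_obj):
    """Yield one (features, label) pair at a time from a CSV file object."""
    for row in csv.reader(file_obj):
        *features, label = row  # assumed layout: feature columns, then the label
        yield [float(v) for v in features], int(label)


def batch(records, batch_size):
    """Group an iterator of records into lists of at most batch_size items."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk


# io.StringIO stands in for a file, object store, or network stream.
source = io.StringIO("0.1,0.2,0\n0.3,0.4,1\n0.5,0.6,0\n0.7,0.8,1\n0.9,1.0,1\n")
batches = list(batch(stream_records(source), batch_size=2))
print([len(b) for b in batches])
```

Only one batch needs to be in memory at a time, because each stage pulls records from the previous one only when they are needed.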

TensorFlow Hub provides access to hundreds of pre-trained, ready-to-deploy machine learning models for TensorFlow.

Google AI Services

Google Cloud Platform offers Google AI Services, a complete set of tools for machine learning work. Users can make predictions with models hosted on Google Cloud and manage jobs, models, and versions through the AI Platform REST API.

The AI Platform Training service offers customizable options for training models, including machine type selection, distributed training, and GPU- and TPU-accelerated training.
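A common form of distributed training is synchronous data parallelism. Each worker computes gradients on its own shard of the data, the gradients are averaged (an "all-reduce"), and every worker applies the same update. The standard-library sketch below simulates this in a single process. It is an assumption-laden illustration, not Google Cloud’s implementation: the workers run one after another here, and the toy linear model and round-robin sharding are made up.

```python
def shard(data, num_workers):
    """Split data into num_workers interleaved (round-robin) shards."""
    return [data[i::num_workers] for i in range(num_workers)]


def local_gradient(w, b, shard_data):
    """MSE gradient for y = w*x + b on one worker's shard."""
    n = len(shard_data)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard_data) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard_data) / n
    return gw, gb


def all_reduce_mean(grads):
    """Average per-worker gradients, as a synchronous all-reduce would."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def train_data_parallel(data, num_workers, learning_rate=0.01, steps=2000):
    shards = shard(data, num_workers)
    w, b = 0.0, 0.0
    for _ in range(steps):
        # On a real cluster, each local_gradient call runs on a separate worker.
        grads = [local_gradient(w, b, s) for s in shards]
        gw, gb = all_reduce_mean(grads)
        w -= learning_rate * gw  # every worker applies the same averaged update
        b -= learning_rate * gb
    return w, b


data = [(x, 3 * x - 2) for x in range(8)]
w, b = train_data_parallel(data, num_workers=4)
print(round(w, 3), round(b, 3))
```

When all shards are the same size, the averaged gradient equals the full-batch gradient, so adding workers speeds up each step without changing the result.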

Users can manage their models, versions, and jobs in the Google Cloud console, which provides a user-friendly interface for working with machine learning resources. AI Platform resources are also integrated with other Google Cloud tools, such as Cloud Logging and Cloud Monitoring.

Customers can also use the gcloud command-line tool (Google Cloud CLI) to manage models and versions, submit jobs, and perform other AI Platform tasks.


Google AI is committed to bringing the advantages of AI to everyone, from cutting-edge research to product integrations that make routine activities easier.

H2O

Software provider H2O.ai gives businesses access to a range of machine learning platforms and solutions. Its flagship, H2O, is a fast, scalable, in-memory, open-source platform for machine learning and predictive analytics. Users can build models on big data and then deploy them easily in enterprise environments.

H2O is well known for robust, efficient implementations of algorithms such as generalized linear models, deep learning, and gradient boosting machines. Its optimized, distributed computing engine makes model training and inference fast.

H2O’s scalability lets it handle enormous datasets and demanding modeling jobs, which makes it a great option for enterprise-level applications. Its AutoML feature automates algorithm selection and hyperparameter tuning, and it combines the best resulting models into stacked ensembles.
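The core AutoML loop is to fit several candidate algorithms, rank them on a validation leaderboard, and combine the strongest ones. The standard-library sketch below illustrates that loop. It does not use H2O’s API. The candidate models, the data (`y = x²`), and the top-2 averaging ensemble are assumptions, and H2O’s stacked ensembles train a metalearner rather than taking a simple average.

```python
from statistics import mean


def fit_mean(rows):
    """Baseline: always predict the training mean."""
    c = mean(y for _, y in rows)
    return lambda x: c


def fit_linear(rows):
    """Ordinary least squares for y = a*x + b (closed form)."""
    mx = mean(x for x, _ in rows)
    my = mean(y for _, y in rows)
    a = sum((x - mx) * (y - my) for x, y in rows) / sum((x - mx) ** 2 for x, _ in rows)
    b = my - a * mx
    return lambda x: a * x + b


def fit_knn(k):
    """k-nearest-neighbour regression on the single feature."""
    def fit(rows):
        def predict(x):
            nearest = sorted(rows, key=lambda p: abs(p[0] - x))[:k]
            return mean(y for _, y in nearest)
        return predict
    return fit


CANDIDATES = {"mean": fit_mean, "linear": fit_linear, "knn_1": fit_knn(1), "knn_3": fit_knn(3)}


def mse(predict, rows):
    return mean((predict(x) - y) ** 2 for x, y in rows)


def auto_ml(train_rows, valid_rows, top_k=2):
    """Fit every candidate, rank by validation MSE, and average the top_k models."""
    models = {name: fit(train_rows) for name, fit in CANDIDATES.items()}
    leaderboard = sorted(((n, mse(m, valid_rows)) for n, m in models.items()), key=lambda r: r[1])
    top = [models[name] for name, _ in leaderboard[:top_k]]
    ensemble = lambda x: mean(m(x) for m in top)  # simple average, not a trained metalearner
    return leaderboard, ensemble


data = [(i / 2, (i / 2) ** 2) for i in range(20)]
valid_set = data[1::4]
train_set = [p for i, p in enumerate(data) if i % 4 != 1]
leaderboard, ensemble = auto_ml(train_set, valid_set)
for name, score in leaderboard:
    print(f"{name:7s} {score:.3f}")
print("ensemble", round(mse(ensemble, valid_set), 3))
```

Real AutoML systems also search each algorithm’s hyperparameters and use cross-validation, but the leaderboard-then-combine structure is the same.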


H2O is popular in both the R and Python communities and is used by more than 18,000 organizations worldwide. It comes in three pricing tiers: Lite (free), Plus (starting at $140/month), and Enterprise (custom pricing).

Petuum

Petuum provides cutting-edge AI solutions and enables next-generation AI automation for enterprises. Its composable, open, and flexible enterprise MLOps platform is designed to make it simple for AI/ML teams to scale and operationalize their machine learning pipelines.


Petuum describes the platform as the world’s first composable MLOps platform. It lets anyone automate processes with the latest large language models (LLMs) without any programming or AI expertise. According to Petuum, its customers have increased speed to value and the productivity of their ML teams and resources by more than 50%.

Petuum is still working out how to monetize the platform. One possible revenue stream is a licensing model in which clients pay based on the number of machines running a given AI system.

Petuum has raised a total of $108 million from investors including SoftBank, Tencent, Advantech Capital, Northern Light Venture Capital, and Oriza.

Polyaxon

Polyaxon is an open-source platform for managing deep learning and other machine learning models at scale. It automatically tracks key model metrics, hyperparameters, visualizations, artifacts, and resources, and it version-controls code and data.
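Experiment tracking comes down to recording, for every run, which parameters, data version, and metrics produced a given result. The sketch below is a minimal illustration using only the standard library. `RunTracker` and its methods are hypothetical and are not Polyaxon’s tracking API. The sketch identifies the data version by a SHA-256 hash of the file contents and leaves out code versioning (which a real platform would typically take from Git).

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_digest(path):
    """SHA-256 of a file's bytes, used here as the data version identifier."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


class RunTracker:
    """Hypothetical minimal tracker: stores params, metrics, and data versions as JSON."""

    def __init__(self, root, run_name):
        self.path = Path(root) / f"{run_name}.json"
        self.record = {"run": run_name, "params": {}, "metrics": {}, "data": {}}

    def log_params(self, **params):
        self.record["params"].update(params)

    def log_metric(self, name, value, step):
        self.record["metrics"].setdefault(name, []).append({"step": step, "value": value})

    def log_data(self, path):
        self.record["data"][str(path)] = file_digest(path)

    def save(self):
        self.path.write_text(json.dumps(self.record, indent=2))
        return self.path


with tempfile.TemporaryDirectory() as root:
    data_file = Path(root) / "train.csv"
    data_file.write_text("x,y\n1,2\n2,4\n")
    tracker = RunTracker(root, "run-001")
    tracker.log_params(learning_rate=0.01, epochs=10)
    tracker.log_data(data_file)
    for step, loss in enumerate([0.9, 0.5, 0.2]):  # stand-in training loop
        tracker.log_metric("loss", loss, step)
    saved = json.loads(tracker.save().read_text())

print(saved["params"], saved["metrics"]["loss"][-1])
```

If the data file changes, its digest changes too, so two runs with identical parameters but different data can still be told apart.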


Polyaxon provides a workflow engine for scheduling jobs and managing complex dependencies between them, along with an optimization engine for automating model tuning. It also offers a registry for storing and versioning components, with role-based access control, security, analytics, and governance.
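A workflow engine’s scheduling problem reduces to a dependency graph. A step can run once all of its upstream steps have finished, and steps with no pending dependencies can run side by side. The sketch below uses Python’s standard `graphlib.TopologicalSorter` (Python 3.9+) to compute those waves. The pipeline steps are hypothetical, and this is not how Polyaxon defines workflows.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest": set(),
    "validate": {"ingest"},
    "features": {"validate"},
    "train_a": {"features"},
    "train_b": {"features"},
    "evaluate": {"train_a", "train_b"},
}


def schedule(pipeline):
    """Return waves of steps; steps in the same wave have no pending dependencies."""
    sorter = TopologicalSorter(pipeline)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # in a real engine, mark done only after the jobs finish
    return waves


print(schedule(PIPELINE))
```

In this example, `train_a` and `train_b` land in the same wave, so a scheduler could run both training jobs concurrently before `evaluate` starts.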

Polyaxon bases reproducibility and pipelines on each step’s input and output files, and it is language- and framework-agnostic, supporting a broad range of programming languages and libraries.
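Tying reproducibility to input and output files makes it possible to fingerprint each step. If the input file contents and parameters are unchanged and the output still exists, the step can be skipped. If anything changed, it reruns. The standard-library sketch below shows this caching pattern. The `run_step` and `scale` functions and the in-memory `cache` dictionary are hypothetical illustrations, not Polyaxon internals.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def fingerprint(inputs, params):
    """Hash input file names and contents together with the step's parameters."""
    h = hashlib.sha256()
    for path in sorted(inputs):
        h.update(Path(path).name.encode())
        h.update(Path(path).read_bytes())
    h.update(json.dumps(params, sort_keys=True).encode())
    return h.hexdigest()


def run_step(func, inputs, output, params, cache):
    """Run func only if its inputs or params changed; return True if it actually ran."""
    key = fingerprint(inputs, params)
    if cache.get(str(output)) == key and Path(output).exists():
        return False  # output is up to date with these exact inputs and params
    func(inputs, output, **params)
    cache[str(output)] = key
    return True


def scale(inputs, output, factor):
    """Hypothetical step: multiply every number in the input file by factor."""
    numbers = [float(v) for v in Path(inputs[0]).read_text().split()]
    Path(output).write_text(" ".join(str(n * factor) for n in numbers))


with tempfile.TemporaryDirectory() as d:
    src, out = Path(d) / "in.txt", Path(d) / "out.txt"
    src.write_text("1 2 3")
    cache = {}
    first = run_step(scale, [src], out, {"factor": 2}, cache)   # runs
    second = run_step(scale, [src], out, {"factor": 2}, cache)  # skipped
    src.write_text("1 2 4")
    third = run_step(scale, [src], out, {"factor": 2}, cache)   # input changed, reruns
    result = out.read_text()

print(first, second, third, result)
```

Because the fingerprint depends only on file contents and parameters, the step behaves the same no matter which language or framework the step itself is written in.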

Polyaxon can be deployed on-premises or in the cloud, and it lets you run distributed training jobs and concurrent experiments to make the most of your cluster’s resources.
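Running concurrent experiments means submitting several independent training configurations at once and letting a scheduler place them on the available capacity. The sketch below imitates that locally with `concurrent.futures.ThreadPoolExecutor`, where `max_workers` stands in for cluster capacity. The experiment function and configurations are hypothetical. Python threads do not run CPU-bound code in parallel, so this shows only the submission pattern. A platform like Polyaxon would run each experiment as a separate job on cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def run_experiment(config):
    """Hypothetical experiment: fit y = w*x on toy data and return (name, final loss)."""
    data = [(x, 3.0 * x) for x in range(1, 6)]
    w = 0.0
    for _ in range(config["epochs"]):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= config["learning_rate"] * grad
    loss = sum((w * x - y) ** 2 for x, y in data) / len(data)
    return config["name"], loss


configs = [
    {"name": "lr-0.001", "learning_rate": 0.001, "epochs": 100},
    {"name": "lr-0.01", "learning_rate": 0.01, "epochs": 100},
    {"name": "lr-0.05", "learning_rate": 0.05, "epochs": 100},
]

# max_workers plays the role of the cluster's available capacity.
with ThreadPoolExecutor(max_workers=2) as pool:
    results = dict(pool.map(run_experiment, configs))

best = min(results, key=results.get)
print(best, results[best])
```

For CPU-bound experiments on a single machine, `ProcessPoolExecutor` has the same interface and does run work in parallel.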


For enterprises that want to manage deep learning and other machine learning models at scale, Polyaxon offers a strong, adaptable machine learning platform that is worth exploring.

DataRobot

DataRobot is a complete AI platform that interoperates with a wide range of systems and provides a team of AI specialists to help businesses get the most out of AI.

As an open, comprehensive AI lifecycle platform, DataRobot works with an enterprise’s existing data platforms and deployment infrastructure, helping companies get the most out of their current technology investments.

Users can create, deploy, and manage machine learning models in the cloud with DataRobot AI Cloud, the cloud-based version of the company’s platform. DataRobot also automates a variety of tasks, so users can complete them without specialized knowledge.

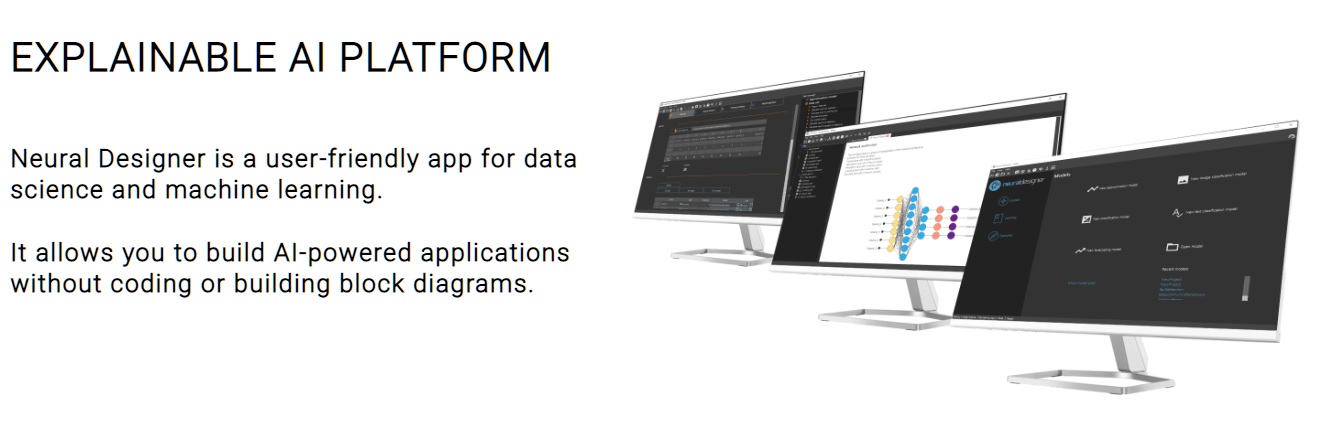

Neural Designer

Neural Designer is a user-friendly data science and machine learning tool that focuses on neural networks, an advanced technique in the field. It lets users build AI-driven apps without writing code or creating block diagrams.

A broad range of capabilities is available on the platform, such as automated machine learning, model maintenance and deployment, and seamless connections with other programs and platforms.

Neural Designer has several benefits, the most notable being that, according to the vendor, it uses less energy than competing machine learning platforms. When training neural networks, this can save a lot of money.

Enterprise pricing for Neural Designer depends on the amount of data used, GPU usage, the type of technical support, and the length of the subscription. Neural Designer offers thorough support, tiered by license type, with all the advantages of an in-house technical department.

IBM Watson

Watson is a supercomputer developed by IBM that answers queries intelligently by combining analytical software and AI, an approach known as “cognitive intelligence”. It pairs state-of-the-art hardware and software with processing speeds of up to 80 teraflops.

IBM Watson uses natural language processing (NLP) to understand the syntax and meaning of human language. By digesting and analyzing enormous volumes of data, Watson answers human questions in a matter of seconds.
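Watson’s question-answering pipeline is far more sophisticated than anything that fits here. A basic building block of many NLP question-answering systems, however, is retrieval: turn the question and each candidate passage into word-count vectors and pick the passage with the highest cosine similarity. The standard-library sketch below illustrates only that idea. The stop-word list, tokenizer, and passages are made-up assumptions, and the code does not reflect IBM’s implementation.

```python
import math
import re
from collections import Counter

# Hypothetical, tiny stop-word list for illustration.
STOPWORDS = {"the", "a", "an", "is", "of", "what", "which", "to", "in", "and", "by"}


def tokens(text):
    """Lowercase, split on non-letters, and drop common stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def cosine(a, b):
    """Cosine similarity of two word-count vectors (Counter returns 0 for missing words)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_passage(question, passages):
    """Return the passage whose word counts are most similar to the question's."""
    q = Counter(tokens(question))
    return max(passages, key=lambda p: cosine(q, Counter(tokens(p))))


PASSAGES = [
    "Gradient boosting builds an ensemble of shallow decision trees.",
    "Kubernetes schedules containers across a cluster of machines.",
    "Tokenization splits raw text into words before further language processing.",
]
print(best_passage("How does tokenization split text?", PASSAGES))
```

Exact word matching misses related forms such as "split" and "splits". Production systems address this with stemming, embeddings, and learned ranking models.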

Thanks to IBM Watson’s technology, many businesses now have a competitive advantage in predictive analytics and problem-solving, which raises stakeholder and consumer value. Because it is accessible through the cloud, IBM Watson has become a popular choice for small and midsize businesses in a variety of industries.


AI products available on IBM Watson:

- IBM Watson Discovery

- IBM Watson Orchestrate

- IBM Watson Knowledge Catalog

- IBM Watson Studio

- IBM Instana Observability

- IBM Turbonomic

IBM Watson has developed into a dependable and formidable AI system that can provide insightful analysis and practical solutions across various sectors.

Now that you’ve seen the list of the best AI platforms for building your apps, let’s cover the basics of the benefits these platforms can bring to your business.

Benefits of AI Platforms for Businesses

AI platforms make it possible to design, develop, deploy, and manage machine learning and deep learning models at scale. They lower the cost of enterprise software development by reducing the effort spent on tasks such as data manipulation, data management, and deployment for different use cases.

Use cases can be grouped by the type of content the AI technology produces: visuals, audio, text, or code. Here is a quick summary of AI use cases across several industries:

- Custom models: Designing neural networks to perform business-specific tasks

- Code generation: Writing code, compiling it, and fixing bugs

- Text generation: Translation services, chatbots, content production

- Audio generation: Music composition, Text-To-Speech (TTS), Voiceover generation

- Visual output generation: Image generation, 3D-shape generation, video production

Beyond the benefits, it also helps to understand how these AI platforms are structured.

AI Platform Architecture

AI technology aims to replicate traits associated with human intelligence, such as perception, reasoning, and learning. AI platforms pursue this goal by using machine learning models to handle tasks that humans have traditionally performed.

Engineers working on AI platforms can adapt models and train them for a variety of specialized tasks. AI platforms are organized into layers that let businesses deploy these models with a variety of frameworks, languages, and tools. In general, there are three layers:

- Data and integration layer: This layer collects data from multiple systems to feed the AI algorithms, processes it, and stores it in a data repository for training and testing AI models. This is done with data integration tools.

- Model development layer: This layer offers tools and frameworks for creating and testing machine learning models. Along with libraries and frameworks for building and training models, it includes tools for fine-tuning and optimizing them for greater accuracy.

- Deployment and management layer: This layer deploys trained models to production and manages them. It includes management tools for scaling, maintaining, monitoring, and versioning models, to make sure they perform as expected in the real world.
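The three layers can be pictured as three stages that hand data to one another. The standard-library sketch below makes that flow concrete. The source records, the least-squares model, and the `ModelRegistry` class are hypothetical stand-ins for what a real platform provides at each layer, and the prediction counter is a placeholder for real monitoring.

```python
from dataclasses import dataclass, field
from statistics import mean


# Data and integration layer: merge records from several (made-up) source systems.
def integrate(*sources):
    """Combine records from multiple sources, dropping rows with missing values."""
    return [row for source in sources for row in source if None not in row]


# Model development layer: fit a simple y = a*x + b model by least squares.
def train(rows):
    mx = mean(x for x, _ in rows)
    my = mean(y for _, y in rows)
    a = sum((x - mx) * (y - my) for x, y in rows) / sum((x - mx) ** 2 for x, _ in rows)
    return {"a": a, "b": my - a * mx}


# Deployment and management layer: a hypothetical versioned registry with basic monitoring.
@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)
    prediction_count: int = 0

    def deploy(self, model):
        """Store a new model version and return its version number (starting at 1)."""
        self.versions.append(model)
        return len(self.versions)

    def predict(self, x, version=None):
        """Predict with a given version, or the latest one by default."""
        model = self.versions[(version or len(self.versions)) - 1]
        self.prediction_count += 1  # stand-in for real monitoring metrics
        return model["a"] * x + model["b"]


crm = [(1.0, 3.0), (2.0, 5.0)]
sensors = [(3.0, 7.0), (4.0, None)]  # the incomplete row is filtered out during integration
registry = ModelRegistry()
version = registry.deploy(train(integrate(crm, sensors)))
print(version, registry.predict(10.0))
```

Keeping the layers separate means each one can be swapped out independently. For example, you could replace the model in the middle layer without touching data integration or deployment.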

While these AI platform architectures are highly customizable to your requirements, they do require a fair amount of testing. They also offer plenty of benefits, but should you choose them over traditional enterprise software platforms? Let’s find out.

Differences Between AI Platforms and Traditional Enterprise Software Platforms

AI platforms are different from regular commercial software platforms in a number of ways, including their focus on AI-specific technologies, pre-built models and frameworks, and the requirement for specialized skill sets. Here, we look at some of the key differentiators between standard enterprise software and an AI platform.

- Traditional enterprise software is general-purpose, while AI platforms are built specifically for developing, deploying, and managing AI applications.

- AI platforms often include intelligent technologies, such as NLP, that regular enterprise software platforms lack.

- AI platforms require specialists to build and deploy AI applications, while typical enterprise software platforms can be designed and executed by more generalist software developers.

- AI platforms require massive data sets to train their models in order to be effective, while ordinary commercial software platforms can be produced with less data.

In summary, AI platforms are a good fit if you’re developing an app for a particular niche and have the specialists to operate the platform. Traditional software is easier to use and somewhat adaptable, but it can’t match the versatility that technologies like NLP give AI platforms.

Now that you know all about these AI platforms, let’s take a final look at the popular technology stacks in use today. This will help you determine the technology stack that you’ll require for your development needs.

Popular Technology Stacks

Here are some of the technologies most widely used to develop AI platforms.

The technology stack for AI platform development varies depending on the specific requirements and use cases. However, some of the commonly used technologies and frameworks include:

- Programming languages: Python, Java, C++, and R

- Machine learning frameworks: TensorFlow, Keras, PyTorch, scikit-learn, and Apache MXNet

- Data processing and management: Apache Spark, Apache Hadoop, and Apache Kafka

- Cloud platforms: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP)

- Database management: MySQL, PostgreSQL, MongoDB, and Cassandra

- Containerization: Docker and Kubernetes

So, based on your requirements, you can choose a framework and AI platform that fits your needs.

Conclusion

To wrap up, AI platforms have dramatically changed how businesses approach AI-powered applications. Machine learning models can now be built and maintained at scale, making the technology more economical than ever before.

AI platform design lets developers adapt models and train them for a range of specialized tasks, while the platform’s layers let companies deploy these models with a variety of frameworks, languages, and tools.

Although AI platforms need specialized skill sets and vast data sets to train their models, they are critical for businesses competing for an edge in the digital economy. As AI technology advances, we may anticipate more developments in AI platforms that transform the way we approach machine learning and deep learning models and their impact on society.

You can trust Geekflare

At Geekflare, trust and transparency are paramount. Our team of experts, with over 185 years of combined experience in business and technology, tests and reviews software, ensuring our ratings and awards are unbiased and reliable.