Network latency is the delay in transmitting requests or data from a source to a destination across a network. Let’s look at how you can troubleshoot it.

Any action that uses the network, such as opening a web page, clicking a link, or opening an app to play an online game, is called an activity. A user’s activity is the request, and the response time of a web application is the time it takes to answer that request.

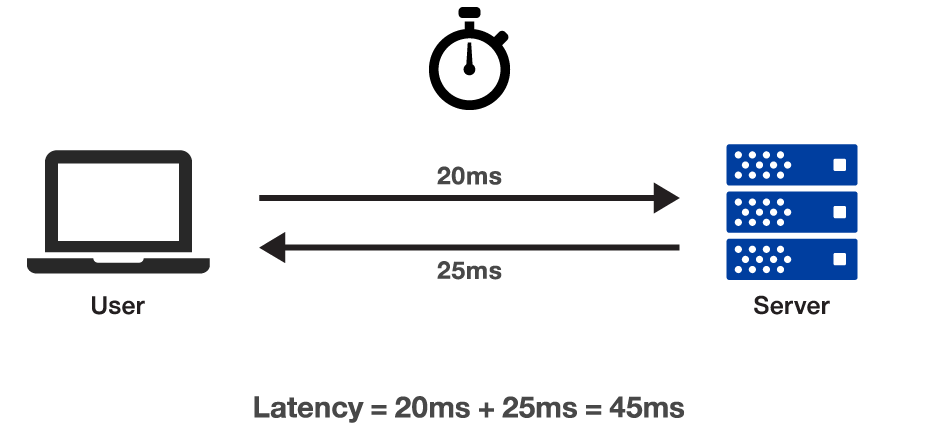

This delay also includes the time the server takes to process the request. Latency is therefore usually measured as a round trip: the time it takes for a request to be sent and processed, and for the response to be received and decoded by the user.
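To make the round-trip idea concrete, here is a minimal Python sketch that times request/response round trips against a small echo server it starts on localhost. The server, the OS-chosen port, and the "ping" payload are illustrative assumptions; because everything runs on one machine, the numbers reflect only local stack overhead, not real network latency.

```python
import socket
import threading
import time


def start_echo_server() -> tuple[socket.socket, int]:
    """Start a one-connection TCP echo server on localhost; return the socket and its port."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    srv.listen(1)

    def serve() -> None:
        conn, _addr = srv.accept()
        with conn:
            conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
            while data := conn.recv(1024):
                conn.sendall(data)  # the "response" is the request echoed back

    threading.Thread(target=serve, daemon=True).start()
    return srv, srv.getsockname()[1]


def recv_exactly(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed early")
        buf += chunk
    return buf


def measure_round_trips(port: int, count: int = 5) -> list[float]:
    """Time `count` request/response round trips, in milliseconds."""
    request = b"ping"
    samples = []
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(count):
            start = time.perf_counter()
            client.sendall(request)
            recv_exactly(client, len(request))  # wait for the full response
            samples.append((time.perf_counter() - start) * 1000)
    return samples


if __name__ == "__main__":
    server, port = start_echo_server()
    rtts = measure_round_trips(port)
    print(f"min {min(rtts):.3f} ms, max {max(rtts):.3f} ms")
    server.close()
```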

The term “low latency” refers to relatively short data transfer delays. Long delays, or high latency, are undesirable because they degrade the user experience.

How to Fix Network Latency

Many tools for analyzing and troubleshooting networks are available online; some are paid and some are free. One of them is Wireshark, an open-source, GPL-licensed application that captures data packets in real time. Wireshark is one of the most popular and widely used network protocol analyzers in the world.

Wireshark captures network packets and displays them in detail. Once the packets are captured, you can analyze them in real time or save them for offline analysis. Wireshark lets you examine your network traffic under a microscope, filtering and drilling down into it to find the source of problems, which helps with network analysis and, ultimately, network security.
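For offline analysis, a saved capture file can also be processed programmatically. The sketch below reads packet timestamps and raw bytes from a file in the classic libpcap format using only Python’s standard library. It is a minimal sketch under stated assumptions: it handles only the classic microsecond-resolution format, not pcapng (the format Wireshark saves by default), and the function name read_pcap_records is hypothetical.

```python
import io
import struct
from typing import BinaryIO, Iterator

PCAP_MAGIC = 0xA1B2C3D4  # classic libpcap magic number (microsecond timestamps)


def read_pcap_records(f: BinaryIO) -> Iterator[tuple[float, bytes]]:
    """Yield (timestamp_seconds, packet_bytes) for each record in a classic pcap file."""
    header = f.read(24)  # global header: magic, version, thiszone, sigfigs, snaplen, network
    if len(header) < 24:
        raise ValueError("truncated pcap global header")
    (magic,) = struct.unpack("<I", header[:4])
    if magic == PCAP_MAGIC:
        endian = "<"  # file was written little-endian
    elif magic == 0xD4C3B2A1:
        endian = ">"  # magic read in the wrong byte order: file is big-endian
    else:
        raise ValueError("not a classic microsecond-resolution pcap file")
    while True:
        record = f.read(16)  # per-packet header: ts_sec, ts_usec, incl_len, orig_len
        if len(record) < 16:
            return  # end of file
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        yield ts_sec + ts_usec / 1_000_000, f.read(incl_len)


if __name__ == "__main__":
    # Build a tiny two-packet capture in memory instead of reading a real file.
    data = struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0, 65535, 1)
    data += struct.pack("<IIII", 10, 500_000, 3, 3) + b"abc"
    data += struct.pack("<IIII", 11, 0, 2, 2) + b"xy"
    for ts, pkt in read_pcap_records(io.BytesIO(data)):
        print(ts, pkt)
```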

What Causes Network Delay?

The top reasons for slow network connectivity include:

- High Latency

- Application dependencies

- Packet loss

- Intercepting devices

- Inefficient window sizes

In this article, we examine each cause of network delay and how to troubleshoot it with Wireshark.

Examining With Wireshark

High Latency

Latency is the time it takes for data to travel from one endpoint to another, and high latency has an enormous impact on network communications. In the diagram below, we look at the round-trip time of a file download over a high-latency path as an example. Here the round-trip time can often exceed one second, which is unacceptable.

- Open Wireshark’s Statistics menu.

- Select TCP Stream Graphs.

- Choose Round Trip Time to see how the round-trip time changes over the course of the file download.
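The Round Trip Time graph plots, for each data segment, how long it took to be acknowledged. The Python sketch below computes the same kind of sample from hypothetical capture data: the time from a data segment to the first ACK that covers all of its bytes. Wireshark’s own tcp.analysis.ack_rtt field is computed more carefully (for example, around retransmissions), and this sketch ignores sequence-number wraparound, so treat it only as an illustration of the idea; the Segment type and rtt_samples function are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    time: float  # capture timestamp, seconds
    seq: int     # sequence number of the first payload byte
    length: int  # payload length, bytes


def rtt_samples(data: list[Segment], acks: list[tuple[float, int]]) -> list[float]:
    """Return one RTT sample in ms per data segment that is fully acknowledged.

    `acks` is a time-ordered list of (timestamp, ack_number) pairs from the receiver.
    """
    samples = []
    for seg in data:
        next_expected = seg.seq + seg.length  # ACK number that covers this whole segment
        for ack_time, ack_no in acks:
            if ack_time >= seg.time and ack_no >= next_expected:
                samples.append((ack_time - seg.time) * 1000)
                break  # only the first covering ACK counts
    return samples


if __name__ == "__main__":
    sent = [Segment(0.000, 1, 1000), Segment(0.010, 1001, 1000)]
    acks = [(0.120, 1001), (0.135, 2001)]
    print(rtt_samples(sent, acks))
```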

Wireshark can measure the round-trip time on a path so you can see whether latency is the cause of poor Transmission Control Protocol (TCP) performance. TCP is used by many applications, including web browsing, data transfer, and the File Transfer Protocol (FTP). In many cases, the operating system can be tuned to perform better on high-latency paths, notably when hosts are running Windows XP.
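Why does latency hurt TCP so much? A single TCP connection can have at most one window of unacknowledged data in flight per round trip, so its throughput is bounded by window size divided by RTT. The short sketch below does that arithmetic; it assumes no packet loss, ignores header overhead, and the function names are illustrative.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on one connection's throughput: one full window per round trip."""
    return window_bytes * 8 / rtt_seconds / 1_000_000


def required_window_bytes(link_mbps: float, rtt_seconds: float) -> float:
    """Bandwidth-delay product: the window needed to keep a link of this speed full."""
    return link_mbps * 1_000_000 / 8 * rtt_seconds


if __name__ == "__main__":
    for rtt in (0.02, 0.2, 1.0):
        print(f"64 KB window, RTT {rtt * 1000:.0f} ms: "
              f"{max_tcp_throughput_mbps(65_535, rtt):.2f} Mbit/s max")
    print(f"Window needed for 100 Mbit/s at 100 ms: {required_window_bytes(100, 0.1):,.0f} bytes")
```

With a 65,535-byte window, a one-second round trip caps the connection at roughly 0.52 Mbit/s no matter how fast the link is, which is why tuning the window on high-latency paths matters.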

Application Dependencies

Some applications depend on other applications, processes, or hosts. For example, if your database application connects to other servers to fetch database items, sluggish performance on those servers can slow down the local application’s load time.

Web browsing is a common example, because the target page often pulls content from several other websites. For instance, loading the main page of www.espn.com requires contacting 16 hosts that supply ads and content for that page.

In the figure above, Wireshark’s HTTP Load Distribution window (Statistics > HTTP > Load Distribution) lists all the servers used by the www.espn.com home page.
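The idea behind that window can be sketched in a few lines of Python: count how many requests went to each host. The URLs below are hypothetical placeholders, not the actual hosts behind www.espn.com; in a real capture they would come from the HTTP requests themselves.

```python
from collections import Counter
from urllib.parse import urlsplit


def hosts_contacted(urls: list[str]) -> Counter:
    """Count requests per host, similar in spirit to an HTTP load distribution view."""
    return Counter(urlsplit(url).hostname for url in urls)


if __name__ == "__main__":
    requests = [
        "https://www.example.com/",
        "https://cdn.example.org/app.js",
        "https://cdn.example.org/style.css",
        "https://ads.example.net/banner",
    ]
    per_host = hosts_contacted(requests)
    print(f"{len(per_host)} hosts contacted")
    for host, count in per_host.most_common():
        print(host, count)
```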

Packet loss

Packet loss is one of the most common problems I find on networks. It occurs when data packets are not delivered correctly from sender to recipient. When a user visits a website and begins downloading the site’s elements, lost packets cause retransmissions, which increase the time needed to download the web files and slow the overall download.

Packet loss is particularly damaging when an application uses TCP. When a TCP sender detects a dropped packet, it automatically slows its transmission rate to compensate for the network problem.

It then gradually ramps back up to a more acceptable rate until the next packet is dropped, so repeated losses significantly reduce data throughput. Large file downloads, which should otherwise flow easily across a network, suffer badly from packet loss.
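This sawtooth behavior can be illustrated with a simplified additive-increase/multiplicative-decrease (AIMD) model in the style of classic TCP Reno congestion avoidance: the window grows by one segment per round trip and is halved on a loss. This Python sketch ignores slow start, timeouts, and modern algorithms such as CUBIC, and the loss rounds are made-up inputs.

```python
def segments_delivered(rounds: int, loss_rounds: set[int], start_cwnd: int = 10) -> int:
    """Total segments sent over `rounds` round trips under a simple AIMD model.

    loss_rounds: round indices (0-based) in which a packet loss is detected.
    """
    cwnd = start_cwnd  # congestion window, in segments
    total = 0
    for r in range(rounds):
        total += cwnd  # one full window is sent each round trip
        if r in loss_rounds:
            cwnd = max(1, cwnd // 2)  # multiplicative decrease after a loss
        else:
            cwnd += 1  # additive increase: one more segment per round trip
    return total


if __name__ == "__main__":
    print("no loss:     ", segments_delivered(20, set()))
    print("three losses:", segments_delivered(20, {5, 10, 15}))
```

Over 20 round trips starting from a 10-segment window, the loss-free run delivers 390 segments, while three losses cut that to 177.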

What does packet loss look like? It depends. If the application runs over TCP, packet loss can show up in two ways. In the first, the receiver tracks packets by their sequence numbers and detects a missing one. It then sends duplicate acknowledgments asking for the missing packet, and after three duplicates the sender retransmits it (a fast retransmission). In the second, the sender notices that the receiver has not acknowledged a data packet, times out, and retransmits the packet.
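The first pattern can be spotted in a stream of ACK numbers. The sketch below flags each ACK number that is repeated three times after its first appearance, the usual fast-retransmit threshold. It is a simplification: a true duplicate ACK (and Wireshark’s tcp.analysis.duplicate_ack flag) also requires details such as the segment carrying no data and advertising the same window, and the input list here is hypothetical.

```python
def fast_retransmit_points(ack_numbers: list[int], dup_threshold: int = 3) -> list[int]:
    """Return ACK numbers that were duplicated `dup_threshold` times in a row.

    Each returned value is the sequence number the receiver keeps asking for,
    i.e. the start of the data a sender would fast-retransmit.
    """
    triggered = []
    last_ack = None
    dup_count = 0
    for ack in ack_numbers:
        if ack == last_ack:
            dup_count += 1
            if dup_count == dup_threshold:
                triggered.append(ack)
        else:
            last_ack = ack  # a new ACK number resets the duplicate counter
            dup_count = 0
    return triggered


if __name__ == "__main__":
    # Hypothetical ACK stream: the segment starting at 2001 is lost, later segments
    # trigger duplicate ACKs, then the retransmission fills the gap and the ACK jumps.
    print(fast_retransmit_points([1001, 2001, 2001, 2001, 2001, 5001]))
```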

Wireshark color-codes this traffic, flagging the duplicate acknowledgments and the retransmissions they trigger as signs of possible congestion; you can also isolate them with the display filters tcp.analysis.duplicate_ack and tcp.analysis.retransmission. A high number of duplicate acknowledgments indicates packet loss and significant delay on the network.

Pinpointing exactly where packet loss occurs is critical to improving network speed. When we see packet loss, we move the Wireshark capture point along the path until the loss is no longer visible. At that point we are “upstream” of where packets are being dropped, so we know where to focus our debugging efforts.
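This procedure amounts to comparing captures taken at several points along the path. The sketch below assumes you can identify the same packets at each point (here, by a hypothetical packet ID such as the IP identification field) and returns the two adjacent capture points between which packets first go missing. The point names are placeholders.

```python
from typing import Optional


def locate_drop(
    points: list[tuple[str, set[int]]], sent: set[int]
) -> Optional[tuple[str, str]]:
    """Find the first hop where packets go missing.

    points: (capture point name, packet IDs seen there), ordered from sender to receiver.
    sent:   packet IDs captured at the sender.
    Returns (last point with no loss, first point with loss), or None if nothing was lost.
    """
    previous = "sender"
    for name, seen in points:
        if sent - seen:  # some packets the sender sent never reached this point
            return previous, name
        previous = name
    return None


if __name__ == "__main__":
    sent = set(range(1, 101))
    captures = [
        ("core-switch", set(sent)),
        ("wan-router", sent - {42, 77}),
        ("client", sent - {42, 77}),
    ]
    print(locate_drop(captures, sent))  # loss first appears between core-switch and wan-router
```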

Intercepting Devices

Interconnecting devices that make forwarding decisions, such as switches, routers, and firewalls, act as the network’s traffic cops. When packet loss occurs, these devices should be investigated as a likely cause.

These devices can also add latency to the path. For example, if traffic prioritization is enabled, a stream with a low priority level may have extra latency injected into it.
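One way to check whether a device adds latency or drops packets is to capture on both sides of it and compare when the same packets pass each capture point. The sketch below assumes both captures share a synchronized clock (for example, two interfaces on the same capture host) and that packets can be matched by some identifier; the dictionaries and IDs are hypothetical.

```python
from statistics import median


def device_latency(
    ingress: dict[int, float], egress: dict[int, float]
) -> tuple[float, float, int]:
    """Compare captures taken on both sides of a device.

    ingress/egress map a packet ID to its capture timestamp (seconds) before and
    after the device. Returns (median added delay ms, max added delay ms,
    number of packets that never appeared on the egress side).
    """
    delays = [(egress[pid] - t) * 1000 for pid, t in ingress.items() if pid in egress]
    if not delays:
        raise ValueError("no packets were seen on both sides of the device")
    missing = len(ingress) - len(delays)
    return median(delays), max(delays), missing


if __name__ == "__main__":
    before = {1: 0.000, 2: 0.010, 3: 0.020}
    after = {1: 0.002, 2: 0.014}  # packet 3 never made it through
    print(device_latency(before, after))
```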

Inefficient Window sizes

Aside from the Microsoft operating system, there are other “windows” in TCP/IP networking.

- Sliding Window

- Receiver Window

- Congestion Window

Together, these windows determine the performance of TCP-based communication on the network. Let’s start by defining each of these windows and its impact on network throughput.

Sliding Window

The sliding window determines which TCP segments can be sent next: as data is acknowledged, the window slides forward and the next segments are sent over the network. As the sender receives acknowledgments for transmitted data, the sliding window expands, and as long as no transmissions are lost, larger amounts of data can be transferred. When a packet is lost, the sliding window shrinks because the network cannot handle the increased amount of data on the line.
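The core mechanism, a sender that may have at most a window’s worth of unacknowledged segments in flight, can be sketched as follows. To keep it short, this Python sketch assumes a fixed window size and that every in-flight segment is acknowledged once per round trip, so each round the window slides forward by a full window; it does not model the window growth or shrinkage described above.

```python
def send_rounds(total_segments: int, window: int) -> list[list[int]]:
    """Return the segment numbers sent in each round trip with a fixed-size sliding window."""
    if window < 1:
        raise ValueError("window must be at least one segment")
    base = 0          # oldest unacknowledged segment
    next_to_send = 0  # next segment number not yet sent
    rounds = []
    while base < total_segments:
        batch = []
        # Send while the number of segments in flight stays within the window.
        while next_to_send < total_segments and next_to_send - base < window:
            batch.append(next_to_send)
            next_to_send += 1
        rounds.append(batch)
        base = next_to_send  # assumption: everything in flight is ACKed, so the window slides forward
    return rounds


if __name__ == "__main__":
    for i, batch in enumerate(send_rounds(10, 4), start=1):
        print(f"round {i}: segments {batch}")
```

A window of 4 needs three round trips to move 10 segments, while a window of 10 needs only one, which is why window size matters so much on high-latency paths.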

Receiver Window

The receiver window is buffer space in the TCP stack. Received data is stored in this buffer until the application picks it up. If the application does not keep up with the receive rate, the receiver window fills up, eventually leading to a “zero window” condition. When a receiver advertises a zero window, all data transmission to that host must stop, and throughput drops to zero. Window Scaling (RFC 1323) lets a host advertise a receiver window larger than the 65,535 bytes that fit in the TCP header’s 16-bit window field, lowering the likelihood of a zero window condition.
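With window scaling, the value in the 16-bit window field is shifted left by a scale factor that each side announces during the handshake, with a maximum shift of 14. The sketch below computes the effective receiver window from those two numbers, similar to the calculated window size Wireshark displays; the function names are illustrative.

```python
MAX_WINDOW_FIELD = 65_535  # largest value a 16-bit window field can hold
MAX_SCALE_SHIFT = 14       # largest shift count allowed by the window scale option


def effective_receive_window(window_field: int, scale_shift: int = 0) -> int:
    """Bytes the receiver can accept: the advertised field value shifted by the scale factor."""
    if not 0 <= window_field <= MAX_WINDOW_FIELD:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= scale_shift <= MAX_SCALE_SHIFT:
        raise ValueError("window scale shift must be between 0 and 14")
    return window_field << scale_shift


def is_zero_window(window_field: int, scale_shift: int = 0) -> bool:
    """A zero window means the sender must stop sending new data to this receiver."""
    return effective_receive_window(window_field, scale_shift) == 0


if __name__ == "__main__":
    print(effective_receive_window(65_535))     # 65535: the most an unscaled window allows
    print(effective_receive_window(65_535, 7))  # 8388480: scaled by 2**7
    print(is_zero_window(0, 7))                 # True: transmission must pause
```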

The figure above shows a 32-second delay in network communications caused by a zero window condition.

Congestion Window

The congestion window limits how much data the sender puts on the network before receiving acknowledgments, based on how much the network can handle. The sender’s packet transmission rate, the network’s packet loss rate, and the receiver’s window size all contribute to this figure. In a healthy connection, the congestion window grows steadily until the transfer completes or it reaches a “ceiling” set by the network’s health, the sender’s transmit capabilities, or the receiver’s window size. Each new connection starts this window-growth process all over again.
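The growth toward a ceiling can be sketched with a simplified model: the congestion window doubles every round trip during slow start, grows by one segment per round trip after reaching the slow-start threshold, and the sender never exceeds the receiver’s advertised window. The Python sketch below counts windows in whole segments; the threshold and window values are arbitrary assumptions, and real TCP stacks add many refinements.

```python
def window_per_rtt(rounds: int, rwnd: int, ssthresh: int, initial_cwnd: int = 1) -> list[int]:
    """Segments the sender may have in flight in each round trip (simplified model)."""
    cwnd = initial_cwnd  # congestion window, in segments
    in_flight = []
    for _ in range(rounds):
        in_flight.append(min(cwnd, rwnd))  # never exceed the receiver's advertised window
        if cwnd < ssthresh:
            cwnd *= 2  # slow start: doubles every round trip
        else:
            cwnd += 1  # congestion avoidance: one more segment per round trip
    return in_flight


if __name__ == "__main__":
    print(window_per_rtt(10, rwnd=20, ssthresh=16))
```

With a 20-segment receiver window and a threshold of 16, the first ten round trips carry 1, 2, 4, 8, 16, 17, 18, 19, 20, and 20 segments: once the congestion window passes 20, the receiver’s window becomes the ceiling.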

Tips for a Healthy Network

- Learn to use Wireshark as a first-response tool to quickly and efficiently find the source of poor performance.

- Identify the source of network path latency and, if possible, reduce it to an acceptable level.

- Locate and resolve the source of packet loss.

- Examine the advertised window sizes and, if possible, increase them.

- Examine intercepting devices’ performance to see if they add latency or drop packets.

- Optimize applications so that they can send larger amounts of data and, if possible, pick up data from the receiver window quickly.

Wrapping Up 👨🏫

We’ve covered the main causes of network performance problems, but one factor shouldn’t be overlooked: a lack of understanding of how network communications behave. Wireshark provides visibility into the network just as X-rays and CAT scans provide visibility into the human body, enabling accurate and prompt diagnoses. It has become a vital tool for locating and diagnosing network problems.

You should now be able to examine and resolve network performance problems using Wireshark’s filters and tools. 👍