Explainable AI (XAI) is an emerging concept, and demand for it among businesses is growing because it helps them interpret and explain artificial intelligence (AI) and machine learning (ML) models.

In today's data-driven world, AI sits at the center of attention.

Many industries use different types of AI solutions across a large number of operations to support their growth and keep those operations running smoothly.

XAI helps you understand how these AI models work and produce their outputs.

If you are still wondering why you should care about XAI, this article is for you.

Let’s start!

What Is Explainable AI?

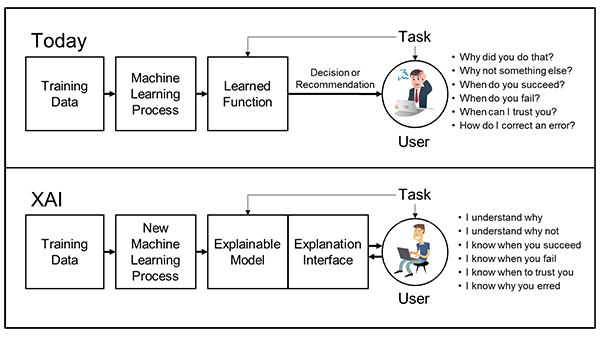

Explainable AI (XAI) is a set of methods and processes focused on helping users understand and trust the outputs and results of AI models. Basically, XAI gives users insight into how a complex machine learning algorithm works and what logic drives the model's decision-making.

Through its frameworks and tools, XAI helps developers and organizations add a layer of transparency to a given AI model so that users can understand the logic behind its predictions. This is advantageous in an organization's ecosystem where AI is implemented in various ways, as it improves the AI's accuracy, outcomes, and transparency.

Furthermore, XAI helps reveal the biases and issues that complex ML algorithms might introduce while working toward a specific outcome. The black box model produced by a complex ML algorithm is almost impossible to understand, even for the data scientists who created the algorithm.

So, the transparency that XAI brings helps an organization figure out how to properly use the power of AI and make the right decisions. XAI mainly presents its explanations as text or visuals that offer insights into the internal functioning of AI models.

To explain additional metadata about the AI model, XAI also uses other explanation mechanisms: feature relevance, simplified explanations, and explanation by example.

The demand for XAI is increasing rapidly as more and more organizations implement it in their ecosystems. As it evolves, the techniques and processes for producing written explanations are also improving.
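
Feature relevance can be illustrated with a small sketch. The example below uses permutation importance, one common way to estimate relevance: shuffle one input feature and measure how much the model's error grows. The `black_box` function, its weights, and the synthetic data are all hypothetical stand-ins, not part of any specific XAI product.

```python
import random

def black_box(row):
    # Hypothetical stand-in for an opaque model: it relies heavily on
    # feature 0, slightly on feature 1, and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]

def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(model, rows, targets, feature, seed=0):
    """Increase in error after shuffling the values of one feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled_rows = [
        r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)
    ]
    return mean_squared_error(model, shuffled_rows, targets) - baseline

# Synthetic data: 200 rows with three features drawn uniformly from [0, 1).
data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
targets = [black_box(r) for r in rows]

importances = [
    permutation_importance(black_box, rows, targets, f) for f in range(3)
]
for f, score in enumerate(importances):
    print(f"feature {f}: importance {score:.4f}")
```

A feature the model depends on produces a large error increase when shuffled, while a feature the model ignores produces none, which gives a simple ranking of relevance without opening up the model itself.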

Why Does XAI Matter?

Nowadays, most organizations use AI models in their business operations and plan their future moves according to the AI's predictions. However, these predictions are only partially accurate, and bias is a predominant problem in most AI models.

These biases stem from different factors and influence the decisions of AI models. Notably, an AI model's decisions often degrade when it is fed production data that differs from its training data.

Moreover, ML models and neural networks can be daunting to explain, and data scientists often find them impossible to interpret. If you fully trust every decision an AI model makes for your company's growth, it could cause unforeseen issues and hamper that growth.

Therefore, it becomes vital for an organization to have a complete understanding of the AI decision process and the logic behind every outcome these AI models put forward. This is where XAI comes in as a handy tool that helps organizations get a full explanation of a given AI model's decision-making process, along with the logic it uses.

From understanding machine learning algorithms to evaluating neural networks and deep learning networks, XAI helps you monitor everything and get accurate explanations for every decision. When you implement XAI, it becomes more straightforward to assess the accuracy and accountability of every AI decision and then decide whether it is suitable for your organization.

Moreover, XAI is an essential component of implementing and maintaining a responsible AI model in your business ecosystem, one that yields fair and accurate decisions. XAI also helps maintain trust among end users while curbing security and compliance risks.
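
One way to notice when production data has drifted away from training data is to compare the distribution of a feature in both. The sketch below uses the Population Stability Index (PSI) as an example metric. The bin count, the sample values, and the 0.25 alert threshold are illustrative assumptions (0.25 is a frequently quoted rule of thumb, not a standard).

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a training sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so production values outside the training range
            # land in the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Made-up samples of a single feature.
training = [i / 100 for i in range(100)]                 # spread over [0, 1)
production_same = list(training)                         # no drift
production_shifted = [0.5 + i / 200 for i in range(100)] # upper half only

psi_same = population_stability_index(training, production_same)
psi_shifted = population_stability_index(training, production_shifted)

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff
print(f"no drift: {psi_same:.3f}, drift: {psi_shifted:.3f}")
print("investigate" if psi_shifted > ALERT_THRESHOLD else "ok")
```

A check like this does not explain a model's decisions, but it flags the situation in which those decisions are most likely to degrade.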

How Does XAI Work?

Modern AI technologies implemented in businesses produce their outcomes or decisions using different models. But these technologies don't explain how they arrived at a result or the logic behind a decision.

To fill this gap, businesses are now implementing XAI, which uses an explainable model and all the metadata information with an explanation interface to help understand how the AI model works.

When AI technology is incorporated into a business ecosystem, AI methods are introduced at different levels. These AI methods are machine learning (ML), machine reasoning (MR), and integration between MR and ML.

To be precise, the components that XAI introduces in an existing AI model are explanation, explainability of data, MR explainability, and ML explainability. Plus, XAI also introduces interpretability and explainability between MR and ML.

The way XAI works falls into three categories:

- Explainable Data: It highlights the data type and contents that are being used to train the AI model. Plus, it showcases the reason behind the choices, the process of choosing, and reports of efforts needed to remove bias.

- Explainable Predictions: Here, XAI puts forward all the features that the AI model has used to obtain the output.

- Explainable Algorithms: These reveal all the layers in an AI model and explain how each layer helps in producing the ultimate output.

However, explainable predictions and explainable algorithms are still in the development stage, and only explainable data can be used for explaining neural networks.
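
For simple, transparent models, the idea behind explainable predictions is easy to show. The sketch below splits one prediction of a linear model into per-feature contributions relative to a baseline input. The feature names, weights, and input values are made up for illustration; real systems with complex models need approximation techniques instead of this exact decomposition.

```python
FEATURES = ["income", "debt", "years_employed"]  # hypothetical feature names
WEIGHTS = [2.0, -1.0, 0.5]                       # hypothetical learned weights
BIAS = 1.0

def predict(row):
    """A transparent linear model standing in for the AI system."""
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, row))

def explain(row, baseline):
    """Split the gap between this prediction and the baseline prediction
    into one contribution per feature."""
    return {
        name: w * (x - b)
        for name, w, x, b in zip(FEATURES, WEIGHTS, row, baseline)
    }

baseline = [1.0, 2.0, 4.0]   # a made-up "typical" input
applicant = [3.0, 1.0, 8.0]  # the input whose prediction we want explained

contributions = explain(applicant, baseline)
print(f"baseline prediction: {predict(baseline):.1f}")
print(f"this prediction:     {predict(applicant):.1f}")
# List features from most to least influential.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {value:+.1f}")
```

For a linear model, the contributions add up exactly to the difference between the explained prediction and the baseline prediction, so the explanation accounts for the whole output.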

To explain the decision process, XAI utilizes two approaches:

- Proxy Modeling: In this approach, an approximate model that differs from the original is used. Its results are approximations, so they may differ from what the actual model produces.

- Design for Interpretability: This is a popular approach in which the model is designed to be easy for human users to understand. However, these models lack accuracy or predictive power when compared with actual AI models.
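
Proxy modeling can be sketched in a few lines. In the example below, a one-rule "decision stump" is fitted to imitate a black box, and its fidelity (how often the proxy agrees with the original) is measured. The `black_box` rule and the grid of inputs are hypothetical; the point is only that the proxy is readable but not identical to the model it approximates.

```python
def black_box(row):
    # Hypothetical opaque classifier with a non-linear decision rule.
    return 1 if row[0] * row[0] + 0.2 * row[1] > 0.5 else 0

def fit_stump(rows, labels):
    """Find the single 'feature > threshold' rule that best mimics the labels.

    Returns (fidelity, feature_index, threshold).
    """
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({r[feature] for r in rows}):
            preds = [1 if r[feature] > threshold else 0 for r in rows]
            agreement = sum(p == y for p, y in zip(preds, labels)) / len(rows)
            if best is None or agreement > best[0]:
                best = (agreement, feature, threshold)
    return best

# A regular grid of inputs: 20 values of feature 0 times 10 values of feature 1.
rows = [[i / 19, j / 9] for i in range(20) for j in range(10)]
labels = [black_box(r) for r in rows]  # the proxy learns from model outputs

fidelity, feature, threshold = fit_stump(rows, labels)
print(f"proxy rule: predict 1 if feature {feature} > {threshold:.3f}")
print(f"fidelity to the black box: {fidelity:.1%}")
```

The proxy's rule is trivial to read, but any fidelity below 100% means that on some inputs its explanation describes a decision the real model did not make, which is the trade-off this approach carries.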

Benefits of XAI

Explainable AI or XAI has a significant impact on the boom of AI because it helps humans understand how an AI works. It offers many benefits, such as:

Improves Transparency and Trust

Businesses implementing AI models into their systems can understand how a complex AI model works and why it produces specific outputs under different conditions.

XAI is also highly useful for businesses to understand the reason behind the outcome of black box models. Thus, it improves the transparency and trust between machine and human users.

Enhanced Adoption of AI Models

As organizations come to understand the workings and logic behind an AI model and recognize its benefits, the adoption rate of AI models increases. They can also place more trust in the models' decisions because proper explanations are available.

Boost in Productivity

With XAI adopted alongside the AI model, ML operations (MLOps) teams can easily find errors and areas that need improvement in the existing system. It also helps these teams keep AI processes running smoothly and efficiently.

Thus, there will be a significant boost in productivity because the MLOps team can understand the logic that leads the AI model to produce a particular output.

Reduced Risk and Cost

XAI has been instrumental in reducing the cost of AI model governance. Since it explains all the outcomes and areas of risks, it minimizes the need for manual inspection and the chance of costly errors that will hamper relations with end users.

Uncover New Opportunities

When your technical and business teams get insights into AI decision-making processes, they get a chance to uncover new opportunities. Taking a deeper look at specific results can reveal things that weren't visible at first.

Challenges of XAI

Some common challenges of XAI are:

- Difficulty in interpretation: Many ML systems are difficult to interpret, so the explanations that XAI provides can be difficult for users to understand. When a black box strategy is unexplainable, it may cause severe operational and ethical issues.

- Fairness: It is pretty challenging for XAI to determine whether a particular decision of an AI is fair or not. Fairness is entirely subjective, and it depends upon the data on which the AI model has been trained.

- Security: One of the significant problems with XAI is that clients can perform actions to change the decision-making process of the ML model and influence the output for their own benefit. Any technical staff can only recover the dataset used by the algorithm for training.
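
The fairness challenge becomes concrete once you try to measure it. The sketch below computes demographic parity difference, the gap in approval rates between groups. It is only one of several competing fairness definitions, and the decisions and group labels are made up for illustration.

```python
def selection_rate(decisions):
    """Share of positive (1) decisions."""
    return sum(decisions) / len(decisions)

def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest per-group selection rates."""
    rates = {
        g: selection_rate([d for d, gg in zip(decisions, groups) if gg == g])
        for g in set(groups)
    }
    return max(rates.values()) - min(rates.values()), rates

# Made-up model decisions (1 = approved) for two hypothetical groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(decisions, groups)
print(rates)  # per-group approval rates
print(gap)    # 0.0 would mean equal approval rates
```

A gap of zero under this metric does not guarantee fairness under other definitions, which is part of why judging an AI decision as fair remains difficult.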

Difference Between XAI and AI

Even though XAI and AI are closely related, there are some fundamental differences between them.

| Explainable AI | AI |
|---|---|
| Explainable AI provides an explanation or the logic of the decision-making process of a complex ML model. | Artificial intelligence only provides the verdict or output of the decision made by an ML model. |
| Since XAI provides a proper explanation, it helps users have more trust in a particular AI model. | With AI, you only get the verdict, and users are left wondering how the AI reached its conclusion. So, its decisions are harder to trust. |
| It reduces the biases that are associated with many AI models. | It often makes decisions based on biases in the world. |
| It reduces the cost of mistakes made by the AI model. | AI models are not 100% accurate, and they can make wrong predictions. If an AI model makes a wrong prediction, it could lead to a loss for the business. |
| XAI has yet to fully evolve, as there are still limitations, especially when it comes to explaining complex black boxes. | AI as a whole has evolved a lot, and it can quickly solve a lot of problems without much hassle. |
| Since XAI explains the logic and decision process of an AI model, anyone can more easily influence the process for ill means. | AI models don't provide the main reason or logic behind their final prediction, so there is hardly a chance for someone to influence their decisions. |

Impact of XAI on Different Sectors

The arrival of XAI has made a significant impact in different sectors where AI plays a crucial role in decision-making. Let’s take a look at them.

#1. Finance

AI-powered solutions are prevalent in finance, and most companies use them for different tasks. However, the finance sector is heavily regulated, and financial decisions require a lot of auditing.

XAI can help here by putting forward the justification behind a financial decision. It is instrumental in retaining the trust of end users because they will understand the reason behind a financial decision that an organization makes.

#2. Healthcare

The inclusion of XAI has benefited healthcare systems in a variety of ways. It can help detect and diagnose many diseases and point out the root cause. This can help doctors provide the correct treatment.

#3. Manufacturing

Nowadays, AI models are heavily used in manufacturing for applications like management, repair, and logistics. But these AI models are not always consistent, which leads to trust issues.

XAI has solved many of these issues, as it can suggest the best possible approach for many manufacturing applications along with an explanation. It shows workers the logic and reasoning behind a particular decision, which ultimately helps them build trust.

#4. Automobile

The integration of XAI in self-driving cars has been important because it allows a vehicle to provide a rationale for each decision it makes during an accident. By learning from different situations and accidents, XAI helps autonomous cars make decisions and improve the overall safety of passengers as well as pedestrians.

Learning Resources

Apart from the above information, here are a few books and courses that you can consider if you want to learn more about XAI.

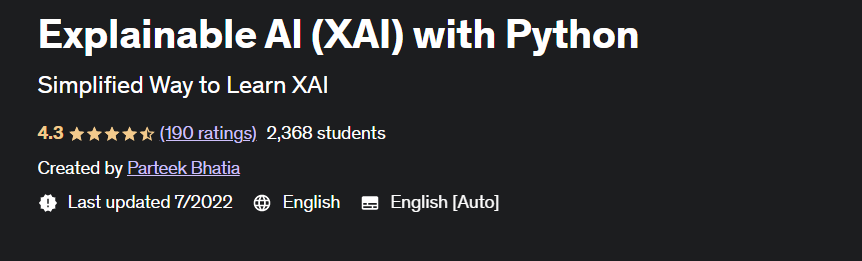

#1. Explainable AI With Python

The Explainable AI with Python course on Udemy is designed by Parteek Bhatia. It will help you learn different aspects of XAI, including its applications, various XAI techniques, and the What-If Tool from Google. You will also find XAI categorized along various aspects.

#2. Explainable Artificial Intelligence

Written jointly by Uday Kamath and John Liu, Explainable Artificial Intelligence: An Introduction to Interpretable Machine Learning is an excellent book for learning interpretability in machine learning.

You will find many case studies and associated materials for learning XAI. The authors of this book have provided many practical examples that come in handy in understanding XAI.

#3. Hands-On Explainable AI (XAI) With Python

Hands-On Explainable AI (XAI) with Python is a well-known book authored by Denis Rothman.

It offers a detailed study of XAI tools and techniques for understanding AI results, which are needed for modern businesses. You will also learn to handle and avoid various issues related to biases in AI.

Conclusion

Explainable AI is an effective concept that makes it easier for business owners, data analysts, and engineers to understand the decision-making process of AI models. XAI can help interpret complex ML models that even data scientists find impossible to decode.

Even though it is making progress with time, there are still areas where it needs to improve. I hope this article gives you better clarity on XAI, how it works, its benefits and challenges, and its applications in different sectors. You can also refer to the above-mentioned courses and books to learn more about XAI.